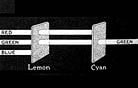

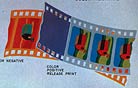

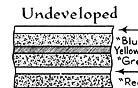

Television, on the other hand, can cause imaging experts to reach for the Advil. There is more than one color space. No two cameras match exactly. A host of intermediate equipment and personnel, broadcast nuances, different phosphors on televisions and network politics all come into play. Television has a complicated and tangled history, and this article doesn't have the bandwidth to explain it all. Nevertheless, I will attempt a brief overview. In 1884, the brilliant inventor Nikola Tesla came to the U.S. and went to work getting the bugs out of Edison's DC (direct current) system of electricity. (A major drawback of the DC system is that the farther electricity has to travel, the more the voltage drops.) After Edison gave Tesla the shaft, the Croatian-born immigrant devised the AC (alternating current) system, which allowed electricity to travel great distances by wire. Tesla chose a 60Hz cycle because anything lower than that introduced flicker into streetlamps. In 1888, inventor George Westinghouse signed Tesla to a contract, and despite the Edison group's best attempts to discredit Tesla and the AC system (which cost Edison a potential windfall in the millions), Westinghouse managed to install it in the U.S. (Edison's smear campaign did keep Tesla from receiving credit for many of his innovations for decades.)

In the ensuing years, a number of other battles emerged in the field of television: Philo Farnsworth vs. Vladimir Zworykin and RCA Victor; DuMont Laboratories vs. General Electric vs. RCA-NBC; the list goes on. In the early 1930s, the British Broadcasting Corporation kick-started the U.S. drive toward TV with a short-range broadcast to about 1,000 test subjects. Americans, with their wait-and-see attitude, realized how far behind they were.
Because flicker needed to be reduced, both Farnsworth and Zworykin agreed that a minimum of 40 pictures per second was necessary (a standard they adapted from the motion-picture industry, in which each frame of 24-fps film was projected twice, for 48 flashes per second). The bandwidth required for such a signal was greater than the technology allowed. The solution was interlace, which splits each picture into two alternating fields, and a rate of 30 frames (60 fields) per second was selected to match the screen refresh rate to the 60Hz power source. This rate also fit within the bandwidth available at the time and didn't reduce spatial resolution. The original 343-line process was upped to 441 lines in the mid-1930s, then to 525 lines for black-and-white in 1941 by the year-old National Television System Committee (NTSC), but the picture's aspect ratio was locked from the get-go at 4:3 to mimic the 1.33:1 cinematic frame of the time.

Color was inevitable. After a few years of manufacturer skirmishes, the FCC adopted the CBS field-sequential color system in 1950, but manufacturers and broadcasters clamored for compatibility with existing black-and-white TV sets, which meant that a color signal had to be shoehorned into the bandwidth of the black-and-white transmission signal. In December 1953, the FCC approved the compatible color system developed by the NTSC. Bandwidth was tight because TV's "wise men" thought that 13 channels would be plenty. Color information for television is based on red, green and blue, so three signals are needed. Engineers added two to the black-and-white composite signal and used the existing black-and-white signal (the luminance) as the third. This is modeled in the YIQ color space, where $Y = 0.30R + 0.59G + 0.11B$, $I = 0.60R - 0.28G - 0.32B$ and $Q = 0.21R - 0.52G + 0.31B$. In the conversion from YIQ back to RGB at the television set, I and Q are decoded into color-difference signals (R−Y, G−Y and B−Y), which are added back to Y to produce the red, green and blue channels, while Y still serves its original luminance function.
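To make the encoding concrete, here is a minimal Python sketch that applies the rounded coefficients quoted above and recovers RGB by inverting the same 3×3 matrix. The function names are hypothetical, and the sketch ignores details of real NTSC equipment such as more precise coefficients and gamma-corrected signals. It also shows why the scheme was compatible: any neutral gray (R = G = B) yields I = Q = 0, so a black-and-white set that displays only Y still shows a correct picture.

```python
# Rounded YIQ coefficients as quoted in the text (rows: Y, I, Q; columns: R, G, B).
RGB_TO_YIQ = [
    [0.30, 0.59, 0.11],
    [0.60, -0.28, -0.32],
    [0.21, -0.52, 0.31],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def invert3(m):
    """Invert a 3x3 matrix with the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


# The decoder is just the inverse of the encoder matrix.
YIQ_TO_RGB = invert3(RGB_TO_YIQ)


def rgb_to_yiq(rgb):
    """Camera side: RGB -> (Y, I, Q) for transmission."""
    return mat_vec(RGB_TO_YIQ, rgb)


def yiq_to_rgb(yiq):
    """Receiver side: (Y, I, Q) -> RGB for the picture tube."""
    return mat_vec(YIQ_TO_RGB, yiq)


if __name__ == "__main__":
    print([round(x, 3) for x in rgb_to_yiq((1.0, 0.0, 0.0))])  # pure red -> [0.3, 0.6, 0.21]
```

Because each row of I and Q sums to zero, grays carry no chrominance at all; only colored areas put energy into the I and Q signals.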
So television requires a color-space transform from the RGB signals of the camera's pickup tubes to YIQ for transmission, and back again at the television set. I and Q carried their color information on a color subcarrier tucked into previously unused gaps, or sidebands, in the black-and-white composite signal. At the original 30Hz frame rate, harmonics from the nearby audio subcarrier interfered with the color signal, degrading image quality. As a fix, the frame rate was lowered to 29.97Hz to put the color out of phase with the audio, minimizing visible interference. The color signal's amplitude determined saturation, and its phase relative to the colorburst (a brief sinusoidal reference signal sent on each scanline) determined hue.

The first coast-to-coast color broadcast featured the Tournament of Roses Parade on January 1, 1954. However, Michigan State's 28-20 Rose Bowl victory over UCLA that day was not shown in color.

NTSC color does have its faults. The encoding process causes some overlap, or mixing of information, among the three signals, and no filter can separate and reconstruct them perfectly, which impairs quality (see diagram). Also, high-frequency, phase-dependent color signals require timing tolerances that are difficult to maintain. That's why the color and image integrity of an NTSC signal degrade significantly with each generation removed from the original. Despite these faults, NTSC actually has decent color rendition, but there are compromises when it comes to television sets. A major problem lies in cabling: over distance, the NTSC color signal does not maintain phase integrity very well, so by the time it reaches the viewer, color balance is lost (which is why technological wags often deride NTSC as "Never The Same Color" or "Never Twice the Same Color"). Because of this problem, NTSC sets need a tint control (see diagram).
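The 29.97Hz figure mentioned above falls out of a few integer ratios. The sketch below uses the commonly cited NTSC color parameters, which the article itself does not state: a sound carrier 4.5 MHz above the picture carrier, a line rate chosen so that 4.5 MHz is exactly its 286th harmonic, and a color subcarrier at 455/2 times the line rate. Exact fractions show that the resulting frame rate is 30 × 1000/1001; the function names are hypothetical.

```python
from fractions import Fraction

# Commonly cited NTSC parameters (assumed here; not given in the article).
SOUND_OFFSET_HZ = Fraction(4_500_000)  # sound carrier sits 4.5 MHz above the picture carrier
LINES_PER_FRAME = 525


def monochrome_timing():
    """Original black-and-white timing: 30 frames/s x 525 lines/frame."""
    frame_rate = Fraction(30)
    line_rate = frame_rate * LINES_PER_FRAME
    return frame_rate, line_rate


def color_timing():
    """Color timing: pick the line rate so 4.5 MHz is exactly its 286th harmonic."""
    line_rate = SOUND_OFFSET_HZ / 286
    frame_rate = line_rate / LINES_PER_FRAME
    # Subcarrier at 227.5 x line rate, an odd multiple of half the line rate.
    subcarrier = line_rate * Fraction(455, 2)
    return frame_rate, line_rate, subcarrier


if __name__ == "__main__":
    _, mono_line = monochrome_timing()
    # 4.5 MHz is not a whole multiple of the old 15,750 Hz line rate (ratio is 2000/7).
    print(SOUND_OFFSET_HZ / mono_line)
    frame, line, sc = color_timing()
    print(f"{float(frame):.5f} frames/s")  # 29.97003 frames/s
    print(f"{float(line):.2f} lines/s")    # 15734.27 lines/s
    print(f"{float(sc):.2f} Hz subcarrier")  # 3579545.45 Hz subcarrier
    print(frame == Fraction(30) * Fraction(1000, 1001))  # True
```

Keeping the line count at 525 meant the only practical lever was the line rate itself, which is why the frame rate moved by the small factor 1000/1001 rather than to some rounder number.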
The European systems PAL and SECAM have no need for a tint control. PAL, or "phase alternating line," reverses the phase of one of its color components (V) on every other line, which automatically corrects phase errors in transmission by canceling them out. PAL uses a YUV color space, where Y is luminance and U and V are the chrominance components. SECAM ("séquentiel couleur à mémoire," or "sequential color with memory") was developed in France because the French like to do things differently. Functioning in a YDbDr color space, where Db and Dr are the blue and red color differences, SECAM uses frequency modulation to encode the two chrominance components and transmits them one at a time on alternate lines; the receiver supplies the missing component from the one stored from the preceding line. PAL and SECAM are 625-line, 50-field-per-second systems, so there can be a bit of flicker. In the 19th century, the German company AEG selected the less-efficient 50Hz frequency for its first electrical generating facility because 60 did not fit the metric scale. Because AEG had a virtual monopoly on electricity, the standard spread throughout the continent.

The ultimate destinations for broadcast images are television sets, which use cathode-ray tubes (CRTs) to fire three electron beams at the back of a screen coated with red, green and blue phosphors, each beam exciting one color of phosphor. Therefore, no matter what the system's color space, the signal must once again be transformed into RGB at the television. TVs have limited brightness and can reproduce saturated colors only at a high brightness level. Green is saturated naturally at a low brightness; this is where one of those NTSC compromises comes in. Typical motion-picture projection has a sequential (best-case) contrast ratio of about 2,000:1, but it can be more than 50,000:1 with Vision Premier print stock in a good theater. A professional high-definition CRT broadcast monitor is typically set up with a sequential contrast ratio of about 5,000:1.
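The contrast between NTSC's tint problem and PAL's self-correction, described earlier, can be modeled by treating the chrominance as a phasor: a complex number U + jV whose amplitude is saturation and whose angle (measured against the colorburst) is hue. This is an idealized sketch under stated assumptions, not a decoder: it models a transmission fault as a pure phase rotation and assumes two consecutive lines carry the same color. All function names are hypothetical.

```python
import cmath
import math


def hue_and_saturation(chroma):
    """Saturation is the phasor's amplitude; hue is its angle in degrees."""
    return abs(chroma), math.degrees(cmath.phase(chroma))


def rotate(chroma, phase_error_deg):
    """Model a transmission fault as a pure rotation of the chroma phasor."""
    return chroma * cmath.exp(1j * math.radians(phase_error_deg))


def ntsc_received(chroma, phase_error_deg):
    # NTSC: the error passes straight through, so hue shifts by the full error.
    return rotate(chroma, phase_error_deg)


def pal_received(chroma, phase_error_deg):
    # Line n is sent normally (U + jV) and picks up the error.
    line_n = rotate(chroma, phase_error_deg)
    # Line n+1 is sent with V inverted (U - jV), picks up the same error,
    # and the receiver re-inverts V, which turns +error into -error.
    line_n1 = rotate(chroma.conjugate(), phase_error_deg).conjugate()
    # Averaging the two lines cancels the hue error; saturation scales by cos(error).
    return (line_n + line_n1) / 2


if __name__ == "__main__":
    # Hypothetical color: saturation 0.5, hue 60 degrees; 10-degree phase error in transit.
    original = cmath.rect(0.5, math.radians(60))
    for name, fn in [("NTSC", ntsc_received), ("PAL", pal_received)]:
        sat, hue = hue_and_saturation(fn(original, 10.0))
        print(f"{name}: saturation {sat:.3f}, hue {hue:.1f} deg")
    # NTSC: saturation 0.500, hue 70.0 deg
    # PAL: saturation 0.492, hue 60.0 deg
```

With a 10-degree error, the NTSC hue drifts from 60 to 70 degrees, which is exactly what a viewer's tint control has to undo. The PAL average keeps the hue at 60 degrees and pays only a small loss in saturation (a factor of cos 10°, about 0.985).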
The contrast ratio of early standard-definition CRT television sets was very low; today's sets reach much higher ratios thanks to improvements in phosphors and tubes. High brightness and saturated colors give the appearance of good contrast. The TV must also compete with ambient lighting, however dim, in the TV room. So when you get your new TV home and turn it on, you'll find the manufacturer has (incorrectly) set the brightness to "nuclear."
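Cinematographers often think of contrast in stops rather than ratios. Since each stop is a doubling of light, an N:1 contrast ratio spans log2(N) stops. The short sketch below applies that conversion to the contrast figures quoted above; the stop values are an added comparison, not measurements from the original, and the function name is hypothetical.

```python
import math


def contrast_ratio_to_stops(ratio):
    """One stop is a doubling of light, so a ratio of N:1 spans log2(N) stops."""
    if ratio <= 0:
        raise ValueError("contrast ratio must be positive")
    return math.log2(ratio)


if __name__ == "__main__":
    figures = {
        "typical film projection": 2_000,
        "Vision Premier print, good theater": 50_000,
        "HD CRT broadcast monitor": 5_000,
    }
    for label, ratio in figures.items():
        print(f"{label}: {ratio:,}:1 is about {contrast_ratio_to_stops(ratio):.1f} stops")
```

On this scale the three figures come out to roughly 11, 15.6 and 12.3 stops, which makes it easier to see how much of a projected print's range a broadcast monitor can hold.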
