
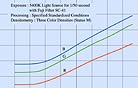

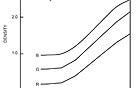

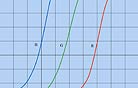

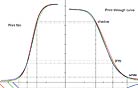

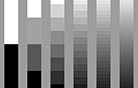

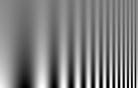

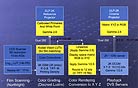

Composite 8-bit D-2 was a replacement for 1" analog tape. It functioned under SMPTE 244M, the recommended practice for bit-parallel digital interface, and was susceptible to contouring artifacts. D-3 was a composite 8-bit videotape recording format using 1⁄2" tape. Because these digital formats were being developed in Japan, D-4 was skipped. (Japanese culture has its superstitions, and the characters for “four” and “death” are both pronounced in Japanese as shi, an ominous association.) D-5 is a common post facility format today because it can record uncompressed Rec 601 component digital video at up to 10-bit depth. With compression of 4:1, it can record 60p signals at 1280x720 and 24p signals at 1920x1080 in 4:2:2. D-6 is a high-definition format that, because of its cost and size, is better suited to storage and is not widely used; it records uncompressed in the ITU-R BT.709 (Rec 709) high-definition standard at full-bandwidth 4:4:4 chroma. D-7 serves Panasonic’s DVCPro standards: 480i 4:1:1 DVCPro with 5:1 compression, 480i 4:2:2 DVCPro50 with 3.3:1 compression, and 1080i 4:2:2 DVCPro HD at 6.7:1 compression (see diagram).

These varying numbers raise the question: What qualifies as high definition? Really, high definition is any format that is even the slightest bit better than the component standard-definition resolution. This has allowed the “hi-def” label to be tossed around rather haphazardly.

1998 marked a turning point, when two teenagers were sucked through their television into the black-and-white world of a 1950s-style TV show, and their presence added a little “color” to the environs. Pleasantville (AC Nov. ’98) was pure fantasy, but the isolation of colors against black-and-white backgrounds was not. The color footage shot by John Lindley, ASC underwent a Philips Spirit DataCine 2K scan at 1920x1440 resolution (which actually is not full 2K resolution).
The data files then were handed over to visual-effects artists who selectively desaturated areas of a given shot. Kodak’s digital laboratory, Cinesite, output the files to a color intermediate stock via a Kodak Lightning recorder. Nearly 1,700 shots were handled in this manner, a very time-consuming visual-effects process.

In telecine, isolating an object or color and desaturating the background is a relatively simple task. Looking at what was done in Pleasantville and what could be done in telecine, filmmakers and industry engineers began putting two and two together; they realized that film images could be converted into digital files, manipulated via telecine-type tools, and then output back to film. This concept, pioneered by Kodak, became known as the digital-intermediate (DI) process, which replaces the traditional photochemical intermediate and color-timing steps. Of great benefit is the ability to skip the image-degrading optical blowup for Super 35mm footage, which can now be unsqueezed in a computer with no loss of resolution.

Many in the industry point to the Coen brothers’ 2000 feature O Brother, Where Art Thou?, shot by Roger Deakins, ASC, BSC, as the first commercial film to undergo a true DI. Deakins was well aware of Pleasantville, and therefore knew that a DI was the only way he could achieve a highly selective, hand-painted postcard look for the Depression-era film without every shot being a visual effect. With the DI still in its less-than-ideal infancy, O Brother was a learning process for all involved, including Cinesite, where a workflow was created somewhat on the fly. Footage was scanned on the DataCine, and the loss of definition from the pseudo-2K scan worked in the film’s favor, further helping to mimic the era’s look.
After spending 10 weeks helping to color-grade the film, Deakins said he was impressed with the new technology’s potential, but remarked in the October 2000 issue of American Cinematographer that “the process is not a quick fix for bad lighting or poor photography.” (This quote can stay in the present tense, of course, because it will be relevant in perpetuity.)

While Deakins was sowing DI seeds at Cinesite, New Zealand director Peter Jackson decided to tackle the Lord of the Rings trilogy all at once. Maintaining consistency in the look of three films, each well over three hours long with extensive visual effects, was a Mount Doom-sized task. Colorist Peter Doyle and cinematographer Andrew Lesnie, ASC, ACS sought the help of a little company in Budapest called Colorfront. In a nutshell, the duo asked Colorfront to build, to their specifications, a hardware/software digital-grading system that had typical telecine tools, but could be calibrated for any lab and could emulate the printer-light system of traditional photochemical color timing.

In integrating the beta system into the workflow, Doyle discovered that the color management of electronic displays quickly became an issue. “At the time, digital projectors just simply were not good enough,” he recalls. “We stayed with using CRTs, but just in getting the monitor to match the print, there was the discussion of defining what we wanted the monitor to really be. Obviously, we had to deviate away from a perfect technical representation of a film print.”

In piecing together a workflow, the filmmakers struck upon a clever idea. To ease the amount of work later in the DI chain, the voluminous number of plates shot for the digital effects were pregraded before they went to the visual-effects artists. This practice has gained in popularity and will soon be standard operating procedure in all workflows.

Lesnie picked up an Academy Award statuette for The Fellowship of the Ring (AC Dec. ’01), and Colorfront teamed up with U.K.-based 5D to release the beta system commercially as 5D Colossus. Colossus, which was later purchased and improved by Discreet and then re-released as Lustre, unleashed a flood of color-grading and color-management tools upon the film industry. This flow of products shows no sign of abating, and, as in the computer industry, updates are unremitting and obsolescence is an ever-present factor. For evidence of this, just take a look around the exposition floor at the annual National Association of Broadcasters Conference in Las Vegas.

With the lack of viable standards, the technologies introduced are often proprietary or do not play well with others in the digital-imaging arena. Could you put 10 basketball players from around the world on a court, each wearing a different uniform and speaking a different language, and expect an organized game? Not without coaches and decent translators. Who better to coach digital imaging than those who have the most experience with creating high-quality visuals, i.e., the members of the ASC Technology Committee?

Many factors are involved in navigating the hybrid film/digital-imaging environment, and to discuss each of them comprehensively would turn this article into a sizable textbook of hieroglyphic equations found in the higher collegiate math courses that I either slept through or skipped altogether. This article isn’t even an overview, something that also would be textbook-sized. Rather, I will analyze some key elements that affect workflow choices, because no two workflows are currently the same; each is tailored specifically to a particular project.

(The Technology Committee is currently producing a “digital primer,” an overview of all things applicable to the digital workflow.
Committee member Marty Ollstein of Crystal Image has been given the responsibility of crafting the document, which will include conclusions drawn from the important work the committee has been pursuing diligently for the past two years. The primer will become a “living” document that will be updated routinely to reflect both technological advances and product developments, along with the results of the committee’s best-practice recommendations.)

Despite decades of Paul Revere-like cries of “Film is dead!”, celluloid is still the dominant recording and presentation medium. Digital, for reasons mentioned earlier, is fast becoming the dominant intermediate medium. Thus, the typical analog-digital-analog hybrid workflow comprises shooting on film, manipulating the images in the digital realm, and then recording back to film for display and archiving purposes (see diagram).

Let’s begin with analog camera negative as the recording medium. As of now, there is no proven digital equal to the dynamic range of camera negative. The negative records as close to an actual representation of the scene we place before it as the emulsion allows, but it is not necessarily how we see things. Film does not take into account differences in human perception, nor our eyes’ ability to adjust white point, as discussed in Part One of this article.

A film’s sensitometric curve is plotted on a graph where the Y axis is density and the X axis is log exposure. The curve determines the change in density on the film for a given change in exposure. The uppermost portion of the curve that trails off horizontally is known as the shoulder, and this is where the negative is reaching maximum density (see diagram). In other words, the more light reacts with the emulsion, the denser it gets, and, of course, a lot of light will yield highlights. What?!
Remember, this is a negative-working material that is making an inverse recording of a scene, so on your negative, bright white clouds will be almost black, and when positively printed, the clouds become a light highlight area. The bottom portion of the curve that also trails off horizontally is known as the toe. Density is lower here because less light has exposed the emulsion, giving you shadows and blacks. The endpoints of the toe and shoulder where the curve flattens to constant densities are known as D-min and D-max, respectively.

Measuring the slope of the curve between two points of the straight-line portion on negative film typically will yield the number .6 or close to it, depending on the stock, and this is called gamma (see diagram). (There is no unit of measurement for this number per se, much like exposure stops aren’t accompanied by one. They are actually ratios, density change to log-exposure change.) Now, measure the density difference between D-min and D-max, and you end up with that negative’s maximum contrast range.
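
For readers who like to see the arithmetic, the two measurements just described can be sketched in a few lines of Python. This is only a minimal illustration: the function names and the sample readings are hypothetical, chosen to land near the .6 figure mentioned above, and are not taken from any stock's published data.

```python
def gamma(log_e1, d1, log_e2, d2):
    """Slope between two points on the straight-line portion of the curve:
    density change divided by log-exposure change (a unitless ratio)."""
    if log_e2 == log_e1:
        raise ValueError("the two points need different log-exposure values")
    return (d2 - d1) / (log_e2 - log_e1)


def density_range(d_min, d_max):
    """Density difference between D-min and D-max."""
    return d_max - d_min


# Hypothetical readings, each given as (log exposure, density).
point_a = (1.0, 0.80)
point_b = (2.0, 1.40)

slope = gamma(*point_a, *point_b)

# Hypothetical D-min and D-max values for the same imaginary stock.
contrast = density_range(0.20, 2.60)

print(f"gamma: {slope:.2f}")
print(f"density range: {contrast:.2f}")
```

Both points must come from the straight-line portion; readings taken in the toe or shoulder would give a lower slope and would not represent the stock's gamma.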
