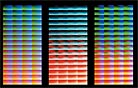

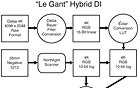

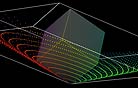

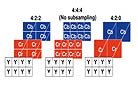

The digital image files with these LUTs applied were recorded to print film via an Arrilaser (see diagram). Had The Aviator undergone a digital cinema release, those LUTs could not have been placed in every digital projector throughout the world, so Pines would have had to do some digital “cooking.” “They will not let me control the [digital] projectors in the theaters,” he reveals, “so I would've had to ‘bake in’ that film look through the mastering process.” Having a look “baked in” means that the file has been changed and saved to permanently reflect that look. It is a way to protect the integrity of the image, because the digital environment opens the process up to meddling by other hands.

Though the LUT is doing the processing, the human element certainly isn’t removed from the process. “We run it through this LUT and get HD video, and we run it through that LUT and get standard definition – it doesn’t work that way,” contends Levinson. “You still need a fair amount of human invention, and that is why we have people trained in color correction doing the scanning. We have the people who will be responsible for the DI color correction overseeing the trimming of the color for some of the other target deliveries.”

The hybrid digital workflow does not have to start with film origination. High-end digital video cameras, such as Sony’s HDW-F900 CineAlta and HDW-F950 (which has data-only output capability) and Panasonic’s VariCam, have been around for several years and record in the Rec 709 high-definition RGB color space. However, they record compressed signals to tape: Sony in 8-bit to heavily compressed (upwards of 7-10:1) HDCam or, in the case of the F950, lesser 2-4:1 compression for 10-bit to HDCam SR, depending on the chroma sampling and the specific recording deck; and Panasonic to heavily compressed DVCPro HD.

“Pandora’s Box is open,” says David Stump, ASC, chair of the Digital Camera subcommittee.
“The future is going to be digital somehow, some way, and will at least include a hybrid of film and digital cameras. However, there is no reason to give up a perfectly good toolset for one that does less. As a cinematographer, you can always raise an eyebrow by suggesting compression. The bad kinds of compression create sampling errors and the okay compressions use a little less disk or tape space.”

There are two kinds of compression, lossy and lossless, and both involve higher-math algorithms to shrink a file’s size to make it more manageable and transportable. No sense in boring you with these algorithm details. (Really, I chose to play flag football that day rather than go to class.) Lossy compression can be of any factor – 10:1, 50:1, 100:1, etc.; the algorithm used causes the loss by throwing out what it calculates to be unnoticeable by the viewer. Chroma sub-sampling is a form of lossy compression. To achieve losslessness, generally only a small amount of compression is applied, but this doesn’t shrink the file size much (see diagram).

The earlier compression is applied within the workflow, the more its errors compound. Errors show up as squares of color rather than picture detail. If compression is necessary, it is best to apply it as close to the last stage of the workflow as possible. Remarks Kennel, “From an image-quality standpoint, it’s risky to apply compression up front in the process, whether it’s in the camera footage or in the image being used in the visual effects compositing process. It’s better to stay with the whole content of the image while you are twisting, stretching, color correcting and manipulating the image. If you apply compression up front and you decide to stretch the contrast or bring detail out of the black during a color-correction session, you may start seeing artifacts that weren’t visible in the original image.
I think compression is a good enabler and cost reducer on the distribution side, just not a good thing up front.”

An algorithm does not necessarily have to be applied to achieve a compressed image file. Compression can occur by the very nature of the workflow itself. The StEM image files were 6K 16-bit TIFF files but were unsqueezed from the original anamorphic camera negative and down-converted to 4K for sharpening. They were then downsampled to 1K 10-bit files for color correcting as proxies on a Lustre. After grading, the files were converted again to 12-bit files in XYZ color space. During the first pass of the material through the workflow, those 12-bit files were being pushed through 10-bit-capable pipelines to a digital projector, meaning 2 bits were lost as the pipeline truncated the files for display purposes. However, the end display and film-out used all 12 bits.

Manufacturers such as Arri (D-20), Panavision (Genesis), Dalsa (Origin) and Thomson (Viper) have developed so-called “data cameras” that can avoid video color space (see diagram). They record uncompressed to hard-disk storage and have better image quality than HD – 4:4:4 chroma sampling, so no short-shrifting subsamples, wider latitude, 10-bit or higher color bit depth and resolution close to 2K and higher. Dalsa claims 4K x 2K and at least 12 linear stops for the Origin and has gone so far as to offer workflow support through post. And Thomson’s Viper, for example, can capture in raw mode, meaning the picture won’t be pretty but will have the most picture information at your disposal in post for possibly endless manipulation.

Michael Mann’s recent film Collateral (AC Aug. ’04), shot by Paul Cameron and Dion Beebe, ASC, ACS, made excellent use of digital-video and digital-data camera technology, in addition to shooting traditional Super 35 film elements that were down-converted to Rec 709 color space to match the predominant high-definition footage (see diagram).
Basically, Rec 709 served as the lowest common denominator among the formats.

Director of photography Sean Fairburn believes that waiting until post to decide on an image’s look (for example, when shooting in the raw format) will lead to a loss of image control for the cinematographer. “If we train a new generation of directors of photography who can’t decide whether to put a filter on the lens or in post,” he asserts, “then maybe the colorist or the director might choose a different filter. I am the one on the set that looks at an actress’s dress and says, ‘This is what it is supposed to look like.’ The colorist doesn’t know that the teal dress was actually teal. If the film or the electronic medium slightly shifts, he is not going to know that it needs to be pulled back to teal. I am the guardian over this image. If I can capture an image that looks closer to where I want to be, then my colorist, my visual-effects producer and whoever else is down range already sees where I am going. Let’s defer very expensive decisions until later on? This isn’t that kind of job.”

Fairburn has touched on an unavoidable problem in postproduction: the differences in human perception that were discussed in Part One of this article. The more people involved in making judgment calls, the more your image could drift away from its intended look. The raw format, and digital in general, does offer more manipulation possibilities in post, but creating a look from the ground up takes time, something that the postproduction schedule is usually lacking. And more hands are involved in the post process.

No matter what manipulation an image goes through, the file inevitably must undergo a color-space conversion, sometimes several, during the workflow. Upon scanning, the colors on the negative typically are encoded in some form of RGB density. The look may be adjusted either in a look management system or a color corrector, sometimes in density (log) space or sometimes in video space.
The colors may be converted to scene space for visual-effects work. Another color conversion may happen when preparing the final version for output to film or digital cinema. SMPTE DC28 is settling on conversion to CIE XYZ color space for the digital cinema distribution master (DCDM) because it can encode a much wider color gamut than those of monitors, projectors and print film, allowing room for better display technologies in the future (see diagram).

“Every transfer from one color space to another color space, even if it’s the same color space, runs risks of sampling errors,” says Stump. “By re-sampling to any other color space, especially a smaller space, you can concatenate (link together) errors into your data that then cannot be reversed. They cannot be corrected by expanding that data back into a bigger color space. Some people will argue that you can correct the errors using large mathematical formulas, but I don’t think that is error correction. I think that is error masking. That’s a lot of work to correct an error that didn’t have to be created in the first place. On the Cinematographers Mailing List, I read something that was well said: ‘You should aspire to the highest-quality acquisition that you can afford.’ There are numerous hidden pitfalls in post.”

Displays, whether monitors or digital projectors, are the driving force behind color-space transformation and LUT creation. They function in device-dependent RGB color space. In digital projection, Texas Instruments’ DLP (Digital Light Processing) and JVC’s D-ILA (Digital Direct Drive Image Light Amplifier) are the two technologies currently in use. DLP, used in Barco and Christie projectors, for example, has an optical semiconductor known as the Digital Micromirror Device (DMD) that contains an array of up to 2.2 million hinge-mounted microscopic mirrors. These mirrors either tilt toward the light source (on) or away from it (off).
For the 3-DMD chip system, the cinema projector splits the white light into RGB and has three separate channels – red, green and blue. Each chip is driven with 16 bits of linear luminance precision, resulting in 2^48 = 281 trillion unique color possibilities. Resolution is 2048x1080. D-ILA, a variant of LCOS (Liquid Crystal on Silicon), utilizes a 1.3" CMOS chip that has a light-modulating liquid crystal layer. The chip can resolve up to 2048x1536 pixels – 2K resolution. DLP has the most installations in theaters and post facilities. JVC was the first to introduce a 4K (3860x2048) D-ILA projector. Sony recently has begun showing their 4K 4096x2160 digital projector that uses Silicon X-tal Reflective Display (SXRD) technology. Contrast is stated as “high.”

I’m probably not going out on a limb by saying that image quality on earlier 1K projectors was less than ideal – low contrast, obvious low resolution, a visible screen-door effect from the fixed matrix, and annoying background crawl on panning shots. The consensus on the 2K DLP projectors of today is that sequential contrast, roughly 1,800:1 (D-ILA has less) in a typical viewing environment, is approaching the appearance of a Kodak Vision release print. Vision print stock actually has a density contrast ratio of 8,000:1, or 13 stops, but the projection booth’s port glass, ambient light and light scatter caused by reflection reduce it to a little over 2,000:1. The more expensive Vision Premier print stock has a contrast ratio of about 250,000:1, or 18 stops (2 to the power of 18). (Humans can distinguish 30 stops.)

The chosen light source for digital projection is xenon, which has a slightly green-biased white point, shown as the “tolerance box” in the diagram. “Xenon produces a pretty broad spectrum white,” explains Cowan, “but included in that white is infrared and ultraviolet. One of the things you have to do to avoid damage to optical elements within the system is filter out the IR and UV.
Along with the IR and UV, you end up filtering a little of the red and the blue but leaving all the green. The green component accounts for about 70 percent of the brightness.”
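
For readers who want to check the arithmetic behind the projector and print-stock figures quoted earlier, here is a minimal Python sketch. It assumes that one stop is a doubling of light, so that a contrast ratio converts to stops as a base-2 logarithm, and that the color count for a three-chip system is simply 2 raised to the total number of bits. The function names `contrast_to_stops` and `color_count` are made up for illustration and do not come from any manufacturer's documentation.

```python
import math


def contrast_to_stops(ratio: float) -> float:
    """Convert a contrast ratio (e.g. 8000 for 8,000:1) to stops.

    Assumes one stop equals a factor of two in light, so stops = log2(ratio).
    """
    if ratio <= 0:
        raise ValueError("contrast ratio must be positive")
    return math.log2(ratio)


def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct code values for the given bit depth per channel."""
    return 2 ** (bits_per_channel * channels)


if __name__ == "__main__":
    # Three DMD chips at 16 bits each, as described for the DLP cinema projector.
    print(f"3 x 16-bit channels: {color_count(16):,} colors")

    # Contrast ratios quoted in the article.
    for label, ratio in [
        ("2K DLP, sequential", 1_800),
        ("Vision print, in theater", 2_000),
        ("Vision print, density", 8_000),
        ("Vision Premier print", 250_000),
    ]:
        print(f"{label}: {ratio:,}:1 is about {contrast_to_stops(ratio):.2f} stops")
```

Rounded to whole stops, 8,000:1 comes out at about 13 stops and 250,000:1 at about 18, which agrees with the figures in the text, while the in-theater numbers of roughly 1,800:1 to 2,000:1 land at about 11 stops.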
