Smart Lenses

It’s not the future of technology anymore. It’s here now, and it can help your production in many ways!

What is a “smart lens,” and how can it improve your life?

A smart lens might not be able to talk to your Google Assistant or your Amazon Alexa and place your favorite coffee order for you (which is a shame), but it can talk to your Arri Alexa (or Red or Sony) and relay a wealth of critical information about the lens to the camera or external recording device.

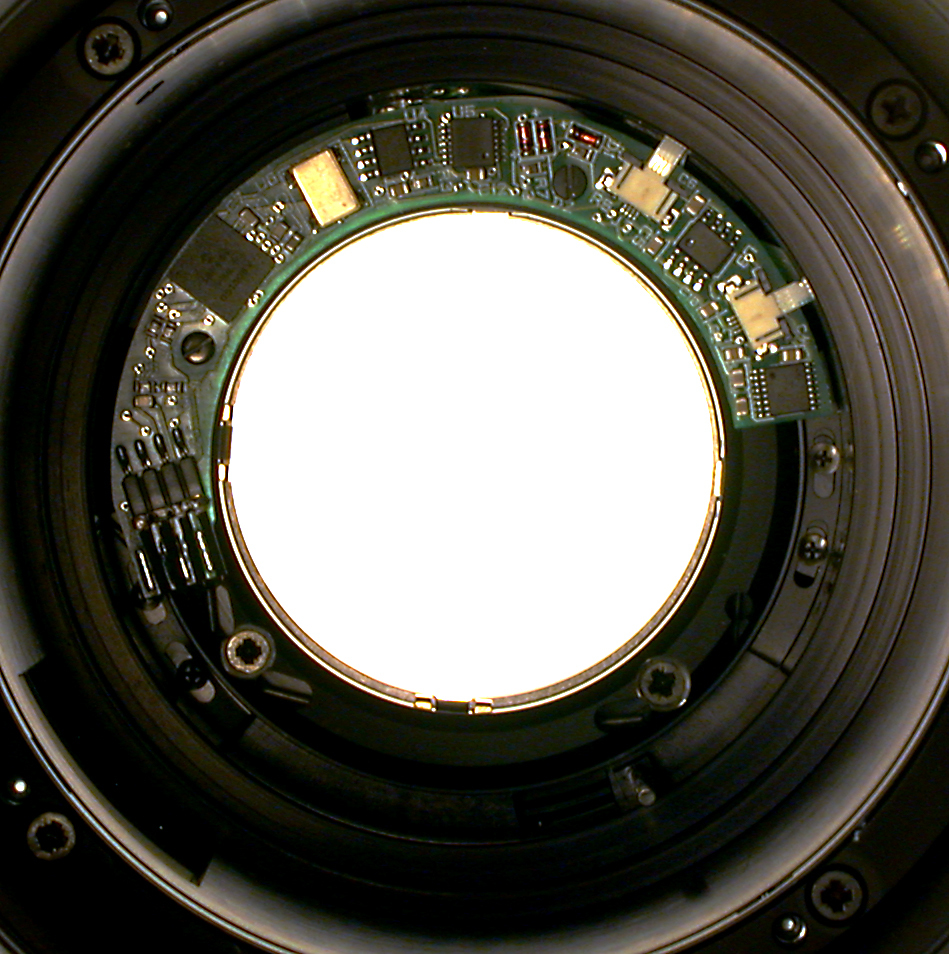

Smart lenses incorporate computer chips and encoders to report metadata about the lens’ status, which includes the make and model of the lens, the serial number, the focal length (which is dynamic if it’s a zoom lens), the aperture, the focus setting, and more. All of this information is recorded at least once per frame.

Why is this metadata important? Generally, it’s crucial for visual-effects artists who are altering or adding to the images recorded by the camera during production.

In the old-fashioned methodology, the camera team notes every lens, focal distance and aperture in a log on a shot-by-shot basis. The unit script supervisor generally records these details as well. These logs are passed along (ideally) to editorial and then (again, ideally) to the visual-effects teams, who are often scattered across the globe. However, in the heat of production, the camera assistant might have been distracted and neglected to record the information, or perhaps the script supervisor missed a lens change that happened at the last minute. In those cases, the records are inaccurate, confusing and contradictory, and it’s up to the visual-effects team to attempt to analyze the image and try to determine the correct focal length, focal distance and aperture in order to integrate their elements into the image. When they guess wrong, the image never looks right.

Enter metadata. The smart lens reports its data to the camera (or external recording device) dynamically, frame by frame: exact focal distance, fractional aperture, real focal length (which often differs from the rounded focal length that is printed on the barrel), and even the serial number, so a specific lens can be identified if any problems arise. When the system is working properly, this data is passed to the camera and then carried (married to the footage in a perfect scenario, or available in a “sidecar” file) into postproduction, where it is of incredible value to the visual-effects artists.
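For readers who think in code, here is a minimal sketch of what a per-frame lens record and a sidecar file might look like. Everything in it is an assumption made for illustration: the field names, the JSON layout, and the `LensFrameRecord`, `to_sidecar` and `lookup` names are hypothetical, and none of it represents the actual Cooke /i or Arri LDS data format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class LensFrameRecord:
    """One hypothetical lens-metadata sample, tied to a single frame."""
    frame: int               # frame number within the clip
    serial_number: str       # identifies the physical lens
    focal_length_mm: float   # real focal length, not the rounded barrel marking
    t_stop: float            # fractional aperture
    focus_distance_m: float  # exact focus distance


def to_sidecar(records):
    """Serialize per-frame records to a JSON string, one entry per frame."""
    return json.dumps([asdict(r) for r in records], indent=2)


def lookup(sidecar_text, frame):
    """Return the metadata entry for a frame, or None if it was not recorded."""
    for entry in json.loads(sidecar_text):
        if entry["frame"] == frame:
            return entry
    return None


if __name__ == "__main__":
    # Illustrative values only: a focus pull across two frames.
    clip = [
        LensFrameRecord(1001, "SN-0001", 49.2, 2.8, 3.05),
        LensFrameRecord(1002, "SN-0001", 49.2, 2.8, 3.10),
    ]
    print(lookup(to_sidecar(clip), 1002))
```

The point of the sketch is the shape of the data: because every frame carries its own record, a visual-effects artist can look up the lens state for any frame instead of relying on a handwritten log.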

Additionally, the metadata can be fed into camera accessories during production to remotely report the lens’ exact focus, aperture and zoom to handheld focus devices — eliminating the need to calibrate each lens and focus ring, and providing the most accurate readout of focus setting and depth-of-field approximations for a particular lens, aperture and focal-distance setting.
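To give a sense of what a depth-of-field approximation involves, the sketch below uses the standard thin-lens hyperfocal formulas. It is not the calculation any particular focus device performs. The `depth_of_field` name, the 0.025mm circle of confusion, and the use of an f-number rather than a T-stop are assumptions made for illustration.

```python
import math


def depth_of_field(focal_mm, f_number, focus_mm, coc_mm=0.025):
    """Approximate near and far limits of acceptable focus, in millimeters.

    Uses the common thin-lens approximation. coc_mm is the assumed
    circle of confusion; the right value depends on format and display.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


if __name__ == "__main__":
    # A nominal 50mm lens at f/2.8, focused at 3 meters.
    near, far = depth_of_field(50.0, 2.8, 3000.0)
    print(f"near {near / 1000:.2f} m, far {far / 1000:.2f} m")
```

Because focal length enters the formula squared, feeding in the real focal length reported by the lens, rather than the rounded number on the barrel, changes the result, which is one reason live metadata gives a more accurate readout.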

Lens metadata was introduced when entrepreneur Les Zellan bought the ailing Cooke Optics and decided to continue the legacy line into the new millennium. He envisioned a world in which metadata would be helpful, and began to implement the concept into Cooke lenses, starting with the S4/i series in 2005. (The /i stands for “intelligent” and is the Cooke trademark for this technology.)

Simultaneous to this development, Arri was working on its own lens-metadata system: the Lens Data System (LDS), introduced around 2001 in the Arri/Zeiss Ultra Primes. The two systems offer nearly identical data but in slightly different ways. Unlike Arri, Cooke only manufactures lenses, so it can only provide a lens that sends its information out; whether a camera actually listens to that data and records it, and whether the system protects that information as it moves through the various stages of post, are a whole ’nother can of beans. Because Arri manufactures lenses, cameras and accessories, it can fully integrate lens metadata into camera systems and accessories.

Both companies offer their technologies to other camera and lens manufacturers. Arri requires a hefty license fee for the integration of the LDS system, whereas Cooke charges a mere £1 per annual license. As so much of this business is driven by economy, it should be no surprise that the less-expensive Cooke system is more widely adopted. Cooke /i technology is incorporated into Angénieux, Fujifilm, IB/E, Leitz, Panavision, Servicevision, Sigma and Zeiss lenses — as well as into Sony, Panasonic and Red cameras. Arri cameras also read and support Cooke /i metadata, while only Arri lenses incorporate LDS. (This includes Arri/Zeiss and Arri/Fujinon lenses.) Because Arri also manufactures accessories for its LDS system, you can use a standard (non-smart) lens with Arri LDS-encoded motors and capture metadata from a vintage lens.

With the introduction of the Zeiss CP.3 lens series, Zeiss took Cooke /i metadata a step further. Within the /i protocol, there are additional “user-identified” columns of data that Cooke has left open. The engineers at Zeiss decided to utilize these open columns to provide geometric distortion and vignetting (shading) information for each lens on a dynamic, frame-by-frame basis.

This is an extraordinary leap forward in smart-lens technology, given that in preproduction — and even during production — the visual-effects team is tasked with “mapping” each lens used by the production by photographing checkerboard charts to record the lens’ unique distortions and aberrations. These are key to being able to integrate elements into the scene later. Every lens must be mapped, typically at multiple focus distances — and if it’s a zoom, at multiple focal lengths and multiple focus distances. It’s an extremely time-consuming process. With distortion and vignetting information already built into the lens, there is no need to map every lens — they’re already mapped!
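What does a lens “map” boil down to? One common way to describe geometric distortion is a radial polynomial model, in which points are pushed toward or away from the image center by an amount that grows with distance from it. The sketch below assumes that generic model with two coefficients, `k1` and `k2`. The function names and coefficient values are hypothetical, and this is not a description of how Zeiss or Cooke encode their distortion data.

```python
def distort(x, y, k1, k2=0.0):
    """Apply radial distortion to a point in normalized image coordinates.

    (0, 0) is the image center. Negative k1 pulls points inward (barrel),
    positive k1 pushes them outward (pincushion).
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2=0.0, iterations=20):
    """Invert distort() by fixed-point iteration (suitable for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    # Illustrative coefficient for a lens with mild barrel distortion.
    xd, yd = distort(0.5, 0.25, k1=-0.1)
    print(undistort(xd, yd, k1=-0.1))
```

Shooting checkerboard charts is, in effect, a way of measuring coefficients like these by hand for every lens, focus distance and focal length. A lens that reports its own distortion data per frame supplies that information directly.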

Zeiss integrated this technology into the CP.3 XD series of primes, as well as the Supreme Primes.

Simultaneous to Zeiss’ efforts, Cooke was developing the /i3 update to the technology, which also includes distortion and vignetting information as standard features. This is built into newer models of the Cooke S7/i lenses.

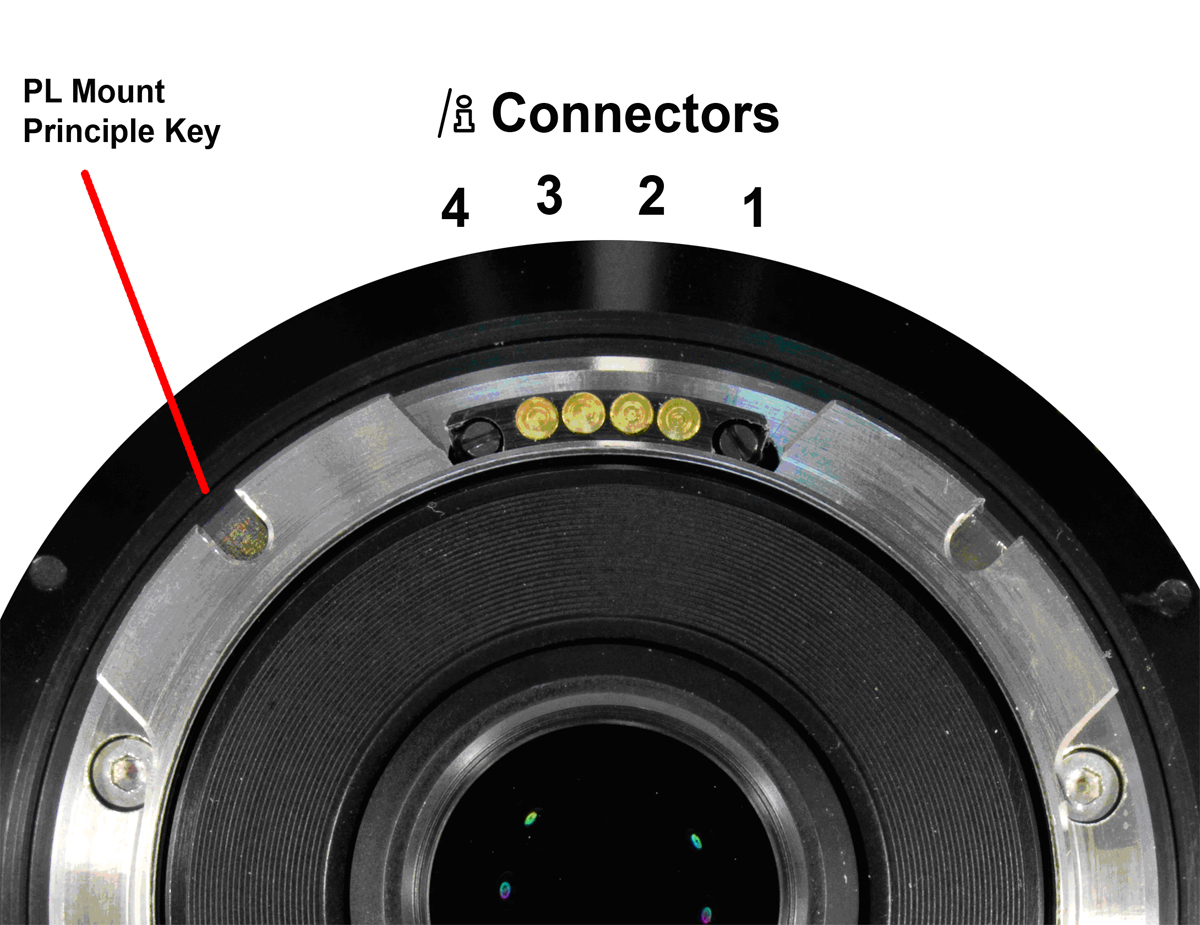

The Arri LDS system is all internal — no external recorders necessary. Cooke /i offers an external port on its lenses for situations where the camera does not see or record the data. In both cases, the lenses are powered and communicate primarily through four pins on the PL lens mount. The Cooke /i pins are at the top of the mount at the 12:00 position, and the Arri LDS comprises four pins at the 3:00 position. Lenses that integrate both communication systems will have eight pins, four in each location.

Although lens metadata has been available for nearly two decades, its adoption in the production and postproduction pipeline has been slow, mostly because user demand hasn’t been high. That is changing, however. One step toward that change is increasing awareness of the existence of lens metadata and having camera assistants, cinematographers and visual-effects artists demand that their cameras and post software effectively capture and transport metadata through the entire workflow. Three committees of the ASC’s Motion Imaging Technology Council (MITC) — the Lens Committee, the Motion Imaging Workflow Committee’s Advanced Data Management Subcommittee, and the Metadata Committee — are all working hard to inform and motivate the business to make metadata a streamlined part of everyday production. It’s not the future of technology anymore. It’s here now, and it can help your production in many ways!

Jay Holben is an ASC associate member and AC’s technical editor.
