Long-Range Lighting for Oppenheimer

The film’s lighting-console programmer details his system, noteworthy for its accessibility as well as its capabilities.

The long-range wireless lighting system Hoyte van Hoytema, ASC, FSF, NSC and lighting-console programmer Noah B. Shain first deployed on Jordan Peele’s Nope and then refined on Christopher Nolan’s Oppenheimer (AC Oct. ’23) is noteworthy for its accessibility as well as its capabilities.

Shain, who designed the system, recalls that van Hoytema’s request was for a robust, flexible solution that had no bespoke components.

AC asked Shain to describe the system and its application on Nolan’s film. What follows are his words.

AirMax System Architecture

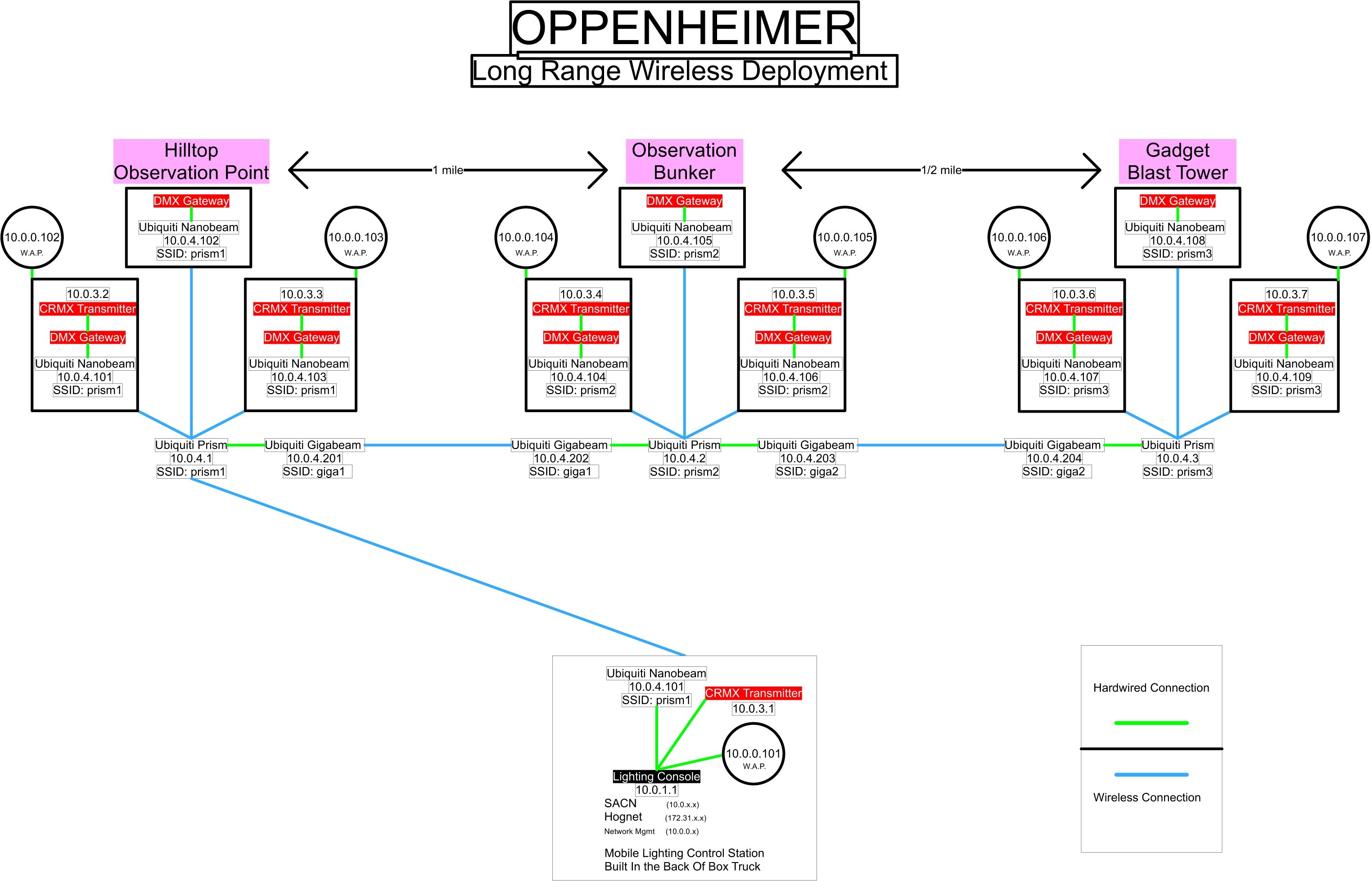

Our main wireless lighting system for Oppenheimer is based on Ubiquiti’s AirMax outdoor radio-frequency protocol, which I used to set up a point-to-multipoint communications network. Think of it as a bicycle wheel, with a hub and spokes.

A good case study is the Trinity test site in New Mexico, which comprised three exterior locations: the Observation Bunker, the Hilltop Observation Point and the Gadget Blast Tower. Each of these set locations had its own Ubiquiti AirMax Rocket Prism 5AC hub.

The Prism serves as the point-to-multipoint host; it has ports for coaxial cables, so you can attach various types of antennae. The Prisms receive lighting data from my lighting console and send it along wireless spokes to “satellites” containing NanoBeam WiFi bridges. Though the NanoBeams can be configured as hosts, we use them solely as clients, hard-wired to a DMX gateway and a short-range CRMX transmitter, which are needed to talk to the lights.

The three Prisms are wirelessly connected to each other by GigaBeam radio antennae. The GigaBeams act as point-to-point connectors, essentially serving as an invisible, long-range cable. They are indispensable for the whole system to function seamlessly.
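To make the hub-and-spoke idea concrete, here is a minimal Python sketch that models the Trinity-site network as a graph and finds the hop path from the console to a satellite on another set. It assumes the GigaBeam backhaul runs as a chain from Prism 1 to Prism 2 to Prism 3 (the text says only that the hubs are linked to each other), and the satellite names and the Prism-to-location assignments are hypothetical.

```python
from collections import deque

# Hypothetical model of the Trinity-site network. Assumption: the GigaBeam
# backhaul is a chain (Prism 1 <-> Prism 2 <-> Prism 3), not a full mesh.
LINKS = {
    ("Prism 1", "Prism 2"): "GigaBeam",             # point-to-point backhaul
    ("Prism 2", "Prism 3"): "GigaBeam",
    ("Prism 1", "Console"): "AirMax",               # point-to-multipoint spokes
    ("Prism 1", "Bunker satellite A"): "AirMax",    # hypothetical satellite names
    ("Prism 2", "Hilltop satellite A"): "AirMax",
    ("Prism 3", "Tower satellite A"): "AirMax",
    ("Prism 3", "Tower satellite B"): "AirMax",
}

def build_graph(links):
    """Turn the link table into an undirected adjacency list."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

def route(graph, src, dst):
    """Breadth-first search for the fewest-hop path from src to dst (None if unreachable)."""
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(graph[node]):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None

graph = build_graph(LINKS)
print(route(graph, "Console", "Tower satellite B"))
# ['Console', 'Prism 1', 'Prism 2', 'Prism 3', 'Tower satellite B']
```

If the GigaBeams instead formed a full triangle, adding a `("Prism 1", "Prism 3")` link would shorten that route by one hop.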

Satellites

If we have both prep time and rigging time, we’ll deploy our satellites in compact, four-rack road cases in areas where there’s already an accessible power source. On a single-room set on a soundstage, for example, I’d position one at each of the room’s four corners, just outside the set. I designed the cases with extendable rods for mounting the NanoBeams, which gives us flexibility in placing them on location.

Hand-truck satellites come into play if, on the day of shooting, we need to quickly get data to an area where it’s not yet available. The hand trucks carry the same equipment as the road cases — a NanoBeam, a DMX gateway and a CRMX transmitter — but also include a battery for added mobility.

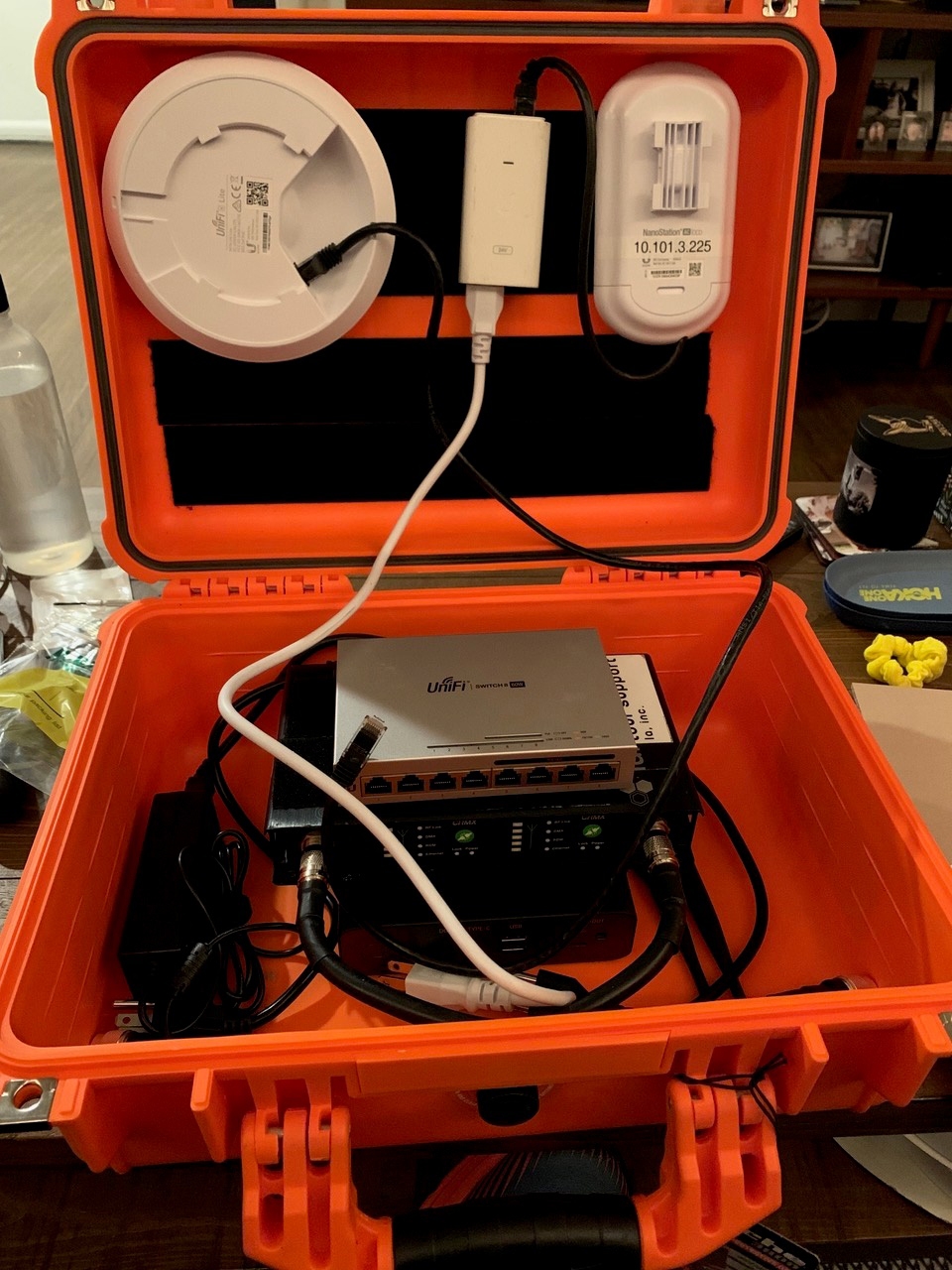

For some of the first shooting days on Oppenheimer, when we had neither a rigging crew nor any prep time for the art gallery and classroom sets at UCLA, lighting tech Ramin Shakibaei would discreetly place Pelican cases containing a NanoStation Loco, a small battery and a network-capable CRMX transmitter in the room, immediately giving us control of any light there. The Pelican cases don’t contain a separate DMX gateway, because many CRMX transmitters have a gateway built in. Some transmitters still require a five-pin DMX cable, whereas others can be fed a network cable directly.

Having the gateway is useful because not all lights are wireless; sometimes you need to run DMX cable, especially in complex rigs. We can broadcast wirelessly to a ground-based receiver and then feed a DMX cable into the rig. So the wireless network isn’t a replacement for CRMX but a way to amplify its capabilities, using IP networking to carry close to 64,000 DMX universes on a single network cable (the sACN standard numbers universes from 1 to 63,999). At most, we’ve transmitted 17 universes of patched light, although we had 24 mapped out on the console. A standard DMX cable carries a single universe of 512 channels, so it could control only a fraction of that.
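The universe figures above reduce to simple arithmetic: a DMX512 universe holds 512 channels, and sACN (ANSI E1.31) assigns each universe a multicast group of the form 239.255.<high byte>.<low byte>. The sketch below, with hypothetical helper names, works through the 17- and 24-universe numbers; it makes no claim about whether this production sent sACN by multicast or unicast.

```python
import ipaddress

CHANNELS_PER_UNIVERSE = 512   # one DMX512 universe
SACN_MAX_UNIVERSE = 63999     # E1.31 universe numbers run from 1 to 63999

def channel_count(universes: int) -> int:
    """Total DMX channels carried by a number of universes."""
    return universes * CHANNELS_PER_UNIVERSE

def sacn_multicast_group(universe: int) -> ipaddress.IPv4Address:
    """E1.31 multicast address for a universe: 239.255.<high byte>.<low byte>."""
    if not 1 <= universe <= SACN_MAX_UNIVERSE:
        raise ValueError(f"universe {universe} outside 1..{SACN_MAX_UNIVERSE}")
    return ipaddress.IPv4Address(f"239.255.{universe >> 8}.{universe & 0xFF}")

def split_address(absolute: int) -> tuple[int, int]:
    """Map a 1-based absolute channel number to (universe, channel within that universe)."""
    universe, offset = divmod(absolute - 1, CHANNELS_PER_UNIVERSE)
    return universe + 1, offset + 1

print(channel_count(17))         # 8704 channels patched
print(channel_count(24))         # 12288 channels mapped on the console
print(sacn_multicast_group(17))  # 239.255.0.17
print(split_address(8704))       # (17, 512)
```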

The Console

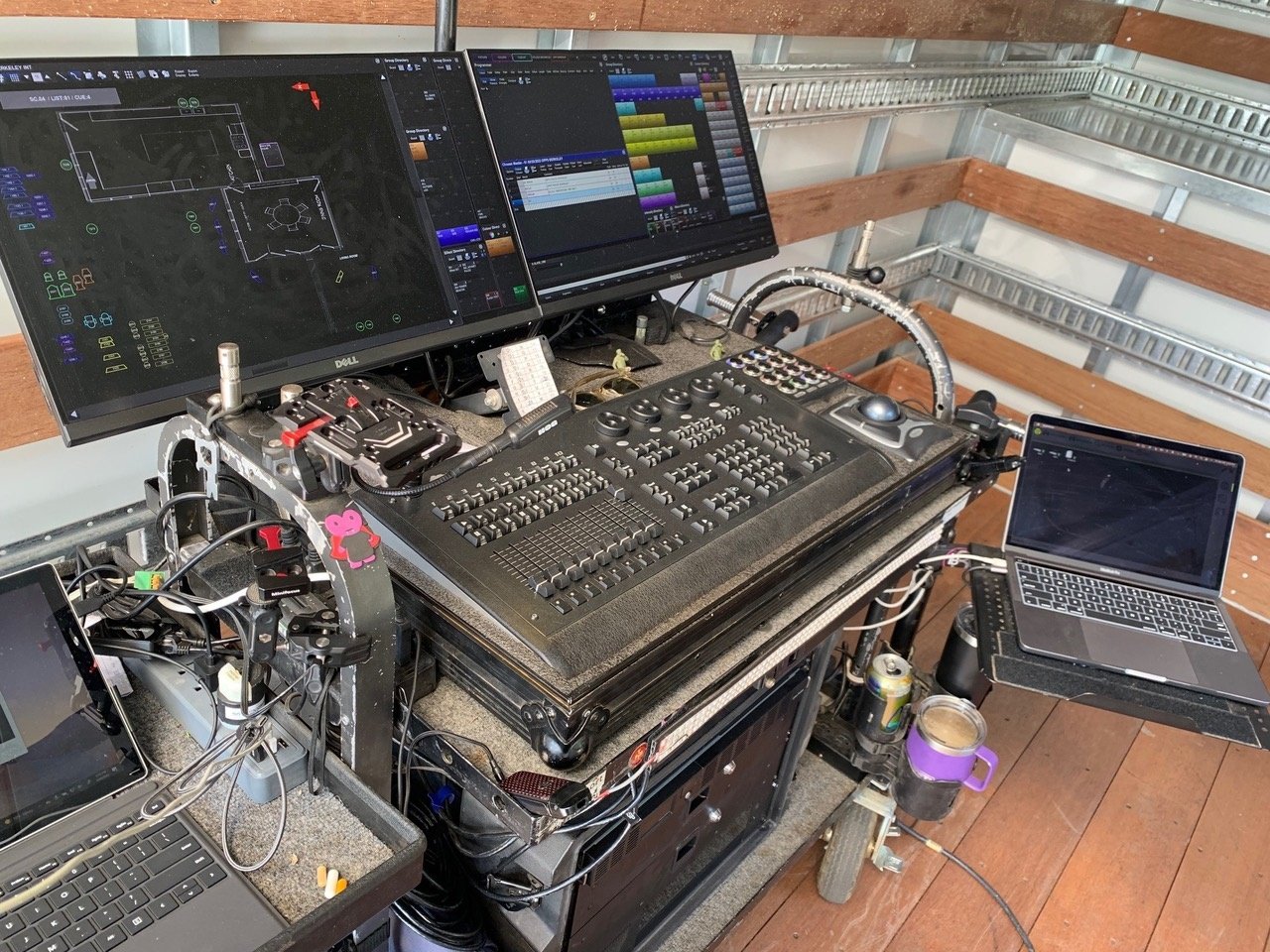

My lighting console is another NanoBeam-equipped satellite. I work off an ETC Hog 4 HPU — a specialized Linux-based PC connected to two 24" Dell touchscreens and housed in a two-slot rack-mount box.

Instead of a bulky control surface, I use a 10-channel Hoglet 4 and an array of customized Midi Fighters, which enable me to assign macros — multi-step commands condensed into a single arcade-style button. This provides an even smaller, battery-powered, wirelessly connected, secondary master-control point.

The console has two network adapters; the second gives it an IP address on our wireless access points, allowing me to step away from the console and keep channel control through OSC (Open Sound Control, an open protocol for communication between devices) on an iPhone or iPad. We use VNC (Virtual Network Computing) on an iPad where OSC falls short, such as updating maps of light placements or otherwise accessing the console’s software directly. We don’t use it often, but when I need to identify a specific light in a complex set, VNC is invaluable.
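OSC messages are small binary packets, usually sent over UDP. As a sketch of what a phone or tablet app puts on the wire, the following builds an OSC 1.0 message by hand: a NUL-padded address pattern, a type-tag string beginning with a comma, then big-endian arguments. The address pattern `/channel/101/level`, the host and the port are hypothetical; the OSC namespace a Hog 4 actually responds to is configured on the console and isn’t described here.

```python
import socket
import struct

def osc_string(text: str) -> bytes:
    """OSC-string: ASCII bytes plus a NUL terminator, NUL-padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)

def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') or string ('s') arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; keep it out of this sketch
            raise TypeError("bool arguments are not supported here")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload

def send_osc(host: str, port: int, packet: bytes) -> None:
    """Send one OSC packet as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))

# Hypothetical address pattern: set channel 101 to 75 percent.
message = osc_message("/channel/101/level", 75.0)
# send_osc("192.168.1.50", 7000, message)  # hypothetical console IP and OSC port
```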

Networking

Each satellite also contains a smart-managed Ethernet switch, which is an essential part of the networking setup. It has two primary purposes: to scale up the network and to segregate it.

In an unmanaged Ethernet switch, every port belongs to the same network. With a managed switch, I can decide which network each port carries, typically by assigning ports to separate VLANs. At the lighting console, I’m running sACN (Streaming Architecture for Control Networks), HogNet and a management network. sACN carries the lighting data; HogNet is the console’s own networking protocol; and the management network lets me access the configuration settings of my network devices, for example if I need to change the SSID of my NanoBeam to log onto a different Prism.
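One way to picture this segregation is as an address and VLAN plan that can be checked before the show. The Python sketch below is hypothetical throughout: the VLAN IDs, subnets and port assignments are invented for illustration, not taken from the production. It flags duplicate VLAN IDs, overlapping subnets and ports that reference undefined networks, and it can look up which network a device address belongs to.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Network:
    name: str
    vlan_id: int
    subnet: ipaddress.IPv4Network

# Hypothetical plan: VLAN IDs and subnets are illustrative only.
NETWORKS = {
    "sacn": Network("sACN lighting data", 10, ipaddress.ip_network("10.10.0.0/16")),
    "hognet": Network("HogNet console traffic", 20, ipaddress.ip_network("10.20.0.0/24")),
    "mgmt": Network("Device management", 30, ipaddress.ip_network("192.168.1.0/24")),
}

# Hypothetical port assignments on one satellite's managed switch.
PORT_PLAN = {
    1: ["sacn", "hognet", "mgmt"],  # uplink to the NanoBeam, carrying all three VLANs
    2: ["sacn"],                    # DMX gateway
    3: ["sacn"],                    # network-capable CRMX transmitter
    4: ["mgmt"],                    # laptop for configuring the radios
}

def check_plan(networks, port_plan):
    """List problems: shared VLAN IDs, overlapping subnets, ports naming unknown networks."""
    problems = []
    nets = list(networks.values())
    for i, a in enumerate(nets):
        for b in nets[i + 1:]:
            if a.vlan_id == b.vlan_id:
                problems.append(f"{a.name} and {b.name} share VLAN {a.vlan_id}")
            if a.subnet.overlaps(b.subnet):
                problems.append(f"{a.name} and {b.name} have overlapping subnets")
    for port, keys in port_plan.items():
        for key in keys:
            if key not in networks:
                problems.append(f"port {port} references unknown network {key!r}")
    return problems

def network_for(ip, networks=NETWORKS):
    """Return the key of the network whose subnet contains ip, or None."""
    addr = ipaddress.ip_address(ip)
    for key, net in networks.items():
        if addr in net.subnet:
            return key
    return None

print(check_plan(NETWORKS, PORT_PLAN))  # []
print(network_for("192.168.1.20"))      # mgmt
```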

Each of the three Prisms has its own SSID: Prism 1, Prism 2 and Prism 3. By changing the SSID on my lighting console’s NanoBeam to match that of any Prism, I can switch my connection to that hub, which then utilizes its GigaBeam to communicate wirelessly to a GigaBeam on the next set, even when it’s not visible to me.

This offers great flexibility, as I don’t have to worry about line-of-sight issues, even over a vast area, which was roughly 3 square miles in the case of Oppenheimer. I can arrive at a new location, modify the SSID — often even while in transit — and maintain those crucial long-range connections between sets.

Challenges

I put the first system together in my own garage, but during show preps, we set up at the rental houses where the equipment is stored. For Oppenheimer, it took us two days just to get everything to work as one cohesive system.

The real challenge of this system lies in having everything preconfigured for specific use cases. The most difficult part is knowing all the IP addresses and SSIDs by heart, so that you can troubleshoot and adapt quickly on the day. Time is of the essence when you’re a console programmer, because the gaffer and the director of photography have high demands. They don’t want to hear that I’m still configuring wireless settings, so hands-on experience with this technology is what makes it truly functional.

The role of rental houses is critical here: They need to keep their equipment up to date in terms of firmware and operating systems. This industry is rapidly evolving, especially in the realm of smart lighting and networking. Every day we see new lights, protocols and firmware updates.

The Future

Looking ahead, the industry is gravitating toward making all devices network-compatible, essentially phasing out the need for DMX cabling. This transition is already underway: a lot of new lighting equipment has built-in network connectivity and can receive sACN directly over an IP network, so the lighting console can interface with the lights using their IP addresses.
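For a sense of what a console sends when it addresses a network-capable light directly, the sketch below assembles a single sACN data packet following the ANSI E1.31 layout (root, framing and DMP layers) and sends it by unicast UDP to port 5568. The function names, the light’s IP address and the universe number are hypothetical, and a real console also handles sequencing, timing, priority arbitration and discovery, which this omits.

```python
import socket
import struct
import uuid

SACN_PORT = 5568  # UDP port registered for ACN/sACN

def e131_data_packet(universe: int, levels: bytes, sequence: int, cid: bytes,
                     source_name: str = "sketch", priority: int = 100) -> bytes:
    """Build one E1.31 (sACN) data packet carrying up to 512 DMX channel levels."""
    if not 1 <= universe <= 63999:
        raise ValueError("universe must be in 1..63999")
    if len(levels) > 512 or len(cid) != 16:
        raise ValueError("need at most 512 levels and a 16-byte CID")
    slots = b"\x00" + bytes(levels)  # DMX start code 0, then the channel levels

    # DMP layer: vector, address type, first address, increment, value count, values
    dmp = struct.pack(">BBHHH", 0x02, 0xA1, 0x0000, 0x0001, len(slots)) + slots
    dmp = struct.pack(">H", 0x7000 | (len(dmp) + 2)) + dmp  # prepend flags and length

    # Framing layer: vector, 64-byte source name, priority, sync address, sequence, options, universe
    name = source_name.encode("ascii")[:63].ljust(64, b"\x00")
    framing = (struct.pack(">I", 0x00000002) + name
               + struct.pack(">BHBBH", priority, 0, sequence & 0xFF, 0, universe) + dmp)
    framing = struct.pack(">H", 0x7000 | (len(framing) + 2)) + framing

    # Root layer: vector and the sender's 16-byte component identifier (CID)
    root = struct.pack(">I", 0x00000004) + cid + framing
    root = struct.pack(">H", 0x7000 | (len(root) + 2)) + root

    # Preamble size, post-amble size and the fixed ACN packet identifier
    return struct.pack(">HH", 0x0010, 0x0000) + b"ASC-E1.17\x00\x00\x00" + root

def send_unicast(light_ip: str, packet: bytes) -> None:
    """Send the packet straight to one fixture's IP address."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (light_ip, SACN_PORT))

cid = uuid.uuid4().bytes  # a source should keep the same CID while it is sending
packet = e131_data_packet(universe=1, levels=bytes([255, 128, 0]), sequence=0, cid=cid)
# send_unicast("10.10.0.21", packet)  # hypothetical fixture address
```

A full universe of 512 levels produces a 638-byte packet; sending it by unicast rather than to the universe’s multicast group is what lets the console talk to a light by its own IP address.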

I’m lucky enough to work with one cinematographer most of the time, so I usually know what lights will be used and can plan accordingly. Our system has become quite robust, as we typically use the same package, but as the technology advances, being well prepared and up to date is crucial for staying on schedule and ensuring a smooth shoot.