Ashe ’68: Triumph in 360

Cinematographer Eve M. Cohen employs a cinema camera to capture 360-degree virtual reality for this unique short.

Images courtesy of Eve M. Cohen, RexPix Media and Sundance Institute.

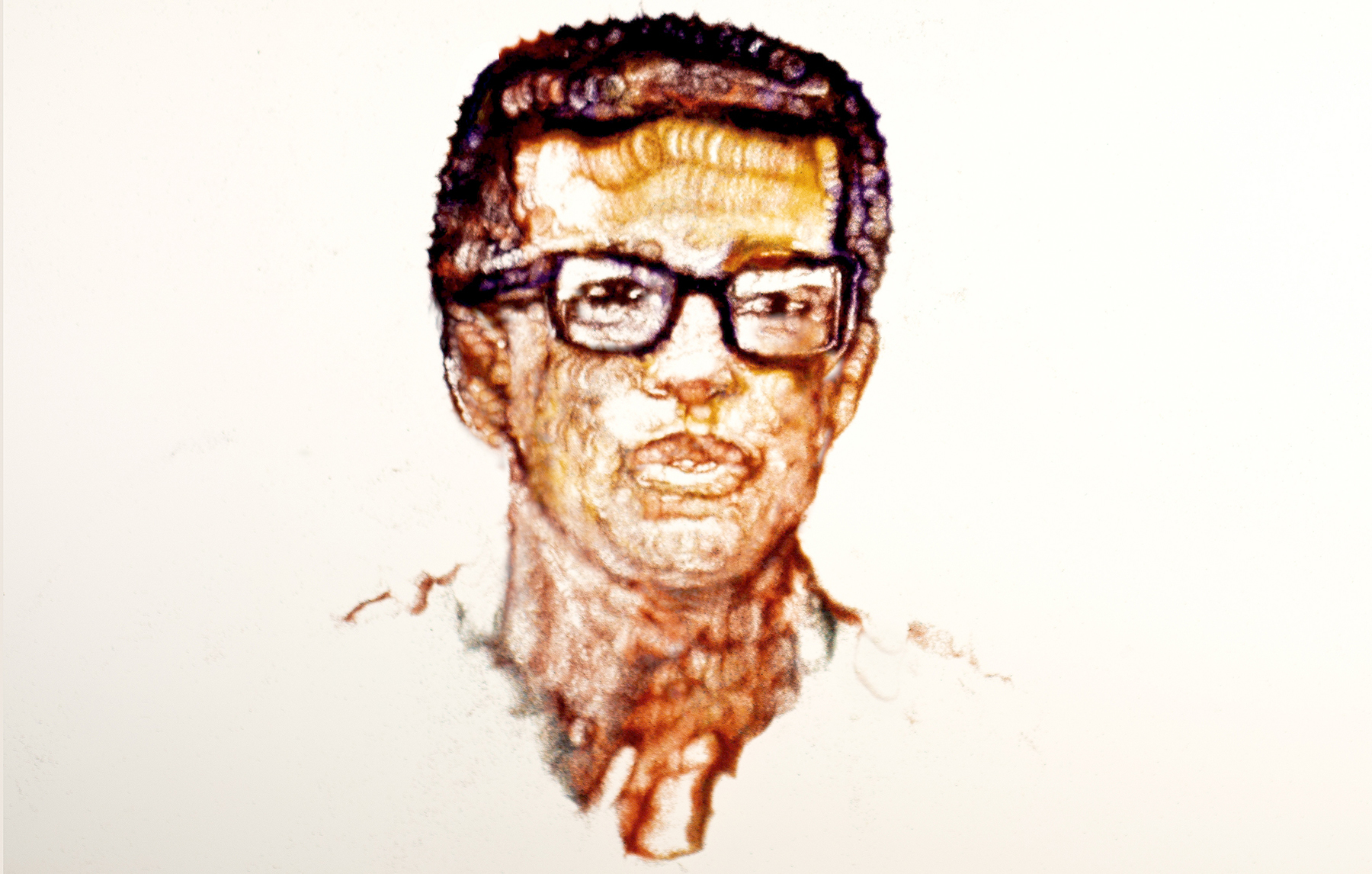

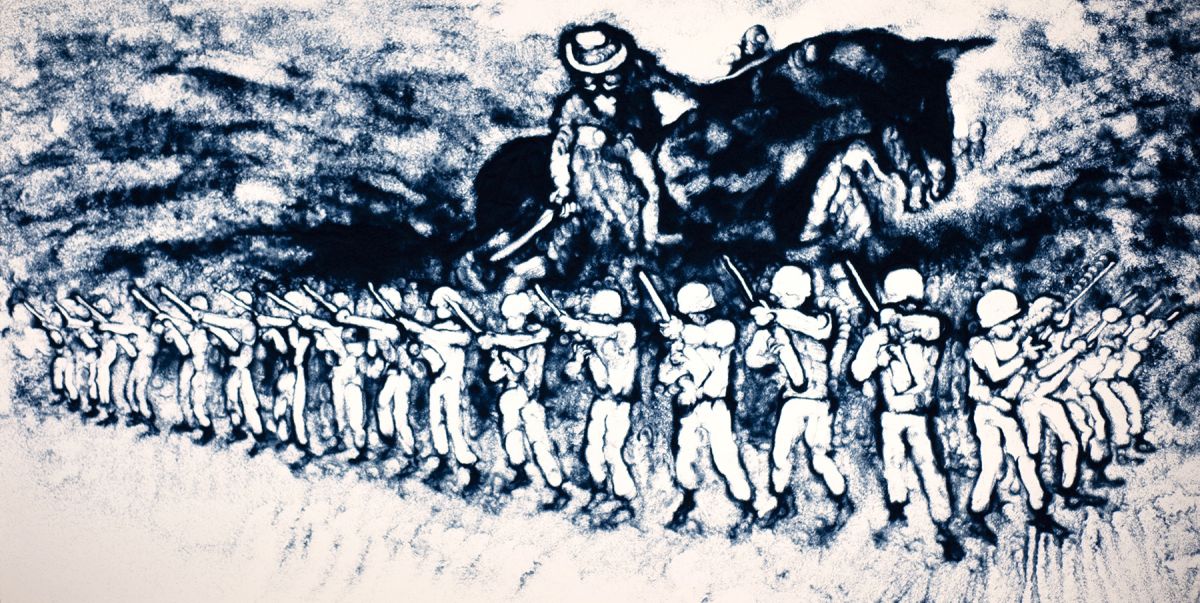

Arthur Ashe won the men’s singles title at the 1968 U.S. Open in the midst of his ongoing, inescapable confrontation with deeply institutionalized racial inequality. Ashe ’68, a 360-degree virtual-reality short, mixes scripted live action, stop-motion sand-art animation, and archival footage to transport viewers into the mindset of the trailblazing African-American tennis player who became a champion for human rights. The production screened at the 2019 Sundance Film Festival as part of the New Frontier VR Cinema lineup. Cinematographer Eve M. Cohen, who photographed the project’s live-action segments, took some time with AC to discuss her approach to capturing the immersive experience.

American Cinematographer: How did you initially become involved with virtual reality?

Eve M. Cohen: My entry into virtual reality and immersive 360-degree filming started with a film called The Visitor, which was made in conjunction with Wevr, a VR production company based in Venice [California]. I was brought onto the project by director James Kaelan, with whom I had previously collaborated on a number of non-VR projects. At the time, very few 360-capable cameras existed, and Wevr was building a proprietary system out of an array of GoPros.

We worked directly with one of the engineers who was producing the rig — discussing lens overlap, stitch lines and angles, and elements to plate out. We needed the camera to perform, but the biggest challenge, and the reason James and I were brought on, was an experiment in storytelling. How do you tell a story inside of a sphere, as opposed to inside of a rectangle? How do you break out of the frame and immerse the viewer inside of the film’s world? I had to peel back layers of what I had learned from traditional cinematography and filmmaking, and get down to basic storytelling and start all over. It was a huge learning curve.

James and I spent weeks developing the language we were going to use around 360 storytelling. Once we got a handle on it, I began reintroducing traditional cinematography elements and tools that I could use to shape the story. Filming with a unibody camera array presents a multitude of challenges — it can see everything at once and there’s nowhere to hide. And when this camera array is created from mid-2015 action-cam capture technology and lenses, these limitations add another level of difficulty.

The other side of the spectrum is the Ashe ’68 project, where I was able to have as much control as I could get from a cinematography standpoint, to deliver the image to the inside of the sphere. It’s cool to think back on my career in VR — starting with custom-built camera arrays where I had very limited control, all the way to a cinema nodal setup that not only required all my skills as a DP, but offered the ultimate creative precision for 360.

![A press scrum grills Ashe in the locker room. “There were group screenings [of Ashe ’68 at the Sundance Film Festival],” Cohen says, “with a large number of headsets in sync in a single room as part of the VR cinema series. It wasn’t shown to viewers one at a time — and I think communally is the best way to view immersive film, because it generates a conversation, the same way that traditional films are ideally viewed in a theater. That’s where the filmmakers want you to see them. In immersive filmmaking, this is the closest we have to a version of cinema.”](/imager/uploads/73578/Ashe-68-04-AA-VR-FRAME-GRAB-Locker-Room_6c0c164bd2b597ee32b68b8b5755bd2e.jpg)

Tell us about the VR production house you worked with on Ashe ’68.

The Ashe ’68 production team [comprised] Custom Reality Services out of Milwaukee. Ashe ’68 was the first project we worked on together, and I have since done two others with them, but not with the same kind of camera setup. Ashe ’68 is unique because it incorporates many different elements of storytelling inside a 360-degree environment — multimedia, with archival footage, sand-art animation and live action.

How did CRS find you?

They had seen a 360 VR project I did with Wevr called Hard World for Small Things, and reached out. I had an initial conversation with co-directors Brad Lichtenstein and Jeff Fitzsimmons. Jeff’s more the technical side of the team, and later he confessed to me, ‘I was so happy to find a DP that also understood VR — a VR DP! I didn’t know that the position even existed until we found you.’ I think early on, many production teams aren’t aware of the benefit a cinematographer can bring to 360 filmmaking.

We treated this as a traditional film shoot — we had a script, and we broke it down, figured out locations, scouted, and had our VFX team from Legend 3D in place. We knew which elements were going to be shot in a certain way to incorporate the sand-art animation, and then it was up to me to find the equipment that was going to be able to handle these creative requirements. When it comes to 360 gear and research, there isn’t much to pull from besides my previous projects — none of which had been done nodally, so I took a couple of weeks for camera and lens testing at various rental houses, [with] many combinations being first-time-use cases.

Where was Ashe ’68 shot, and who supplied the production gear?

We filmed on location at the West Side Tennis Club in Forest Hills, New York, where the actual U.S. Open was played in 1968. I worked with AbelCine in New York and Radiant Images in Los Angeles. At the time, there was a very new lens being used for 360 VR: the Entaniya HAL 250 4.3mm fisheye. I think at the time there were just three of them in the United States, and Radiant Images initially had all three.

We ended up renting one lens from Radiant, but I wanted a backup, so we got another from AbelCine — who also supplied the cameras — just in time for our shoot. Not every rental house understands the intricacies of virtual-reality camera builds, so it’s easier to go to one that can provide some of these more specific elements.

[While on a scout, the directors, the VFX team and I] unanimously agreed that we wanted to avoid the ‘pain points’ of traditional unibody [VR] cameras, and [that we would] shoot [nodally with a cinema] camera. This decision gave us the ability to record enough resolution and bit-depth for VFX to incorporate the sand-art animation into the live-action footage.

We shot the pivotal match-point tennis scene at the West Side Tennis Club on a green-grass court. We had to wrap the whole court in bluescreen so they could replace the stadium with the sand-art animation. The directors also wanted to film the scene overcranked at 120 frames per second in order to accommodate the slow-motion [that appears] in the final piece. However, I still needed to be able to film virtual reality in a monoscopic sphere without cropping in on the sensor and without limiting the field of view, at 120 frames per second in at least 4K.

Almost every [available] 4K camera cropped in on the sensor at 120 frames per second, except for one combination I was able to find, which was the Canon [EOS] C700 with a CDX-36150 Codex Digital Raw Recorder. As an added bonus, [the C700 had] internal ND filters. That [camera rig] allowed me to shoot 4K at 120 frames per second to the Codex Capture Drives without cropping in on the sensor, and have nearly 100 percent of the usable image that passed through the 250 Entaniya lens fit inside the sensor range.

[One of the reasons] we couldn’t make a cropped sensor work [is because we wanted the camera to] be as close to this match point, and as inside-the-action, as possible, [and] the actor playing Arthur — a wonderful tennis professional, Chris Eubanks — [is] 6-foot-7. We weren’t framing inside a rectangle; we needed the entire image circle of the lens, from top to bottom, to ensure that we could keep the actor inside the sphere.

Who were your main collaborators?

The VR team [included] camera operator Andrew Gisch and DIT Ben Schwartz. Gary Wilkins was gaffer and Andrew “Tank” Rivara was key grip.

How long was the production period?

We had about two weeks of testing and prep, and then filmed for two days. We went back later and shot the [DJI] M600 drone elements, which were not [captured] on the Canon C700, but on a separate unibody, multi-lens camera — the Z Cam S1 Pro. Complicated VR shots require a lot of rehearsal time, and [our prep period] allowed Gary and Tank to prelight each location before we arrived. We discussed exposure and ratio for each space, and they executed superbly. We had a lot of wonderful moving parts that all had to fall into place in a fast two days.

How do you approach lighting for VR?

For the exterior scene on the tennis court, it was just planning for time of day. We had everything ready to go, and then waited for the sun to be in a specific location so that the actor would not cast shadows over the camera and lens. The main interior scene that we filmed nodally with the C700 was inside the locker room. Because we were filming each hemisphere one at a time, I was able to light without having to hide everything. For that scene, we used two Arri SkyPanel S60s — one deep in the background and one high up behind camera — and one S30 tucked next to camera. The overhead fluorescent T12 fixtures were replaced with Q-LED Quasar [Science] tubes, and we hid an additional 4-foot Quasar overhead behind the clothing rack.

Our on-set VFX supervisor, Mike Hopkinson from Legend, was there every step of the way. There were two window elements in the locker room that we shot bracketed plates of in order to correct the exposure without losing the light from the window for the shot — but for the most part, we lit from above and behind the camera. Because we were filming 180 degrees and then rotating the camera around and filming the other 180 degrees, we could light from each side.

So you were shooting half of a 360-degree sphere at a time?

Right — the C700 was on a nodal head for the tennis scene. In the locker room, the C700 had to be rigged from overhead. Andrew and Ben measured the nodal point in the lens around which to rotate; fortunately, the image-circle overlap allowed for some flexibility in this instance. The Entaniya lens covers 250 degrees for the two static scenes, but we only needed about 180 to 200 degrees of that. [Then] we flipped the camera around on the nodal point and filmed in the opposite direction.

The traveling dolly shot in the hallway of the clubhouse, however, was not filmed nodally, but rather [captured] with the unibody Z Cam S1 Pro, rigged onto a Mantis 360 stabilized remote-controlled dolly. The S1 Pro has four fixed lenses that cover the 360 sphere, so for this scene we all had to hide. There was quite a bit of choreography to this moving shot, and intricate VFX cleanup — not only painting out the Mantis itself [and using a plate for the ceiling, both of which were performed by Legend 3D], but when Ashe vanishes into bright light as he approaches the end of the hallway.

We built in the feel of this lighting effect with a bounced Arri M40 through an 8-by-8 Light Grid; flares are distracting in 360 filming since they don’t move when the viewer moves their head, so most lighting is soft, bounced or source. The overhead CFL fixtures were replaced with 60-watt soft-white tungsten bulbs, and both the stairwells and the side room were shaped with additional M18s. We were able to capture everything we needed in a single take.

How do you protect for action crossing a stitch line?

The reason we chose the Entaniya lens was to avoid crucial action crossing any stitch lines. With this one lens we could capture the entire tennis match and control the action to be inside the safe zone of the lens circle. In a nodal setup, unlike multicam or unibody setups, the actors have to repeat the scene for each nodal camera move, or ‘slice/section’ of the sphere [that’s] filmed, so we made sure that nothing was happening in those spaces where the stitch lines would be. Everything else was choreographed onto one side or the other.

How did you match the re-creation to the original tennis match?

We had the original footage from the match point on a loop, and because our actors — Chris Eubanks and Alex Lacroix — are professional tennis players, they were able to re-create the match point move-by-move. They weren’t just playing tennis, they were acting — and they’re so good that they could hit the ball in the same spot every time.

We did a lot of takes on that scene, and there were a couple where the ball came very close to the camera, but the point was to get it as close to the camera as you could, and have Ashe return the serve and win with you right there as the viewer. It scared me a couple of times [laughs], but it all worked out. I knew the take they were going to use when we shot it — I jumped back because it was so close to the camera. But that’s the other thing you can get away with on the 250 lens — the action can happen very close to it without any focus problems or distance distortion inside the headset when viewing. And because we were filming monoscopic and not stereo, we didn’t have to worry about parallax.

Were you monitoring the fisheye output directly, or seeing it unsqueezed?

I monitored the fisheye output. We could quickly go through a post process and pull out the hemispherical version for a headset, but we’d done tests beforehand, so we knew what we were getting on the day. In the hallway shot, [because] there was no way to play back the Z Cam, we were able to rig a consumer Samsung Gear 360 to the top of the S1 Pro [and use it] as a playback VR camera, which directors Brad and Jeff, and VFX supervisor Mike, used to watch playback when needed. It wasn’t exactly the same perspective as the Z Cam, but it was close enough that they could review the actors. For the scene in the locker room, Brad was sitting almost in a locker, right underneath the camera, so he didn’t need to watch playback, and I monitored the image with Jeff.

Did Ashe ’68 have a lengthy postproduction?

It was a very long postproduction period. Legend handled all the post for the stitching and VFX, and Matthew Kemper was the lead artist. Aaron Elias was the colorist, who worked in [SGO] Mistika for the color grade and conform. The sand-art animation is a painstaking process, where every single frame was physically ‘painted’ [with] sand on a 2-foot-by-5-foot light table. And if you think about the way that it has to be done, warped, and then de-warped to fit inside of the sphere — it’s very time-consuming.

The artists had to build the entire way that they were going to photograph the sand art, and incorporate it into the live-action that we had shot. They created and photographed over 4,700 individual frames. The sand-art animators are Masha Vasilkovsky and Ruah Edelstein. They’re both faculty at CalArts. The creative concept behind the sand art was to make it feel more tactile, dreamlike, and reminiscent of memory.

Was Ashe ’68 intended for theatrical release or as a venue exhibit?

It had a private preview exhibition at the 2018 U.S. Open, 50 years after Ashe’s win. There was a VR installation space as part of a two- or three-room gallery. The plan was to go on to festivals, but hopefully also have it be something that ends up in different galleries — as a part of history and a way of telling a timely story. CRS producer Maddy Power and the directing team are working on installations in some museums and are touring to sites in partnership with various tennis organizations. It’s also ancillary to a feature documentary being created about Arthur Ashe, directed by Rex Miller and produced by Beth Hubbard.

Do you have any other immersive projects on the horizon?

I collaborated on an immersive project for International Women’s Day, called Courage to Question, which premiered in March 2019 at the U.N. Headquarters. It was produced by Google in conjunction with U.N. Women. It was in an exhibit at the U.N. and is now available to watch on headsets.

How would you compare the completed version of Ashe ’68 to your initial vision when you started working on it?

I was very excited about this story and the challenges it presented at the outset, and I think the final piece is just incredible. I had no idea what the sand-art animation would look like, and it’s much more intense than I expected. When you watch it inside a headset, it’s very experiential, very powerful. I thought it would feel more like something I had seen before, but it’s unlike anything I have watched.

Do you feel that the immersive format of the project communicated the intent of the story more strongly than a ‘traditional’ presentation might have?

The directors wanted to put the viewer inside the headspace of Arthur Ashe at that moment in time — that specific moment on the court. The best way to do that is to set the viewer into an immersive experience, where all of the rest of your senses are cut off from the outside world. You’re right there as Ashe is being attacked by the reporters in the locker room, and you’re walking down the hall of this tennis club where everyone’s staring at him — and then you’re there with him winning the U.S. Open right on that court. You’re the closest you can be to experiencing a particular moment in time as a very specific person.

Do you have any advice for cinematographers tackling their first immersive project?

A lot of people I’ve talked to are nervous to try out virtual reality because it’s such a different set of tools, but I think that most of the skills that you already have as a cinematographer apply in an immersive space. It’s just thinking of the world around you as a sphere instead of a rectangle. We don’t have to frame it, and you can use the whole space. In that way, it’s akin to immersive [live] theater and the experience you have walking through a theatrical performance and participating. It’s a shift of the skills you have as a DP — translating them into everything around you, as opposed to just framing.


AC also spoke with Cohen during the Sundance Film Festival as part of a panel discussion held at the Canon Creative Studio on Main Street. Complete coverage of the panel, “DP First: Women Who Shoot,” including full video, is also available from AC.