Total Immersion for Avatar: The Way of Water

Russell Carpenter, ASC joins James Cameron for his return to the Na’vi realm of Pandora.

By Iain Marcks and Noah Kadner

Unit photography by Mark Fellman. All images courtesy of 20th Century Studios.

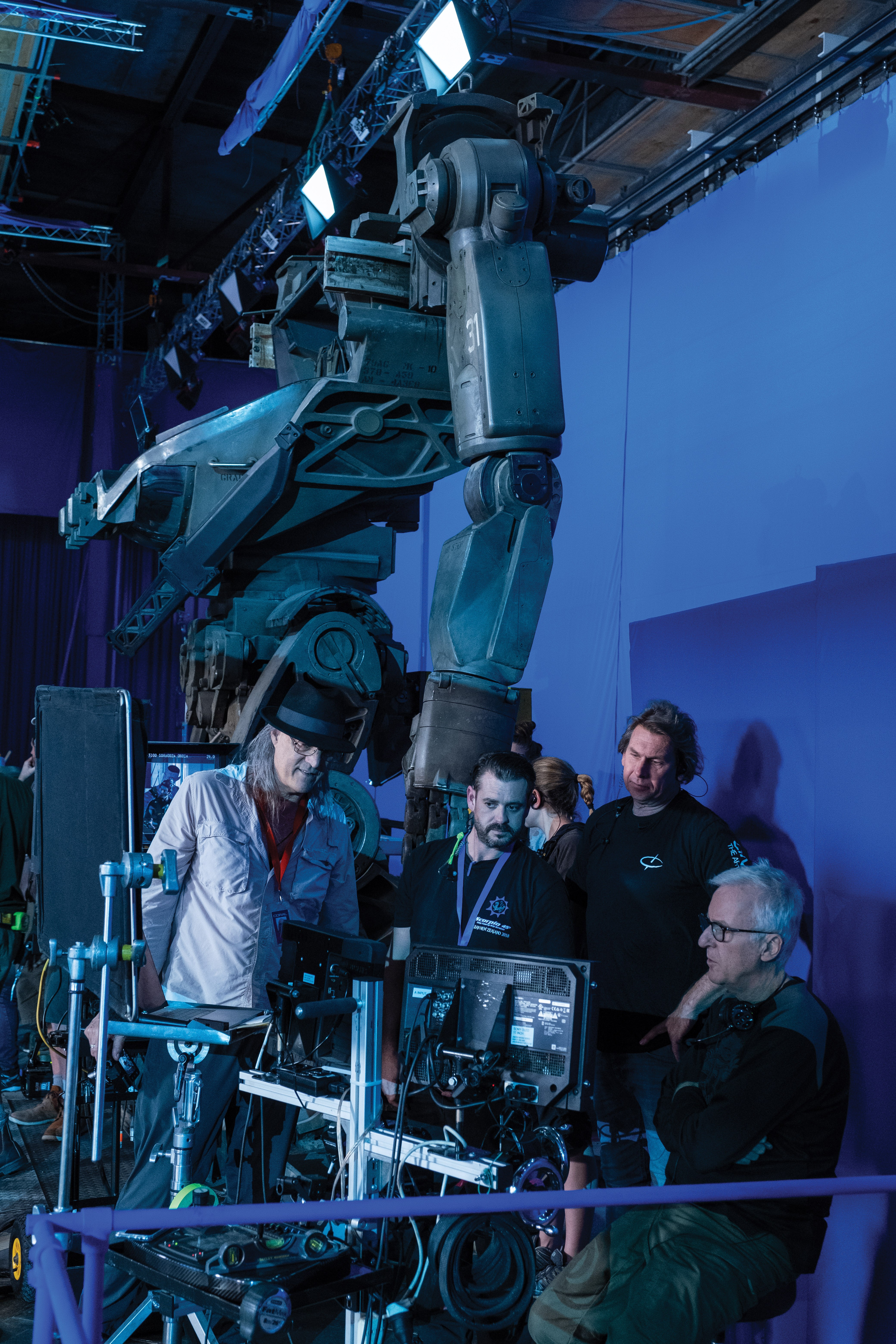

The success of Avatar: The Way of Water depended entirely upon close collaboration between the experts in the production’s key departments and other top practitioners in their fields. “I think of myself as creating the grand provocation and getting a bunch of smart people around me to figure it out,” says director James Cameron.

A primary participant in this effort was Russell Carpenter, ASC, whose work with Cameron began almost three decades ago on the blockbuster action film True Lies (AC Sept. ’94). Their creative partnership continued with the 3D Universal Studios theme-park attraction Terminator: Battle Across Time (AC July ’96) and then Titanic (AC Dec. ’97), which brought both filmmakers Academy Awards.

Seeking a fresh perspective for his next feature, the original Avatar, Cameron turned to Mauro Fiore, ASC (AC Jan. ’10). “I felt Russell and I already knew each other so well that I wasn’t going to learn anything new, so I went with a different director of photography,” Cameron notes with typical candor. “Mauro did a great job, but it wasn’t the same as working with Russell. I didn’t think twice about offering Russell the sequel, and we fell right back into our old way of working, which is very collaborative.”

Set in 2167, 13 years after the events of the first film, The Way of Water continues the story of Jake (Sam Worthington) and Neytiri (Zoe Saldaña), revealing the trouble that follows their Na’vi family when the ruthless Resources Development Administration (RDA) mining company returns to the moon of Pandora with total domination on its agenda.

“The story is the main thing,” Cameron says, “and we’ve built our whole aesthetic around it.” Photographed characters interact with CG characters and environments to a far greater extent than they did in the first film, and numerous scenes are set below the surface of Pandora’s vast oceans.

Carpenter boarded the project in early 2018 and spent a year preparing for physical production while overseeing the virtual lighting of many CGI sequences in Gazebo, Weta FX’s real-time lighting tool developed by Weta visual effects supervisor Dan Cox. “Jim emphasized that the light in each scene on Pandora should display many different color nuances according to each scene’s environment, and never be ‘just neutral,’” the cinematographer says. “When possible, the light should be a living and breathing expression of the life of Pandora. In my mind, this took me back to the landscapes of the Hudson River School, in which the natural world and its inhabitants co-exist peacefully. The process of lighting these virtual scenes was invaluable, as I was able to determine how we would tackle bringing humans into Pandora when we actually began the live-action cinematography months later.”

“Even though it had been 25 years since Titanic, there was the sense that Jim and I just picked up where we left off,” Carpenter adds. “He is just as ambitious in his vision, and the challenges he throws my way keep me totally involved.”

Pandora Perspective

Carpenter photographed live-action portions of The Way of Water in native 3D using a new iteration of the Cameron-Pace twin-camera rig. The modular system could be built in different configurations to meet production needs, and each comprised two Sony Venice cameras in their 4K 17:9 imager mode for 2.39:1 and 16:9 deliverables. Cameron notes that whereas the widescreen format is better for 2D exhibition, “3D thrives in 16:9, where you have a bit of the image in your lap, as well as on either side.”

Carpenter used the Venice’s 2500 ISO setting, which let him light sets without drawing significant power or compromising image quality. The extra exposure also helped offset the two-stop loss from shooting at 48fps through the 3D camera’s beam splitter. In post, motion-grading services were provided by Pixelworks, which used its TrueCut Motion platform to emulate 24fps and reduce motion-blur artifacts. “That was good for Weta, as it gave them clearer images to work with,” says Carpenter.
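As a rough illustration of the exposure arithmetic in this passage, the sketch below expresses light gains and losses in stops (factors of two). The split of the two-stop loss into one stop for the doubled frame rate and one stop for the beam splitter is an assumption made for illustration, as is the comparison against the Venice’s lower 500 ISO base; neither breakdown is attributed to the production.

```python
import math


def stops(ratio: float) -> float:
    """Convert a light ratio into photographic stops (log base 2)."""
    return math.log2(ratio)


# Assumption: the shutter angle is unchanged, so exposure time halves at 48fps.
frame_rate_loss = stops(48 / 24)

# Assumption: the beam splitter passes roughly half the light to each camera.
beam_splitter_loss = stops(1 / 0.5)

total_loss = frame_rate_loss + beam_splitter_loss

# Gain from rating the camera at 2500 ISO rather than 500 ISO.
iso_gain = stops(2500 / 500)

print(f"light loss: {total_loss:.2f} stops")
print(f"ISO gain:   {iso_gain:.2f} stops")
```

Under these assumptions, the higher sensitivity slightly more than covers the loss, which is consistent with the passage’s point that the extra exposure helped offset it.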

To Cameron, stereoscopic imaging is just one component of a large, complicated production — so complicated that “there’s literally no single human who understands every aspect of it,” he says.

Water Work

Performance-capture work kicked off in 2017 on a custom underwater mocap volume built on Stage 18 at Manhattan Beach Studios in Los Angeles; the space measured roughly 42'x85'x32' and featured a 15'x15'x15' deep well. The primary challenge was capturing convincing underwater action. Lightstorm Entertainment virtual production supervisor Ryan Champney, who led the R&D effort in coordination with Cox, says, “We started with a bunch of dry-for-wet tests with traditional wire rigs, but Jim insisted we build a tank for realistic motion.”

Carpenter notes that the tank design “was quite flexible — it could represent deep water, shallow water, still water, moving water, etc. It had an enormous turbine that moved the water around in a racetrack fashion. They even built rapids for our actors and mocap troupe to go down at a pretty decent rate of speed.”

“We had to figure out what would break,” says Champney. “For example, a traditional mocap camera uses infrared [emitters], and that energy gets absorbed in water very quickly. So, we found a wavelength near ultraviolet that would travel efficiently through the water and still be sensitive to the camera sensor. We also had a machinist construct waterproof housings for the mocap cameras.”

Though the action captured in the underwater volume was virtual, the actors needed sets with which to interact. “A capture set consists of only that which the actors physically touch and move through, pull themselves by, hold onto — all those sorts of things,” explains Cameron. “The question was, ‘How do we stay on schedule if we have to build sets for a set change overnight or even during the same day?’ We built a platform covering the entire bottom of the tank and put it on cables. We could bring it to the surface and bolt on pre-made set pieces. We could change a complete set from one scene to another in a couple of hours, or over lunch.”

Lighting in the underwater volume needed to be even and flat to support the mocap cameras. The crew placed 60 Arri SkyPanel S60-Cs over the water to create a high ambient-light level, but first had to overcome reflections on the water’s surface. “[The reflections] were causing false data registrations and noise in the mocap system,” Champney notes. “So, we borrowed an idea Jim had on The Abyss [to block sunlight from illuminating a deep-water tank] and covered the water’s surface with small plastic beads. They were hollow, with a 2mm-thick shell, and almost opaque. You could see light through them from above, but only if [the beads were] strongly backlit. Safety-wise, everyone working in the tank could still easily breach the water’s surface, and the beads gave nice, even lighting to the bottom and eliminated the reflections.”

The team also captured extensive dry-for-dry performance work in a traditionally constructed volume at Manhattan Beach Studios. “We uncoupled the virtual-camera process from performance capture,” says Cameron. “I use my virtual camera the way a director would use a viewfinder: I walk around with it to work out the general staging, and we rehearse. It gives me a sense of where all the characters will be in the environment, but once we’ve got rough marks down, I set [the virtual camera] aside; I give it to someone else to create a kind of wide-field record of what happened because now I’m more interested in [the actors’] performances.”

For reference, Cameron also deployed an array of 12 to 16 Sony PXW-X320 XDCAM ½" 3-CMOS cameras with 5.8-93mm Sony kit lenses and PXW-Z450 4K HDR 2/3" Exmor R CMOS cameras with Fujinon 8-176mm (UA22x8BERD) lenses. “One of the biggest roadblocks we hit in the first movie was creating an editing process from scratch,” the director recalls. “Having video reference is absolutely critical because that’s what you edit with. The cameras are usually handheld because the operators must be quick and flexible. I had a 16-view display matrix on set to review all the angles and ensure that the actors’ performances were correctly photographed.”

Virtual Integration

In January 2019, Carpenter and his team departed for New Zealand, where their task was to blend the virtual world with live-action photography. Camera movement and lens size would have to match exactly. “Every morning on set, we had to bring the live-action [3D] camera into line with the position of the virtual camera,” says the cinematographer. “It was a painstaking process. Not only did our lighting have to match the characteristics of the virtual shots, but it had to hit the actor in exactly the right place with the proper timing.”

One of the first orders of business for Weta FX was developing a new Simulcam, the virtual-camera system devised for the original Avatar. The Simulcam composites virtual and practical elements and feeds the result into the production camera’s viewfinder, so the operator sees the whole world composited in near-real time. All the components are registered and aligned in 3D space so that the proper scale of practical and virtual elements is maintained for the camera operator (and those watching the production monitor), which allows both to be framed simultaneously.

“Jim directed and operated through the lens on the 3D handheld rig,” Carpenter explains. “Cameron McLean operated our Steadicam shots and crane shots, and Richard Bluck [NZCS], when not attending to his 2nd-unit DP responsibilities, also sometimes operated. The New Zealand camera team, headed by 1st AC Brenden Holster, was exceptional; even though they faced daily myriad challenges they always kept things flowing smoothly. The New Zealand crew was excellent, and that includes every department.”

Weta FX motion-capture supervisor Dejan Momcilovic led a team tasked with updating the Simulcam technology for The Way of Water. “The biggest challenge was integrating with the production and not slowing everything down,” Momcilovic says. “We had scenarios where the foreground is CG, with live action behind it, then more CG, and then more set. It was quite complex. The solution was a combination of machine learning and computer-vision cameras.

“We started lidar-scanning the sets as they were nearing completion and generated a lot of training data for our neural network,” Momcilovic continues. The neural-network system learns the basic geometry of the set from this lidar data and can then predict that geometry for compositing. “We infer an object disparity in one camera, turn it into depth, and then project that back into space and observe it with the hero camera,” he explains. “The computer-vision camera is very fast at acquiring the image, so we’re a frame ahead and ready to composite. Gazebo handled the real-time rendering separately, so the final composited image in the viewfinder has a lag of four to six frames depending on each scene’s complexity.”
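The disparity-to-depth step Momcilovic describes can be sketched with the textbook pinhole-stereo relations. This is a minimal illustration, not Weta FX’s implementation: the `Pinhole` class, the function names, the camera values and the simplification that the hero camera differs from the witness camera only by a sideways offset (no rotation) are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Pinhole:
    """Hypothetical ideal pinhole camera; all values are in pixels."""
    focal_px: float
    cx: float
    cy: float


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Standard stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def back_project(cam, u, v, depth_m):
    """Lift pixel (u, v) at a known depth to a 3D point in that camera's space."""
    x = (u - cam.cx) * depth_m / cam.focal_px
    y = (v - cam.cy) * depth_m / cam.focal_px
    return (x, y, depth_m)


def project(cam, point, cam_offset):
    """Observe a 3D point from a camera translated by cam_offset (no rotation)."""
    x = point[0] - cam_offset[0]
    y = point[1] - cam_offset[1]
    z = point[2] - cam_offset[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (cam.focal_px * x / z + cam.cx, cam.focal_px * y / z + cam.cy)


# Illustrative numbers only.
witness = Pinhole(focal_px=1000.0, cx=960.0, cy=540.0)
hero = Pinhole(focal_px=2000.0, cx=2048.0, cy=1080.0)

depth = disparity_to_depth(disparity_px=25.0, focal_px=1000.0, baseline_m=0.125)
point = back_project(witness, u=1160.0, v=540.0, depth_m=depth)
pixel = project(hero, point, cam_offset=(0.25, 0.0, 0.0))
print(depth, point, pixel)
```

A production system would also need lens-distortion correction and a full rotation-plus-translation transform between cameras, both omitted here for brevity.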

“The system could tell where our live-action characters were and then differentiate what was behind and in front of them,” says Carpenter. “It provided a tremendously useful preview of how the virtual characters interacted with the live action.”
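Once each live-action pixel has a depth estimate, deciding what sits in front of what reduces to a per-pixel comparison. The sketch below shows that idea on a single scanline, using labels in place of colors for readability; the function name and all sample values are hypothetical, and it is offered only as an illustration of depth-ordered compositing in general, not of the Simulcam’s actual code.

```python
def depth_composite(live_pixels, live_depths, cg_pixels, cg_depths):
    """Pick, per pixel, whichever layer is nearer to the camera.

    A CG depth of None means no CG element covers that pixel.
    """
    result = []
    for live_px, live_z, cg_px, cg_z in zip(
        live_pixels, live_depths, cg_pixels, cg_depths
    ):
        if cg_z is not None and cg_z < live_z:
            result.append(cg_px)    # CG element is in front of the photography
        else:
            result.append(live_px)  # live action (actor or set) wins
    return result


# One scanline with the layering described above: CG foreground,
# live action, more CG, then physical set. Depths are in meters.
live_pixels = ["actor", "actor", "set", "set"]
live_depths = [3.0, 3.0, 8.0, 8.0]
cg_pixels = ["fern", None, "navi", "sky"]
cg_depths = [1.0, None, 5.0, 20.0]

scanline = depth_composite(live_pixels, live_depths, cg_pixels, cg_depths)
print(scanline)
```

In this example the CG fern covers the actor, the actor remains visible where no CG is present, the CG character appears in front of the distant set wall, and the set hides the far CG sky.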

Physical Meets Virtual

The Simulcam also helped Carpenter match his lighting to its CG counterpart. “If Weta has a CG character standing next to our live-action character, obviously they have to be in the same light,” says Cameron. “You are driving a stake into the ground, so you have to get it right, and that’s why pre-planning and pulling the lighting process forward into virtual production was so important.”

While observing Cameron’s motion-capture sessions in Los Angeles, Carpenter and gaffer Len Levine noticed that the director could make major changes to the virtual set quickly. “If Jim wanted to move a waterfall or the sun, somebody at a bank of computers could do that, so we wanted to create a live-action environment that allowed him to work in a fluid manner while also being flexible enough to handle scores of different lighting scenarios,” Carpenter says. Extensive use of practicals, along with lighting built into set pieces that could be quickly assembled, was key to achieving this. For the RDA sets, that meant RGBTD ribbon LED practicals, as well as Astera Helios and Titan tubes and automated Vari-Lite VL2600 moving fixtures.

The Simulcam system served different purposes during the virtual and physical phases of production. “The responsibilities of the live-action team were more centered on its overall operation, while the virtual-production ‘brain bar’ was more geared toward performance capture and the virtual camera, though the systems and personnel in both cases had overlapping functions,” says Champney. “Almost all of our software is custom-built. Atlas and Gazebo are Weta’s scene layout/evaluation and rendering engines, respectively, that plug into the host applications of Autodesk Maya and MotionBuilder. Manuka provides a data representation of how light interacts with surfaces.”

Used as a pre-lighting tool, Gazebo can illuminate scenes and provide a preview that’s close to the final rendered quality. It also lets artists block light sources as if they were working on a real film set. As a result, Weta can light scenes predictably, and the project’s army of animators can see how their work looks once it is lit.

Champney notes that the Manuka renderer was developed by Weta to work in tandem with Gazebo: “It can process huge amounts of data in much less time. Lighting done in Gazebo can be transferred into Manuka and appear almost exactly similar. This is thanks to both software having the same reflectance models that were based on real-world lighting.

“The software feeding data to Manuka consisted of [Lightstorm Entertainment’s] Giant real-time performance capture system and our hardware streaming system, which talks to the various devices — cranes, virtual cameras, 3D rigs, inertial measurement units, etc.”

During prep, to understand and process the scope and feel of each set, Levine combined art department models, construction department models of the stages, previs material, Cameron’s notes from techvis, and Carpenter’s scene notes to create 3D lighting plans in Vectorworks for more than 50 physical sets scheduled for construction at Stone Street Studios and Turner Warehouse in Wellington, as well as Kumeu Film Studios in Auckland.

RDA Aesthetic

Live-action scenes involving RDA sets were photographed first. “One of the things we carried over from the original film was the conflicting aesthetic between the natural world of Pandora and the artificial, human world of RDA,” Carpenter explains. “Inside the RDA complex, the light is sometimes mundane and utilitarian, but at other times harsh — quite powerful, as well as invasive. When we do see natural light coming through the windows, it’s at a sharp, geometric angle.”

The largest of these sets was the two-story aft well deck of RDA’s behemoth Sea Dragon vessel. Located on the K Stage at Stone Street, it featured two full-sized practical gunboats and two moon pools with submersibles. At one end of the deck was a bay door through which Carpenter could push sunlight. “We put ArriMaxes on a condor to tackle the broad strokes, one with a spot reflector and one with a flat reflector,” Levine elaborates. “We used the incredibly punchy Vari-Lite VL6000 Beam lights for targeted strikes of sunlight, Robe BMFL Blades and VL2600 lights for more finessed beams and accents, and an array of Arri SkyPanel S360-Cs for ambiance.”

Inside the ship, softboxes with SkyPanel S60-Cs in four- and eight-light configurations could be raised and lowered at any angle from a truss. Effects lighting was accomplished with Chroma-Q Studio Force D XT 12s for flashes and 12-light Maxi Brutes for explosions. Lighting programmers Scott Barnes and Elton Hartney James ran the show from an ETC Hog 4-18 console, which is typically used for stadium events and theme-park attractions.

The critical step of matching the color and quality of real and virtual lighting was done largely by eye. Carpenter studied rough edits of scenes that used his virtual lighting, and the Simulcam real-time stereo composite of the camera and CG images presented him with a basic approximation of the final result.

Pandora Ocean

At Kumeu Film Studios, a 75'x100' surface tank was constructed on Stage B for live-action ocean scenes. Weta furnished Carpenter with effects plates for playback on 20'x10' LED video screens around the tank with the goal of creating “in-camera” reflections instead of creating moving water reflections in CGI. Reflections off the surface of the water were achieved by positioning the screens overhead on travelers and cranes. For reflections in the water, a rolling deck on each long side of the tank supported two 10'x20' screens reflected into a 40' mirror partially submerged at a 45-degree angle to make the desired reflections meet the water line instead of having a gap. Carpenter found that the screens worked better for creating reflections than lighting actors, so they were augmented with pixel-mapped Chroma-Q Studio Force II 72 units and “nets” of daisy-chained Astera Titan Tubes tied together with sash cord. Linked to the video-screen playback footage, they provided synchronized, interactive lighting.
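Pixel mapping, in general terms, means sampling regions of a video frame and sending each region’s color to a corresponding light fixture so the lighting follows the playback. The sketch below averages vertical strips of a frame into one RGB value per fixture. The function name, the strip layout and the tiny sample frame are illustrative assumptions; the production’s actual mapping and control pipeline is not described in this article.

```python
def pixel_map(frame, fixture_count):
    """Average vertical strips of a frame into one RGB level per fixture.

    frame is a list of rows; each row is a list of (r, g, b) tuples (0-255).
    The frame must be at least fixture_count pixels wide.
    """
    height = len(frame)
    width = len(frame[0])
    if fixture_count < 1 or fixture_count > width:
        raise ValueError("need between 1 and `width` fixtures")

    levels = []
    for i in range(fixture_count):
        # Column range covered by fixture i.
        x0 = i * width // fixture_count
        x1 = (i + 1) * width // fixture_count
        strip = [frame[y][x] for y in range(height) for x in range(x0, x1)]
        levels.append(
            tuple(round(sum(px[c] for px in strip) / len(strip)) for c in range(3))
        )
    return levels


# A 2x4 test frame: deep blue on the left, warm orange on the right.
blue, orange = (0, 0, 255), (255, 128, 0)
frame = [
    [blue, blue, orange, orange],
    [blue, blue, orange, orange],
]
levels = pixel_map(frame, fixture_count=2)
print(levels)
```

Running the same mapping on every frame of the playback footage is what keeps the fixtures in step with the screens.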

When the Auckland shoot wrapped, Levine passed the gaffer’s baton to Dan Riffel, who accompanied the production back to Stone Street for the last segment of shooting.

Height Challenged

The average Na’vi height of 9' posed a unique challenge for the eyelines and compositions needed to place them convincingly in the same frame as human characters. For the original Avatar, the team relied on proxy objects such as tennis balls to guide the actors’ gaze, but Cameron wanted a better method. Devising the solution fell to Avatar veteran and techvis specialist Casey Schatz, head of virtual production at The Third Floor.

“Eyelines have always been a problem for visual effects,” observes Schatz. “No matter how incredibly Weta renders a creature, if the actor looks one way and the monster is somewhere else, the illusion dies. We devised an incredibly quiet, four-axis cable-camera rig carrying a SmallHD monitor and a Bluetooth speaker. It moved around in perfect sync with the original mocap so the human actors could react precisely to where the CG characters would be, spatially.”

Cameron adds, “Once we started down the cable-cam road, we knew we’d have open-top sets and fill them in with CG extensions, which is easy these days. The monitor could swivel if the virtual character’s head turned. We ran the video from the actor’s face rig from the original mocap capture session. The audio of their dialogue was also directional, so it was very intuitive for the actors [performing to it]. Literally every single live-action scene involved CG characters and/or set extensions.”

The cable-camera system proved challenging for techvis because it had to interact with the actors while safely avoiding set pieces, lighting, rigging and other potential obstacles. To address the issue, Schatz visualized each set in Maya using data from a Leica BLK360 laser scanner and then carefully plotted the cable-cam stand-ins’ placement and movement. “We placed the [cable cam’s] four towers relative to the live action along with the cables,” says Schatz. “I could exchange the techvis with the art, camera and rigging departments, who worked in Vectorworks. If they needed to add a light or bring in the Scorpio crane, for example, we’d know if we needed to readjust to avoid hitting something. It became Tetris on top of a Rubik’s Cube to accommodate the camera and lighting and avoid snaring the cables on anything in the set during the moves.”
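The basic geometry of a four-tower cable rig can be sketched simply: for any target position of the monitor, each cable’s length is the straight-line distance from its tower anchor to that position. The tower coordinates, the function name and the sample eyeline position below are hypothetical, and the sketch ignores cable sag, pulley geometry and the obstacle checks Schatz describes.

```python
import math

# Hypothetical tower anchor points in meters (x, y, z), one per set corner.
TOWERS = [
    (0.0, 0.0, 14.0),
    (6.0, 0.0, 14.0),
    (0.0, 8.0, 14.0),
    (6.0, 8.0, 14.0),
]


def cable_lengths(payload, towers=TOWERS):
    """Return the straight-line cable length from each tower to the payload."""
    return [math.dist(tower, payload) for tower in towers]


# Monitor centered in the set at an assumed eyeline height of 2 m.
centered = cable_lengths((3.0, 4.0, 2.0))
print([round(length, 2) for length in centered])

# Moving the monitor toward the first tower shortens that cable
# and lengthens the one at the opposite corner.
shifted = cable_lengths((1.0, 2.0, 2.0))
print([round(length, 2) for length in shifted])
```

Feeding a sequence of positions taken from the recorded mocap through such a function would give each winch a length to pay out over time, which is the general idea behind moving the monitor in sync with a virtual character.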

“The Grand Provocation” Continues

After Covid-19 disrupted the shoot in 2020, a reduced crew returned for the final phase of live-action filming in New Zealand. Cameron then began intensive postproduction, coordinating with Weta FX to refine virtual material down to the final pixel. The Way of Water was completed after five years of production, but Cameron continues work on the forthcoming sequels, the next of which is due in 2024.

“I don’t know of any other filmmaker in the world who uses technology this way,” Carpenter marvels. “We’ve upped the game not just on the spectacle, but on the emotional side of things. The heart of Avatar: The Way of Water is about family and what constitutes family. It’s also about belonging, and the pain one can feel if they don’t belong. In the time between the first Avatar and this one, an incredible amount of research and technology went into being able to re-create and transmit the minute emotional clues we give each other. I believe there is more nuance for the audience to detect in each actor’s performance, and that makes our emotional connection to the story more powerful.”

Cameron concludes, “As hard as it is in the moment, you end up with a great sense of satisfaction. I know Russell is proud of his work on this film, as well he should be.”

Dimensional Advances

There’s always something interesting about the way James Cameron makes a movie. Early in his career, he learned to maximize his resources while working in the production-design and visual-effects departments on films produced by Roger Corman. The seven features he has directed — from The Terminator (AC April ’85) to Avatar — are all notable for their innovative use of special and visual effects, often involving seamless combinations of traditional and new methods in production and post. No longer limited by resources, Cameron continues to stretch the boundaries of filmmaking technology.

Two innovations on Avatar were virtual cinematography and virtual production, terms coined during the production of the film in 2007-’08 to describe real-time integration of virtual and live-action characters, performance capture within a volume, and the use of a virtual camera. The process was conceived by Rob Legato, ASC, who also served as the film’s visual-effects-pipeline engineer.

A third breakthrough was the development of the twin-lens Cameron-Pace Fusion 3D camera rig, which was used to shoot the live-action material. It was the most sophisticated system of its kind at the time and an evolution of the beam-splitter design employed on Cameron’s 3D documentaries Ghosts of the Abyss (AC July ’03) and Aliens of the Deep (AC March ’05). The Fusion design featured two Sony HDC-F950 CineAlta HD ⅔" sensor blocks and Fujinon zoom lenses (the HA16x6.3BE or the specially designed HA5x7B-W50) with remote-controlled interocular distance and convergence. Relatively lightweight (when compared to film-based 3D systems), the setup allowed for a more subtle, versatile approach to stereoscopic capture.

Since 2009, advances in optics and digital imaging have produced compact 35mm-sensor cameras better suited to native 3D production. For The Way of Water, Cameron worked with Sony to integrate the Venice camera’s Rialto extension system into a redesigned Fusion. Patrick Campbell, Lightstorm Entertainment’s director of 3D camera technology, supervised the refit, replacing steel with titanium and fabricating new metal and 3D-printed nylon parts with carbon-fiber reinforcements. “We removed enough weight to add a 1.5-pound, servo-driven, height-pitch-roll adjustment plate for the vertical camera, which allowed remote camera alignment, and we were still able to get the Fusion down to 29.8 pounds [at its lightest],” says Campbell.

Carpenter’s handheld and Steadicam configurations of the Fusion used two Rialtos on 20' tethers. Tripod, crane, jib and underwater operation utilized two Venice bodies in-line front-to-back, with a lens on the front body and a Rialto in the overhead vertical position. Wide-angle underwater photography was managed with an open-mirror housing and watertight Nikonos-mount lenses that protruded from the enclosure.

“The weight on the rig is highly dependent on how it needs to be used for a given shot,” notes 3D systems producer John Brooks, a longtime collaborator of Cameron’s. “The more complex a visual effect is, the more metadata is needed, so more gear gets added to the camera package. The Fujinon [MK18-55mm, MK50-135mm and Premier ZK Cabrio 19-90mm] lenses really made a difference — light zooms with the right focal range and imaging characteristics that satisfied both Jim and Russell.”

Despite Avatar’s global success, native 3D production has struggled to gain traction since its release. Cameron attributes this to subjective industry standards. “We decided to go with a ⅔-inch sensor because the lenses that covered it could be quite small [compared to standard cine zooms],” he remarks. “But a lot of cinematographers wanted to shoot with 35mm sensors, so you go from a 3-pound Fujinon zoom to a 17-pound Panavision zoom — times two — and now you’ve got 34 pounds just in glass before you even account for the weight of the camera. They didn’t realize there was this tradeoff. If you use a 3-pound lens, you can have a 30-pound 3D camera system.”

To Cameron, what constitutes “good 3D” remains essentially unchanged. “You want to be aware of that volumetric space between the lens and the action. That’s where the fun is, right? I think it works best on a subliminal level. You don’t want to linger on it, and you don’t want something constantly in the viewer’s face.”

Evocative Lighting

The quality of light on the moon of Pandora was designed to change according to time of day, with luminance and color performing in opposite directions between dusk and dawn. “In terms of color, your palette of light wavelengths is very narrow at midday and widens as it gets later,” says director James Cameron. “In terms of luminance, your key-to-fill ratio is quite high at midday and lowers as it gets later.”

As Pandora orbits its primary planet, there are two seasons on either side of its spring and fall equinoxes when the planet eclipses the sun for an hour a day. “We can go from a day scene to a night scene in two minutes of story time, which we often use to mystical or suspenseful effect,” Cameron remarks.

Other quick lighting transitions were either programmed into the ETC Hog 4-18 desk during the shooting of a given scene, or the solution simply became a matter of using a solid to block a source. “We did a lot of research into using digital light projectors to simulate the shadows of the flying Ikran, the dragon-like winged creatures the Na’vi fly on,” Carpenter explains, citing one example of this. “We were able to get quite accurate shadow structures but found it a somewhat lengthy process to re-program the speed and size of these shadows in the heat of the moment. Surprisingly, we discovered that a terribly ‘lo-fi’ solution worked best — basically, someone on a lift moving a cardboard cutout rapidly in front of a light could make for a very satisfactory Ikran shadow and also be much more flexible.”

Although most of Pandora was created virtually, many physical sets were built for live-action characters to interact with. “Anywhere a human foot touched the ground was a practical build,” gaffer Dan Riffel reports.

Under the canopy of the Pandoran jungle, daylight tends to be soft and toward the blue side. “Depending on how thick the canopy is, you’ll get these harsh patches of warm sunlight breaking through,” Carpenter says. “For forest shadows, we used a variety of materials — live plant cuttings, of course, but very resourceful electricians actually fashioned very realistic ‘forest canopy shadows,’ with empty 4'x8' frames filled with a mixture of found materials including sticks, macrame designs, plant fronds and whatever else seemed to fit. No frame was alike, and they worked amazingly well when placed in front of our light sources.”

Cameron found that blue skin takes well to green fill, so wherever hard sun hits patches of flora on the jungle floor, a soft green light bounces back. “By keeping most of our lights above the set and reflecting them off elements on the ground, we were able to make modifications very quickly by using Vari-Lites,” says Carpenter. He also made frequent use of large muslin solids that could be raised and lowered from a truss to soften the overhead sources.

At night, the jungle comes to life with bioluminescence, created on set with sticks of LiteRibbon hidden in the foliage. Vari-Lite VL2600s and SkyPanel S60-Cs were dialed in with two kinds of blue: ½ to ¾ CTB for general ambience, and a custom moonlight based on Lee 181 Congo Blue. Carpenter notes that Lee 181, favored by an older generation of cinematographers, “takes away 2½ to 3 stops of light, but you get this beautiful, saturated blue.”

2.39:1, 1.78:1 (3D)

Cameras | Sony Venice

Lenses | Fujinon MK, Premier ZK Cabrio