On The Walls: Virtual Production for Series Shooting

Television productions step into the world of LED walls and in-camera visual effects.

A limitless variety of interactive environments that can be captured in-camera on a single stage has been an attractive notion during the past year and a half. This brand of virtual production — which employs an LED wall, tracked cameras and real-time graphics rendering — requires less travel, and when combined with remote-capable equipment and workflows, offers additional critical advantages to shows working under safety constraints.

When the pandemic finally abates, it seems likely that the appeal of this methodology will endure — and that its proliferation within the world of episodic television, in particular, will continue. LED-wall setups expand the potential geographic scope of long-form story-writing, for example, without substantial change or cost to the postproduction workflow. And for episodics that take place within a limited set of locales, the costs for creating a few highly detailed 3D environments can be amortized over a full season, or multiple seasons. In addition, given the potential for a set physically populated essentially by only the actors and an operator — with other crew working remotely — filmmakers may be able to work with specific collaborators regardless of where they may be in the world.

Here, we offer an overview of some of the television shows that have already entered the realm of LED-wall virtual production.

Whereas some series, such as the Disney Plus Star Wars shows, were conceived for LED-volume environments — aka “mixed-reality” or “XR” stages — others pivoted to LED walls for VFX work as the medium became more accessible. ABC’s Station 19, an action-rescue show, traded on-location emergency-vehicle driving sequences for LED walls and rear-projected driving plates. Daryn Okada, ASC, a producer, director and cinematographer on the series, helped oversee the new approach starting in May 2020.

“Our first challenge was figuring out how to work within the safety protocols without a result that looked affected by those restrictions,” Okada says. “Virtual production was always in the back of my mind, but it came down to space; we couldn’t find a stage for a large volume for our standing sets because all of those stages were booked or on hold. But there were several plates we’d shot on location in Seattle that we could repurpose for driving sequences using a more modest LED wall.”

The Station 19 team worked with Sam Nicholson, ASC, and his Stargate Studios facility to create a safe stage environment in Los Angeles. (Nicholson provided similar services for HBO’s series Run last year.) “Our main content screen was approximately 12 feet wide and modular, so we could use it easily with any vehicle from any angle,” says Okada. “We’d also roll in three large OLED monitors to wherever we wanted to see reflections in the rear and side mirrors or over the hood. We had four plates running together in sync with timecode, and the crew at Stargate kept track of exactly where we were in each take. So, if we wanted to pick up a new shot from within a scene, we could jump right to that piece of the background footage, and our coverage would match for continuity. That efficiency made a huge difference.”
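
The timecode-driven pickups Okada describes come down to a simple frame calculation. The sketch below is not Stargate's software; it assumes every plate carries non-drop-frame 24 fps timecode from a synced array, and the plate names, start timecodes and helper functions (`tc_to_frames`, `cue_plates`) are hypothetical. Given a pickup point expressed as timecode, it works out how many frames into each plate playback must begin so that all four backgrounds line up, even if the recorders started rolling at slightly different moments.

```python
FPS = 24  # assumption: plates share non-drop-frame 24 fps timecode

def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert an HH:MM:SS:FF timecode string into an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff

def cue_plates(plate_starts: dict[str, str], pickup_tc: str, fps: int = FPS) -> dict[str, int]:
    """Frame offset into each plate at which playback must begin for pickup_tc."""
    target = tc_to_frames(pickup_tc, fps)
    offsets = {}
    for plate, start_tc in plate_starts.items():
        offset = target - tc_to_frames(start_tc, fps)
        if offset < 0:
            raise ValueError(f"{pickup_tc} falls before the first frame of {plate}")
        offsets[plate] = offset
    return offsets

# Hypothetical start timecodes for four plates recorded by a synced array.
plates = {
    "front": "01:00:00:00",
    "rear": "01:00:00:00",
    "left": "01:00:00:12",
    "right": "00:59:59:12",
}
print(cue_plates(plates, "01:00:10:00"))
# {'front': 240, 'rear': 240, 'left': 228, 'right': 252}
```

Working in absolute frame counts rather than comparing timecode strings keeps the offsets exact; drop-frame timecode would need an extra correction step that this sketch leaves out.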

Stargate’s ThruView real-time system transforms 2D footage captured as background driving plates into 3D scenes in Unreal Engine, so they can interact with the onstage camera via tracking. “We’re doing inside-out tracking using MoSys or outside-in with OptiTrack,” Nicholson says. “We can also follow the movement of the camera with a pan/tilt/roll, repeatable-move head. Remapping 2D imagery into a 3D environment provides a 2.5D illusion of depth to the camera, and our immersive kinetic lighting is key to our ThruView process.”
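
Why remapping a flat plate onto 3D geometry gives a tracked camera a sense of depth is a matter of motion parallax. Under a simple pinhole-camera model (an assumption for illustration; this is not a description of how ThruView is implemented), a point at depth Z appears to shift by f·t/Z pixels when the camera moves sideways by t, so near elements slide more than distant ones. The function name, focal length and layer depths below are hypothetical.

```python
def parallax_shift_px(camera_offset_m: float, depth_m: float, focal_px: float) -> float:
    """Apparent horizontal shift, in pixels, of a point at depth_m when a pinhole
    camera with focal length focal_px (in pixels) moves sideways by camera_offset_m.
    The point moves opposite to the camera; this returns the magnitude only."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return abs(focal_px * camera_offset_m / depth_m)

# Hypothetical layers a 2D driving plate might be split into, with assumed depths.
layers = {"roadside": 5.0, "buildings": 40.0, "skyline": 500.0}

for name, depth in layers.items():
    shift = parallax_shift_px(camera_offset_m=0.5, depth_m=depth, focal_px=2000.0)
    print(f"{name}: {shift:.1f} px")
# roadside: 200.0 px, buildings: 25.0 px, skyline: 2.0 px
```

Played back as a flat image, the whole plate shifts as one surface at the wall's physical distance; giving its elements separate virtual depths is what produces the differential shift that reads as depth.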


Paramount Plus has added in-camera visual effects to the VFX toolkit of two series: Season 4 of Star Trek: Discovery and Season 1 of the spinoff Star Trek: Strange New Worlds. The shows share a 270-degree, 70'x30' horseshoe-shaped LED volume, constructed by Pixomondo in Toronto, which can be fed real-time animation from Unreal Engine.

“In the Covid era, being able to shoot large-scale locations without having to leave the stage is a huge benefit,” says Jason Zimmerman, lead visual-effects supervisor for both Star Trek series. “The LED wall is fantastic for creating environments, and on Star Trek, of course, traveling to different worlds is something we’re very interested in doing.”

“We’re using Roe’s Black Pearl BP2 2.8mm LED panels for the wall and the Carbon series CB5 5.77mm panels for the ceiling,” says Mahmoud Rahnama, head of studio/VFX supervisor at Pixomondo Toronto. “The ceiling is fully customizable, so we can either take panels out and hang practical lights over the volume, or just use the ceiling’s LEDs for lighting. We have more than 60 OptiTrack motion-capture cameras with the ability to track two cameras — [which are] on Technocranes, Steadicams, dollies and the like — simultaneously.”

Zimmerman notes, “A lot of shows are looking at this as an opportunity to advance filmmaking. Getting something in-camera on the day is so much better than greenscreen in many ways. One major difference [in terms of workflow] is that the production-design and art departments are a lot more involved much earlier in the process, because they have to get assets ready to be photographed instead of waiting until after the shoot.”

He adds, “If it weren’t for Covid, I probably would have been in Toronto for a few months helping usher the volume in. Fortunately, our team up there is phenomenal. I’m able to use [Nebtek’s] QTake [video-assist system] to log in remotely and watch as they’re shooting. If any issues come up, the directors or the executives can reach out, and I can see what they’re talking about. Visual effects has already been working with remote setups for a while, so when Covid happened, it took us a little time to adjust from being in an office. Other than having to get up a bit earlier for the time zone difference between Los Angeles and Toronto, everything else was second nature for us.”


Shows that planned to leverage in-camera VFX from the start include two that will be shot using StageCraft, Industrial Light & Magic’s real-time animation pipeline for LED volumes: The Book of Boba Fett, which will be released later this year, and Obi-Wan Kenobi, set for release in 2022. Both series will use the same volume initially built for The Mandalorian in Manhattan Beach, Calif. More Star Wars shows, including Andor, are in production at Pinewood Studios in England, where ILM has built another large StageCraft volume. Season 3 of The Mandalorian is now in production.

Meanwhile, Netflix is taking a holistic approach led by director of virtual production Girish Balakrishnan, with plenty of research and development into best practices. The goal is a standardized methodology that producers can consistently replicate across the many regions where Netflix produces original content. One of the first major XR-stage series out of the gate for the streaming service is 1899. Created by Jantje Friese and Baran bo Odar, 1899 takes place at the turn of the century aboard a migrant ship sailing to the United States from Europe. Initially pitched as a location shoot that would travel throughout Europe, the production was completely reconfigured for the volume — located at Studio Babelsberg in Germany — which measures approximately 75'x23'.


Another major series in the works is HBO Max’s Our Flag Means Death. Taika Waititi directs the half-hour pirate-themed comedy, and also stars as the infamous Blackbeard. The show’s virtual-production strategy calls for surrounding a full-size pirate ship with a massive LED volume onstage to simulate the open sea.

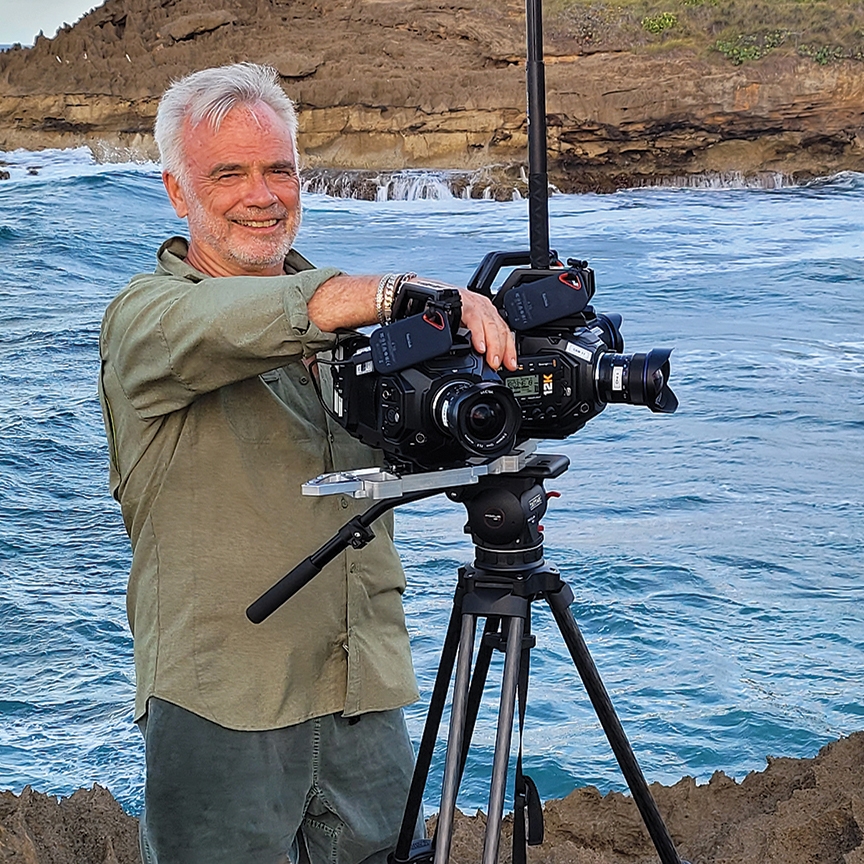

Nicholson brings his considerable expertise to Our Flag Means Death as virtual production supervisor. One task required him to shoot plates off the coast of Puerto Rico, using Blackmagic Ursa Mini Pro 12K and Pocket Cinema 6K cameras, paired with Sigma Art lenses. “We built a stabilized 360-degree rig with eight Pocket 6Ks in a circular array, each shooting onto a 4TB SanDisk SSD,” Nicholson says. “We followed that with a stabilized rig built on the Black Unicorn [remote] head with five 12K Blackmagic Ursa cameras. Multiple camera pano rigs present a real data-management challenge, with resolutions up to 60K for our final stitched images.”


For each plate shoot, Nicholson’s plate team captured more than 200 TB of footage. “Each take was five minutes, times eight cameras, which gave us 40 minutes of data per take,” he says. “We also want to show dailies, so we capture everything into a Blackmagic ATEM Mini Extreme ISO switcher, which gives us a 1080p eight-way split we can share from the location each day and discuss. Ultimately, our immersive plates are stabilized, de-grained and stitched so each scene can be played back through DaVinci Resolve into Epic’s Unreal Engine on set. The final image is then distributed across multiple Blackmagic 8K DeckLink [cards] to 14 4K quadrants mapped onto the massive LED volume from NEP Sweetwater.”
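
Nicholson's per-take arithmetic scales quickly, and a rough storage estimate shows how. The sketch below multiplies take length by camera count (five minutes times eight cameras gives the 40 minutes he cites) and then applies a per-camera data rate. The 300 MB/s figure and the helper names are hypothetical placeholders, not published Blackmagic RAW rates; real rates depend on resolution, frame rate and compression setting.

```python
import math

def footage_minutes(take_minutes: float, cameras: int) -> float:
    """Total camera-minutes recorded in one take across the whole array."""
    return take_minutes * cameras

def take_size_tb(take_minutes: float, cameras: int, mb_per_sec_per_camera: float) -> float:
    """Approximate storage for one take in decimal terabytes (1 TB = 1,000,000 MB)."""
    seconds_recorded = footage_minutes(take_minutes, cameras) * 60
    return seconds_recorded * mb_per_sec_per_camera / 1_000_000

def takes_to_reach(target_tb: float, per_take_tb: float) -> int:
    """Number of whole takes needed before total footage reaches target_tb."""
    return math.ceil(target_tb / per_take_tb)

# Hypothetical data rate; substitute the real codec setting when known.
ASSUMED_MB_PER_SEC = 300.0

per_take = take_size_tb(5, 8, ASSUMED_MB_PER_SEC)
print(f"{footage_minutes(5, 8):.0f} camera-minutes per take")  # 40
print(f"{per_take:.2f} TB per take")                            # 0.72
print(f"{takes_to_reach(200, per_take)} takes to reach 200 TB")  # 278
```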

Nicholson sought ways to make the plate-capture both high-quality and cost-effective. “People tend to forget that the current LED volumes are 10-bit color with relatively low resolution,” he says. “So, it’s essential to match your cameras and your final content to the LED display from the outset. We are now using multiple high-quality cameras, lenses, memory, storage and playback at less than half the cost of what it was a few years ago. The cameras and data are evolving so fast that today’s solution may be replaced by something completely different six months from now.”
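
The practical meaning of a 10-bit display is easy to quantify: each color channel has 2^10 = 1,024 code values. The sketch below assumes a normalized signal in [0, 1] and a plain rounding quantizer (real LED processors apply their own transfer curves and dithering), and counts how many distinct levels a smooth gradient keeps at 12 bits versus 10 bits. The helper names are hypothetical.

```python
def to_code_value(x: float, bits: int = 10) -> int:
    """Quantize a normalized value in [0, 1] to an integer code value."""
    max_code = (1 << bits) - 1        # 1023 for 10-bit, 4095 for 12-bit
    clamped = min(max(x, 0.0), 1.0)   # keep out-of-range values on the scale
    return round(clamped * max_code)

def distinct_levels(samples: list[float], bits: int) -> int:
    """How many different code values survive quantization at the given depth."""
    return len({to_code_value(s, bits) for s in samples})

# A smooth ramp with 4,096 steps, e.g. a hypothetical 12-bit gradient of open sky.
ramp = [i / 4095 for i in range(4096)]
print(distinct_levels(ramp, 12))  # 4096
print(distinct_levels(ramp, 10))  # 1024
```

Three out of every four steps in the finer gradient collapse onto a neighbor at 10 bits, which is one reason to grade and deliver plates with the display's bit depth in mind rather than discovering banding on the wall.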
