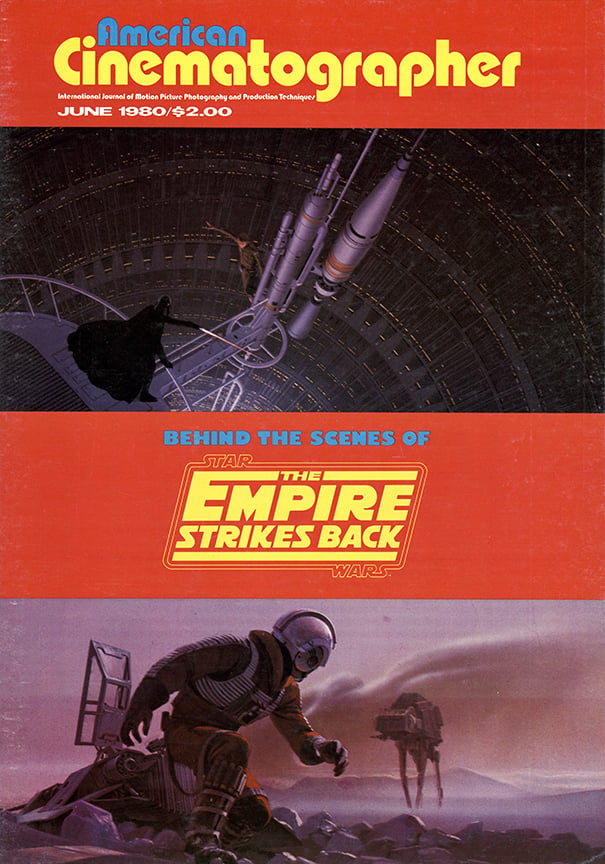

Special Visual Effects for Star Wars: The Empire Strikes Back

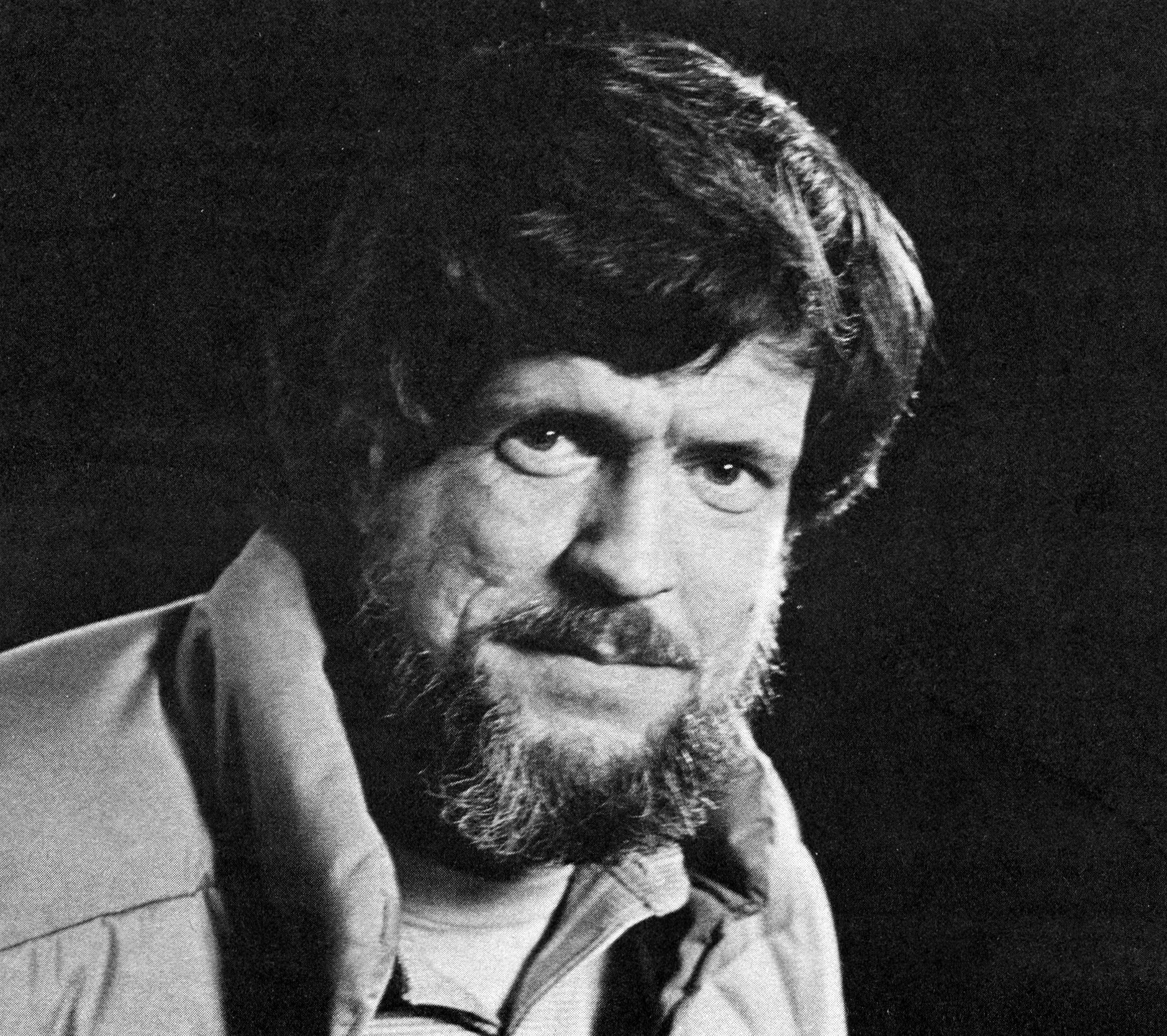

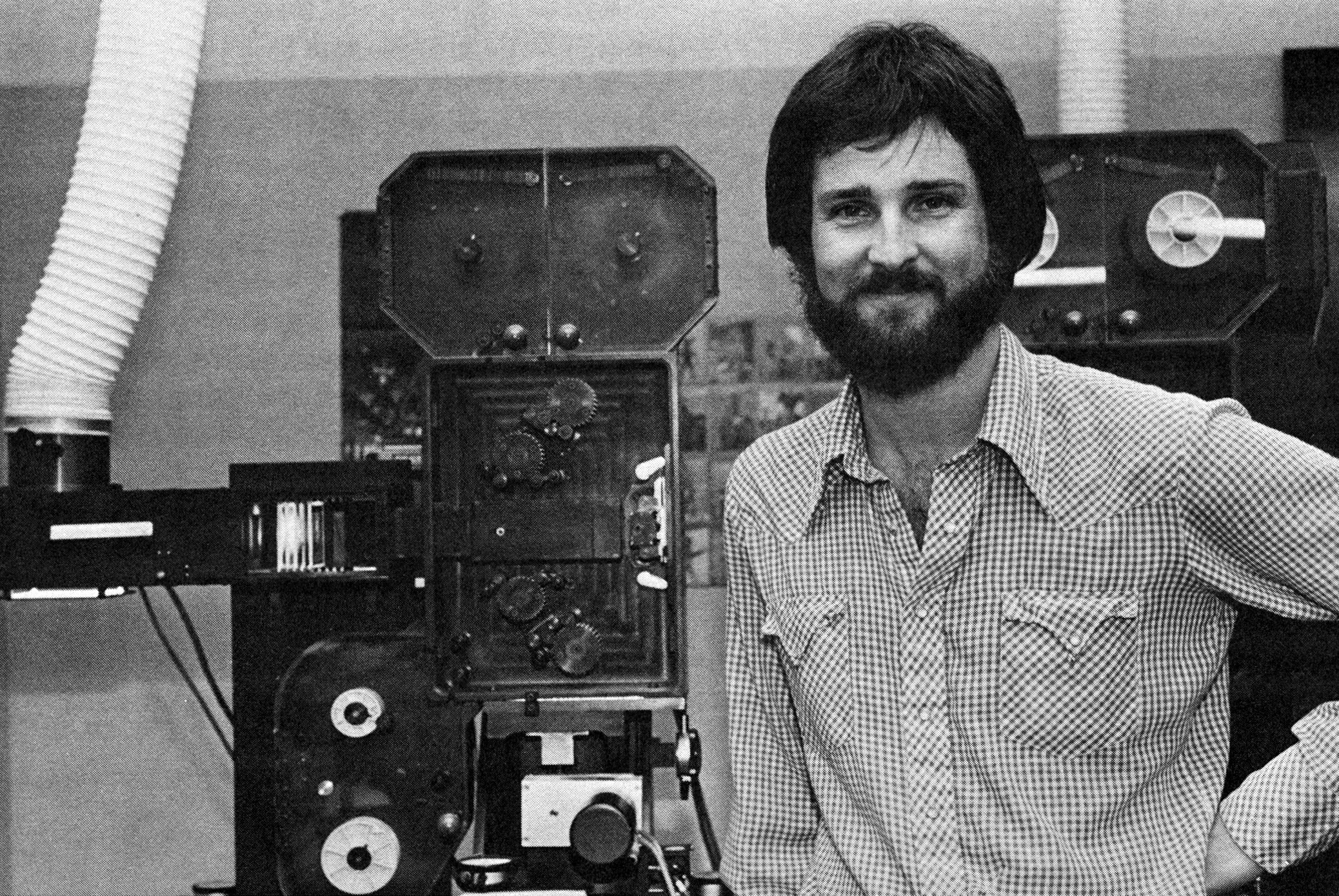

Richard Edlund, ASC discusses the complex issues at hand during the production of the 1980 sci-fi sequel.

The visionary zeal — the nuts and bolts — the blood, sweat and tears involved in creating this dazzling display of cinematic legerdemain.

By Richard Edlund, ASC

Special Visual Effects Co-Supervisor

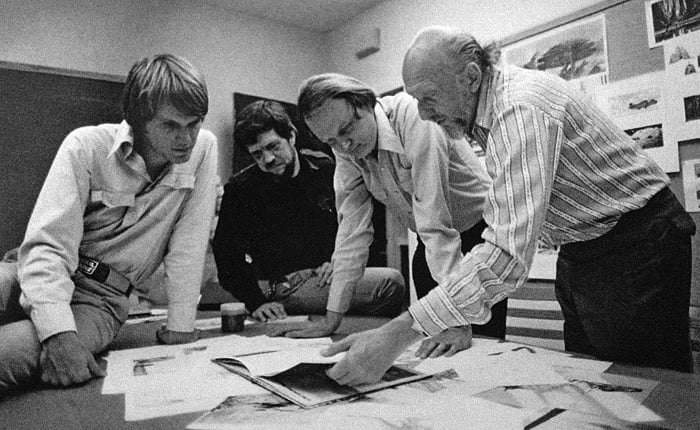

The most important consideration in setting up Industrial Light and Magic, the facility that produced the special effects for The Empire Strikes Back, was finding a staff.

We have an organization that is really dependent upon the inter-reaction of departments on a fairly immediate basis and our building is small, as compared to normal studios in the motion picture industry at large. It's somewhat of a disadvantage to have a space that is not quite big enough, but, on the other hand, it is an advantage in that it forces you to compact the departments closely together and to utilize this little nook and that little nook. The positive result is that all of us work close to each other and pass each other frequently in the course of doing our work, which is very complex in nature. The coffee machine is our most important meeting place, and when we pass each other in the hallway we can say, "What about the density in this scene?" This kind of thing happens in the normal course of getting from one place to another in the building, so we don't have the problem of departmentalization.

If the matte department were a block away from the optical department and the shooting stage were somewhere in the distance, we would lose that contact. If you are a block away, you may as well be miles away, because the work we do is so intricate and detailed and you get so involved in your aspect of it that unless you automatically tend to bump into each other you don't communicate.

One of our major problems in the beginning was to cope with the logistics of the various departments and decide how they were to be set up. This was difficult to do at first and we tried to look as far ahead as possible, because once you put an optical printer down and set up a department around it, that set-up gets fairly firmly entrenched. Things get bolted to the floor, utilities get piped in, air conditioning systems get installed. All of this is going to be there for quite a while, so you have to be careful about where you put things. This, together with the initial challenge of coming up into an environment (San Rafael) that was not oriented toward film, probably presented one of our chief difficulties in getting started.

Getting back to the problem of finding a staff — since we knew that there was no film labor pool, per se, in the San Francisco area (and certainly not that many people who had specific experience along the lines of the oddball type of work we were doing), it became obvious that we would have to draw most of our skeleton crew from Los Angeles. With that in mind, we tried to find the best people we could who would be willing to uproot their lives and move away from the hub of the film industry.

Bruce Nicholson (Optical Photography Supervisor), Dennis Muren (Effects Director of Photography) and I were approached at about the same time. Dennis and I were to share the work as effects cameramen, but I have been functioning more as a supervisor than a cameraman and have been enjoying that position. Lorne Peterson (Chief Model Maker) was a veteran of Star Wars. Conrad Buff (Visual Effects Editorial Supervisor) didn't work on Star Wars, but he had worked on an intermediate project with us, did excellent work and was definitely the person we wanted on The Empire Strikes Back. These were among the key personnel in the production departments.

In the support departments, such as machine shop and electronics systems development, there was Gene Whiteman, who became Machine Shop Supervisor and was a real lucky find. I didn't know him before we started, but he became a very good friend and was extremely adaptable to all of the many and varied projects that we wound up getting into. On a production like this every day brings something completely different. We don't know what's happening until we walk in and all of a sudden find ourselves faced with a problem and have to work our way out of it somehow. That's just the nature of the work.

Jerry Jeffress, who is from the Bay Area, became our Electronics Supervisor, and Kris Brown our Systems Engineer. The Stop-Motion Animators, Jon Berg and Phil Tippett, had done some work on Star Wars and were a very, very important part of this picture.

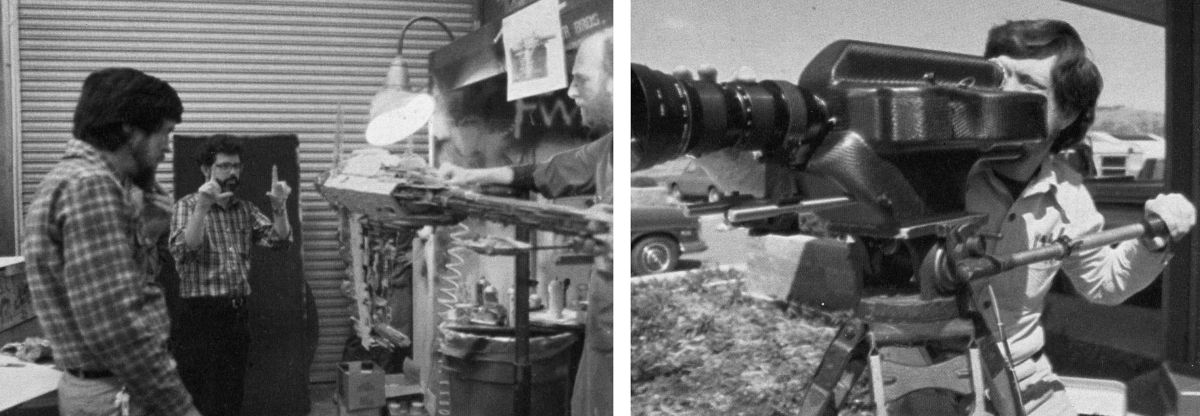

On Star Wars we had only two basic motion-control cameras, both of which worked very well almost from the outset. On Empire we had those two cameras, one other camera which I rented from Lin Dunn [ASC] for a while, and one high-speed camera which I rented from Paramount and which was capable of somewhere between 96 and 100 frames per second. Those were the cameras we had to work with — except for some shots that were done in anamorphic and which we filmed with standard cameras. Our work seemed generally to involve either a half-second to 30 seconds of exposure per frame or 100 frames per second or some other multiple of normal speed. Not much material was shot at sound speed, because we were always taking advantage of some quirk in the persistence of vision to effect our trick photography in one manner or another.

One of the projects which I initially started on was the building of a new high-speed movement in the VistaVision format by the Mitchell Camera Corporation. Only two of these had been made in the 1950s, when the company was in full production with an enormous assembly line. Now they had to drag the blueprints out of their files and I knew it would take them several months to complete the two movements that I ordered.

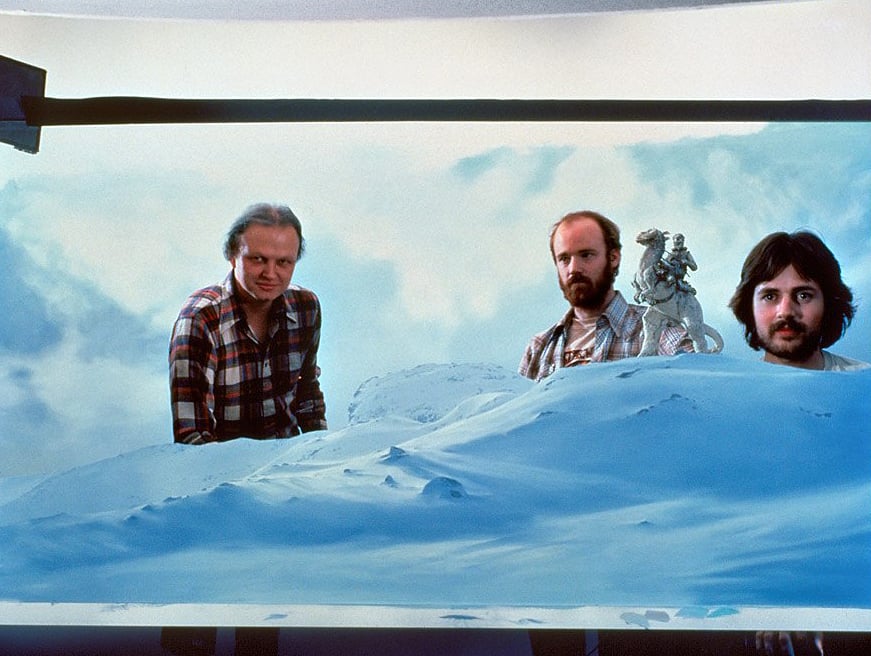

Meanwhile, we began design on a reflex VistaVision camera, which didn't exist at that time. We had a movement which we could use for that one and we immediately began building the housing, which we wanted to get finished in time for it to be delivered to the location in Norway. It had to have built-in heaters and be able to work at 30°F below zero. That was a real challenge and we came through on it. The reflex camera took three months to build and it worked fine. We finished it just sort of in the nick of time to use it on location.

At the same time, the sound speed version was being built with a motion control system that would enable us to photograph motion-control work in the field. We could then bring the unit back to the stage and it would remember the exact tilts and pans that the operator made. The operator would not necessarily even be aware that it was a motion control camera. The system would merely memorize what he did and capture that information on magnetic tape. We could then bring the data back and put the camera in a miniature set on our stage and do a move that would fit exactly to that which had been made on location. That did not get finished in time for location shooting, but it was used in-house for shooting all of the stop-motion work.

That multiplied our motion-control cameras to three, the first one being the Dykstraflex, which was used for Star Wars, and its sister camera which runs on the same information base, although it looks entirely different. It will repeat the same move that the other camera made, so that a matching background can be photographed on the second camera. This allows the main camera to go on shooting the more complex shots or those that require greater flexibility.
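The record-and-repeat idea described above can be sketched in a few lines. This is only an illustration under stated assumptions, not a description of the actual electronics: the class name `MoveRecorder`, the channel names and the one-sample-per-frame storage are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MoveRecorder:
    """Hypothetical per-frame recorder for camera axes (illustration only)."""
    channels: tuple = ("pan", "tilt")
    frames: list = field(default_factory=list)

    def record(self, **positions):
        # Store one sample per exposed frame; every channel must be present.
        missing = [c for c in self.channels if c not in positions]
        if missing:
            raise ValueError(f"missing channels: {missing}")
        self.frames.append(tuple(positions[c] for c in self.channels))

    def replay(self):
        # Yield the stored positions in order, so a second camera (or a
        # second pass on the same camera) repeats the move frame for frame.
        for sample in self.frames:
            yield dict(zip(self.channels, sample))


# First pass: the operator pans and tilts by hand while the move is logged.
recorder = MoveRecorder()
for frame in range(4):
    recorder.record(pan=frame * 0.5, tilt=10.0 - frame * 0.25)

# Second pass: drive another camera from the recorded data.
stage_move = list(recorder.replay())
```

Because the second pass reads back exactly what was stored, a background shot on the sister camera lines up with the foreground move without anyone having to reproduce it by hand.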

Meanwhile, the high-speed camera was being developed and worked on by Gene Whiteman and master movement wizard Jim Beaumonte. We knew the design of the movement would be capable of at least 100 fps, which we have achieved at this writing, but we are planning further modifications to some of the parts and the movement in order to make the camera run at perhaps 150 fps — if we are lucky.

This meant that we now had four cameras. Then [co-visual effects supervisor] Brian Johnson found five Technirama cameras in England and we have been systematically dragging those out, one at a time, and more or less re-tuning them and putting up-to-date electronic drive systems into them in order to increase the number of cameras that we have available, so that we can commit a camera to each shot.

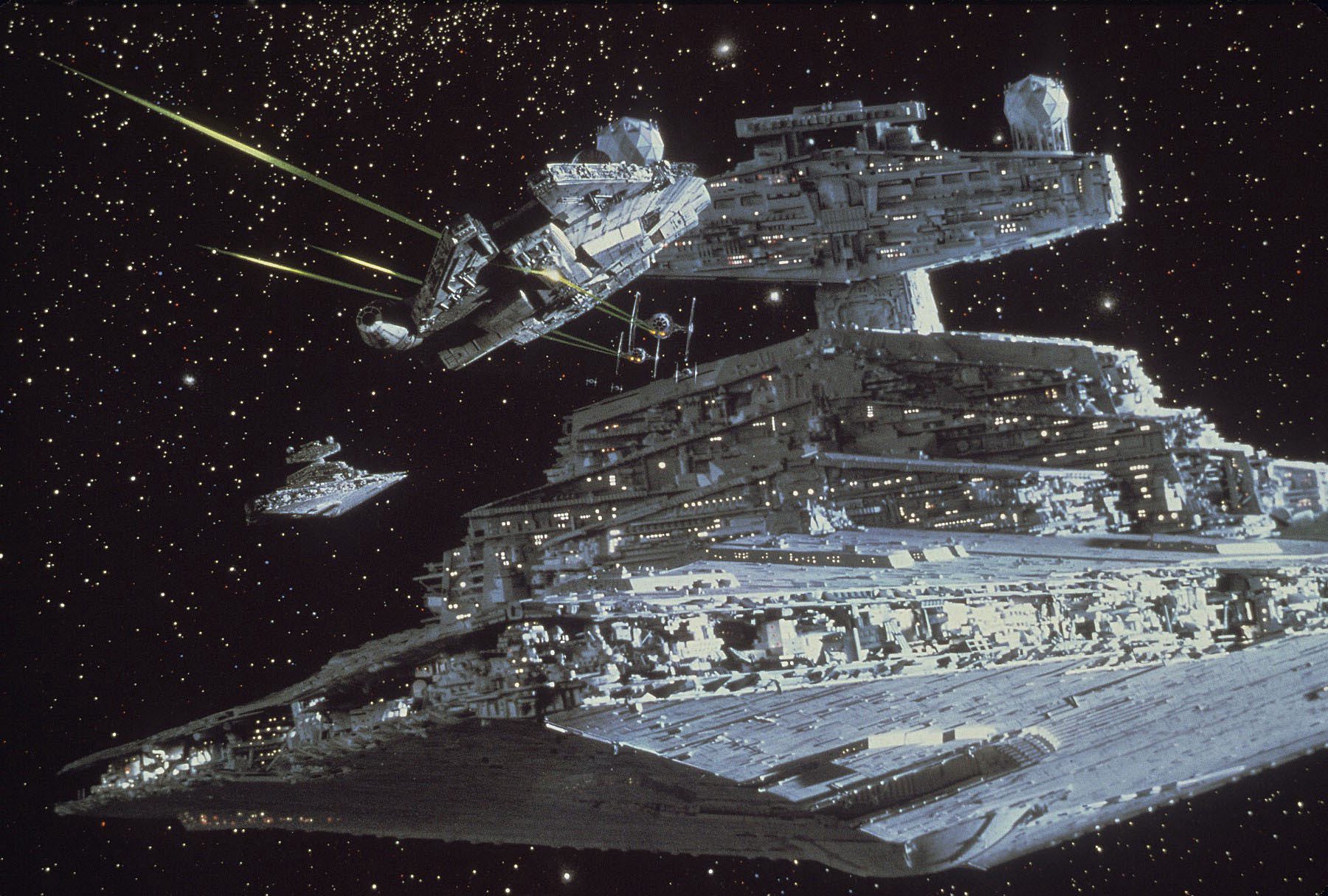

The system that we have is capable of a very high rate of production in that we can program a shot in, say, 15 minutes, if it's a very simple shot. A really complex shot may take two hours to get the motion program down. Then, once that is done, we can photograph a black-and-white test, develop it in-house, and look at it immediately. If it looks good, then we go on with it. That is fine for the main type of production shots (which involve careening spaceships, in a lot of cases) or background paintings or miniature shots in one form or another.

The thing is that there are certain shots that require tweaking. Because they are R&D shots of a type that you haven't exactly done before, you are taking a chance. In order to do that kind of shot, you have to commit a camera to the shot for a period of time, and if you don't have enough cameras to commit to such shots, then you have to shoot the scene, give it your best shot, and go on. The more cameras we have to commit to shots, the less personnel we have to tie up on each set-up. One person can work from set-up to set-up. He can do a test on one set-up, while he moves on to another set-up and leaves the first camera where it is. This system enables us to try a greater variety of shots and spend more time on certain problems that require finessing. We'll have probably eight cameras before we are finished.

This brings me to the new VistaCruiser camera which we are now working on. It will have an 80' track, as opposed to the 42' track that we have now. It will have a longer boom arm, be a steadier camera and have a greater film capacity. It will also have a better video viewing system, a better follow-focus system and a better motion control system.

Our final vision (or our fantasy) is to make an electronic control system which will feed all of the cameras, including the printer, the Oxberry animation stand and all of the departments, so that the various pieces of equipment can "talk" to each other. For example, let's say that there is a shot which, because of limitations of space or the length of the track, you can't make as long as is needed. Then, you would either want the printer to continue moving back on it at the same rate the camera was going and maintain a similar trajectory, or you would want the Oxberry animation camera to continue on, or you would want the Oxberry to shoot the background—but the various pieces of equipment would all be speaking a common language. This means that we could move back and forth from department to department and thereby increase the scope of the type of work that we can do.

Actually, the tracking camera we built for Star Wars is fairly primitive. At the time it was the best we could do, being pretty much under the gun, as we were — and we didn't have the success that we now have behind us. We were all learning, so we took the most conservative approach in terms of building the system. I think that was a really good move because, first of all, it worked when we plugged it in and we didn't have the heartaches of finding out that it wouldn't work. We knew that once we learned how to use the equipment, it would be much more of a sure thing — so there is a lot to be said for that approach when I lay back and talk about our "fantasy" system.

You have to work into a system like that slowly, a step at a time, because if it gets too complex, you will wind up with something that won't work unless it all works. We don't want to get into that position, because we all know that when you have a lot of components that are all prototypical, you're going to have problems and there are going to have to be people working out those problems all the time. So if you try to build the thing all at once to be the "ultimate" system, you will wind up failing. I think you have to go a step at a time.

The system that we have now is not a computer system. It's a solid-core memory system, and the electronics very precisely remember what you've done in programming a shot. It's all done by humans and not by computers — which brings us to the subject of our philosophy of motion control.

I would say that there are two basic philosophies of motion control. One is the concept of letting it all be done by mathematics, or depending heavily on the mathematically based move. The other concept allows the human being to program the move. When the mathematician programs the move, it comes out in a perfectly mathematical parabola. The shot is so perfect that it is not interesting. The trajectories are all perfect — and predictable. On the other hand, if you enter the human element into it — which is what you'd really prefer if you wanted the material you are photographing to have something of the look of a guy out there with a hand-held Arriflex shooting it — then you would have a certain suspense. It would be very peripheral; you wouldn't know why it was there — but you would know that the shot had not been done by a machine. Therefore, your material would have an effect that is altogether different from that of material that has been programmed mathematically. Not that there aren't certain cases in which you would want to use mathematically based material. There are specific times when you do want to have that precise kind of control and it would be very agonizing to try to get it the other way. But, in our operation, we tend to lean toward the human operator interface, rather than the mathematical interface. However, the new system that we are contemplating will be able to do both, and it will have an essentially unlimited number of channels.
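The difference between the two philosophies can be illustrated with a small sketch. It is a toy model, not the system described here: the function names are hypothetical, and the seeded random wobble is merely a stand-in for an operator's hand on the controls.

```python
import random


def mathematical_pan(frames, start, end):
    """Pan angles along a parabola: position grows with the square of time."""
    return [start + (end - start) * (i / (frames - 1)) ** 2 for i in range(frames)]


def operator_pan(frames, start, end, wobble=0.3, seed=1980):
    """Same overall move with small per-frame deviations added.

    The seeded random wobble is only a stand-in for a human hand on the
    controls; a real system would record the operator's input instead.
    """
    rng = random.Random(seed)
    ideal = mathematical_pan(frames, start, end)
    return [p + rng.uniform(-wobble, wobble) for p in ideal]


machine_move = mathematical_pan(5, 0.0, 10.0)  # perfectly smooth and predictable
human_move = operator_pan(5, 0.0, 10.0)        # same move, slightly irregular
```

Either list could be fed to the same playback hardware, which is the sense in which one system can serve both approaches.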

The system that we have now has 12 channels. You have your pan, tilt and roll. Then you have your track (for the camera) and your model track and, let's say, three functions for moving the model in X, Y and Z pitch, yaw and roll.

Most of the time you don't need all of those channels. Almost invariably you use pan and tilt; not always do you use roll; not always do you use boom, but you always use the track. I would say that we use five or six channels per shot, on the average. I've only had two or three shots where I've used all twelve channels. But they can come in handy when we have situations where we might want to motorize a lighting effect, or there is a miniaturized actor as a model that turns his head or lifts his shoulder or does something that takes the deadness out of the stick figure.

The new system will be modular. It will have four-channel components, so that you can add more axes of motion simply by adding more banks of computer cards. What we are really trying to do — as much as we are involved in equipment or "technolust" — is to make the system fun to use, so that the operators can exercise their ideas and add to the product in the most facile manner.
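As a rough illustration of the modular idea, the number of four-channel banks a shot would need is a ceiling division. The axis names below are hypothetical examples, not a list of the system's real channels.

```python
CHANNELS_PER_BANK = 4  # from the text: four-channel components


def banks_needed(axes):
    """Number of four-channel banks required to drive the given axes."""
    return -(-len(axes) // CHANNELS_PER_BANK)  # ceiling division


# Hypothetical axis list for one shot; the names are illustrative only.
shot_axes = ["pan", "tilt", "roll", "boom", "track", "model_track"]
banks = banks_needed(shot_axes)  # six axes fit in two banks

# Adding three more motorized functions pushes the shot into a third bank.
extra_axes = ["lighting_effect", "head_turn", "shoulder_lift"]
banks_with_extras = banks_needed(shot_axes + extra_axes)
```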

To execute the complex three-dimensional stop-motion animation in The Empire Strikes Back, we have Jon Berg and Phil Tippett. These are two animators who have knocked their heads against a wall for years and years and have really learned their art and they are truly adept men. I think they are the greatest and they have really done some marvelous stop-motion work on Empire, including quite a lot of pioneering in the art.

I've already talked a lot about our main tool, which is motion-control photography and which allows us to repeat identically the same move once it has been programmed. The other major tool that goes hand-in-hand with that is the bluescreen process.

I had considerable experience with the bluescreen process during the years of 1963 through 1967 when I was working with Joseph Westheimer, ASC — who was my mentor in the business. I was a photographer prior to that and, through his good offices, I worked with him for four years and learned a great deal about special effects. We started working out the early problems of bluescreen while I was associated with him.

I became extremely enamored of the process, because it enables one to extract a perfectly fitting matte from the same negative that the image is on. There are many ways of doing bluescreen, and every self-respecting optical house has its own bluescreen technique. They are all somewhat different. Some of them work well and some of them work in certain circumstances and not in others. Some don't work at all.

I remember when I first suggested to George Lucas that we shoot all the Star Wars material in England by means of bluescreen, rather than front projection. I knew I was really sticking my neck out, because there is nothing that makes a director more paranoid than going to dailies and seeing all of his perfectly timed and matched action with a blue background that, by itself, is totally useless. He is, thereby, putting himself completely in the hands of the person who is going to do the special effects. Since, at that time, we hadn't had any experience with him, and we were learning also, it was rather tenuous. But we wound up doing it that way, since the front-projection techniques didn't work out to be logistically possible.

We worked out a system of doing bluescreen on Star Wars and it was adequate for the type of work we were doing at the time, which was mainly spaceships over star backgrounds. In deep space you have a lot of license that you can take because nobody has ever been there and we don't really know what it looks like. Besides, all you have to do is matte out stars to keep them from going over an object and do it so that the matte lines won't show.

There are some perfect matte shots in Star Wars. The system that we worked out in the meantime is based on a continuous-tone film as the matting stock; we do not use high-contrast film at all in the matting process, except for garbage mattes or something like that. The thing is that the edge quality of high-contrast film is so different from that of color negative that you wind up clipping the matte lines and clipping the edges of the film. That works out pretty well in a shot that moves along (if you have a one-second cut you may not notice it), but if you get into a shot that plays for any length of time on the screen, it doesn't work when it slows down past a certain point. So we've developed the system now so that we are able to achieve perfectly believable matte shots in most cases, and we would, I think, be able to do it in all cases if we had as much time as we'd love to have.
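The effect of matte edge quality can be sketched numerically. The example below is a digital analogy under stated assumptions, not the photochemical process itself: it treats the matte as a 0-to-1 value along one scanline crossing an object's edge, and it models a high-contrast matte as a simple threshold of the continuous-tone one.

```python
def composite(foreground, background, matte):
    """Blend two scanlines; matte 1.0 keeps foreground, 0.0 keeps background."""
    return [m * f + (1.0 - m) * b for f, b, m in zip(foreground, background, matte)]


def high_contrast(matte, threshold=0.5):
    """Assumed model of a high-contrast matte: every value snaps to 0 or 1."""
    return [1.0 if m >= threshold else 0.0 for m in matte]


# One scanline across a soft edge: bright object on the left, dark sky on the right.
foreground = [0.8] * 5
background = [0.2] * 5
continuous_matte = [1.0, 0.75, 0.5, 0.25, 0.0]

soft_edge = composite(foreground, background, continuous_matte)
hard_edge = composite(foreground, background, high_contrast(continuous_matte))
```

With the continuous-tone matte the edge falls off gradually from 0.8 to 0.2, while the thresholded matte jumps straight from one to the other, which is the clipped look that becomes visible when a shot stays on screen.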

The bluescreen process is dependent upon good photographic technique, probably more so than it is on the printer that you work with. But the general optical systems that are available and are designed basically for the workhorse types of opticals (fades, dissolves, reductions, flops and the various blow-ups for getting rid of the mike booms) — that type of printer is not acceptable for composite photography, if you are doing a great amount of it.

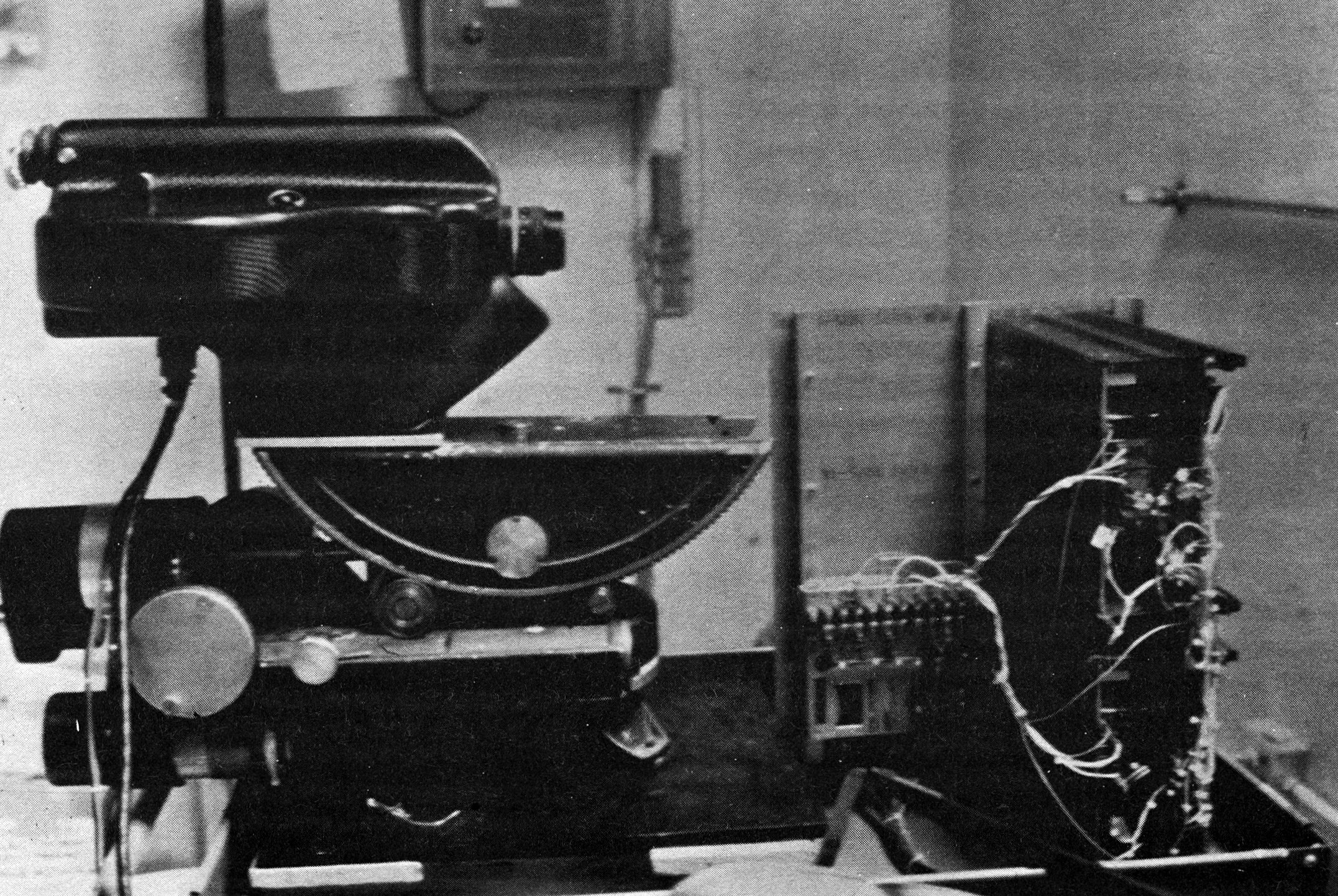

Consequently, we designed a printer for composite photography that has a couple of basic differences from the standard optical printer. We were able to design a printer for doing bluescreen work and it has the finest possible optics that could be achieved within the time frame. In fact, even if we had had more time, I don’t think we could have gotten a better lens.

We designed what is actually a four-headed, optical, beam-splitter printer. It takes two pairs of VistaVision projectors, each pair with a relay lens that is distortion-free between it and an anamorph that then takes the VistaVision image and reduces it to a 2-to-1 anamorphic ratio suitable to intercut with the film, which was shot in Panavision [by Peter Suschitzky, ASC]. This lens has exceeded our expectations. In about 30 to 40 percent of the shots we have actually had to degrade the image in order to make it look real. It looks too crisp in certain cases and we have been in a position of degrading the image to make it fit, rather than degrading the background to make the image work with it. So I think we've achieved a marked degree of success with our new printer and the results will show on the screen.

On Empire, just as on Star Wars, we have used lenses with tilting mounts for shooting our miniatures. That was the first thing I decided we needed on the camera. It harks back to my days as a still photographer. I started out with a view camera and soon learned the advantages of being able to tilt the lens to achieve more depth of field than was normally possible when the lens was parallel to the film plane. If you are photographing (as you are in most cases) an object which is tilted toward the lens, such as a landscape going out before you, and you see the foreground 3' away and it goes back to infinity, and there are just clouds or a bald sky, you can achieve focus from two inches to infinity by tilting the lens forward. The cardinal sin in miniature photography is to have something soft in the foreground; that immediately brands it as a miniature, so you must have extreme control over your focus.
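The geometry behind the tilted lens is the Scheimpflug principle. A minimal sketch follows, assuming the simplified "hinge rule" J = f / sin(tilt), a film plane held vertical and a flat, level miniature surface; the function name and the example figures are illustrative and are not taken from the production.

```python
import math


def tilt_for_surface(focal_length_mm, lens_height_mm):
    """Lens tilt in degrees that places the hinge line on a level surface.

    Assumes the hinge rule J = f / sin(tilt), where J is the distance from
    the lens down to the surface, measured parallel to a vertical film plane.
    """
    if lens_height_mm < focal_length_mm:
        raise ValueError("lens must be at least one focal length above the surface")
    return math.degrees(math.asin(focal_length_mm / lens_height_mm))


# Hypothetical set-up: a 50mm lens 300mm above a miniature landscape.
tilt_degrees = tilt_for_surface(50.0, 300.0)
```

Under these assumptions a tilt of under ten degrees is enough to lay the plane of sharp focus along the tabletop, so the foreground and the far distance can be sharp at once.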

This is by no means the first time that motion picture lenses in tilting mounts have been used. Actually, the late Hal Mohr, ASC had a ball-joint 50mm lens in his goodie box, which he would drag out and astound people with occasionally when the need arose, so I'm really just carrying on the tradition. A tilting lens board, to me, is an absolutely necessary part of miniature photographic equipment. We have several focal lengths available with tilting mounts.

We chose Nikon lenses for our special effects work because they have the best range of optics for the SLR cameras. The fact that there are so many SLR lenses available is another good reason why VistaVision (or an 8-perf, pulled-across film format) is the best effects format. We have a range of lenses from a 13mm rectilinear wide-angle lens to a 1000mm mirror lens and all of the units in between. All of the focal lengths have one flange distance; they all have the same filtering size. They are all of the same color, so they intercut well. One doesn’t tend to be yellow and another one blue, causing that sort of problem. They are all very close to being the same in terms of the look they produce. By that I mean that one isn’t crisper than the other one. There are, of course, certain lenses that are the sharpest and that we use for specific purposes, but from the wide-angles to the longer lenses they are all about the same quality, which is an advantage. Another plus is that we are able to buy these lenses for a few hundred dollars each. We'd have to think a little bit harder about using VistaVision were it not for the range of lenses available in that format.

I'd like to go into a bit more detail about the cameras we built for The Empire Strikes Back. We decided that if we were going to go ahead and design a camera, several could be built. As I mentioned previously, we've built two at the present time. One of them has a range from sound speed to unlimited time exposure. The other one presently goes from 96 fps to one second, but at some point this camera will be capable of going from 96 fps to long time exposures, as well.

We decided to build a VistaVision reflex camera because a reflex camera is generally the most pleasant to use, the most versatile and you can see what you are shooting as you shoot it. You can also add video viewfinders to relay the exact image that you are photographing.

It's very tricky to design a mirror-reflex system and get it to a point where the image is steady, but, nevertheless, we decided to do that and we have done it. The camera is small. It uses Arriflex magazines, which is the only sane way to build a special-purpose camera, since part of the camera is already built into the magazine. You only have to drive your magazine and you have the loop formed for you automatically.

The magazine load is rather small, only 400', which in the 8-perf VistaVision format holds the same number of frames as a 200' load in standard 4-perf 35mm photography. At any rate, since these are Arriflex magazines, they can be changed rather quickly. Since our cameras were designed specifically as effects cameras, we were not interested in putting 2,000' magazines on them for production use.
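The footage arithmetic behind a magazine load can be checked quickly. The sketch below assumes the standard 64 perforations per foot of 35mm stock, an 8-perf VistaVision frame and a 4-perf conventional frame; the function names are illustrative.

```python
PERFS_PER_FOOT = 64  # standard 35mm perforation count per foot


def frames_in_load(feet, perfs_per_frame):
    """Whole frames available in a magazine load of the given length."""
    return feet * PERFS_PER_FOOT // perfs_per_frame


def run_time_seconds(feet, perfs_per_frame, fps):
    """How long the load lasts at a given camera speed."""
    return frames_in_load(feet, perfs_per_frame) / fps


vistavision_frames = frames_in_load(400, 8)      # 8-perf horizontal frame
standard_frames = frames_in_load(200, 4)         # 4-perf vertical frame
sound_speed_run = run_time_seconds(400, 8, 24)   # seconds at 24 fps
high_speed_run = run_time_seconds(400, 8, 96)    # seconds at 96 fps
```

A 400' VistaVision load works out to 3,200 frames, a little over two minutes at sound speed but only about 33 seconds at 96 fps, which is why quick magazine changes matter.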

Our camera design includes a bloop light which sends a pulse to the recorder if you need to record sound. It has a ground glass viewing device that permits you to put a film clip in the finder on pins that are registered to and lined up with the register pins in the camera. This makes it possible for you to look through the clip and compose a matte shot in relation to a miniature previously shot, or vice versa. You can line it up exactly and see the perspective of the other shot in the finder. You can match forced perspective shots and things of that nature. As far as I know, this is the only reflex camera that has that capability. There are rackover cameras that have it and it's not a new idea. What we have designed is just a new implementation of an old idea. Much of our new effects technology has evolved that way, based on tricks that go all the way back to the original King Kong.

The camera has a reflex butterfly-type mirror shutter, which is similar to that used in most reflex cameras. The shutter is traditional in that it is one-to-one to the movement, which means that there is no chance of one frame getting slightly less exposure than another. Such a problem would be much more pronounced in our work, since we have to deal with the image in duplication so many times. We have one shot in The Empire Strikes Back that has 20 separately photographed bluescreen shots, each of which was put in as a separate item, and we managed to achieve sufficient matte density, without matte lines, so that the scene didn't start to get milky after putting that many elements in.
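Why matte density matters more as elements pile up can be shown with a simplified model. The sketch assumes that each printing pass leaks a fraction 10^(-D) of the printer light through a matte of density D, and that the leaks from separate passes simply add; real stocks and printers behave less tidily, and the densities used are illustrative.

```python
def leak_fraction(matte_density):
    """Fraction of light transmitted by a matte of the given optical density."""
    return 10.0 ** (-matte_density)


def accumulated_leak(matte_density, passes):
    """Assumed total stray exposure after several passes (leaks simply add)."""
    return passes * leak_fraction(matte_density)


# Twenty elements printed through mattes of two illustrative densities.
thin_mattes = accumulated_leak(2.0, 20)    # each pass leaks 1 percent
dense_mattes = accumulated_leak(3.0, 20)   # each pass leaks 0.1 percent
```

In this model, twenty passes through mattes of density 2.0 add stray exposure equal to a fifth of the printer light, enough to lift the blacks, while density 3.0 keeps the total to about two percent.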

Eastman Kodak has been very generous and very helpful to us on this production. We asked them to make 5247 on an Estar base for us and they agreed to do so. As a result, we shot all of our special effects on Estar base film, including the high-speed work and all of the separations, mattes and other elements. There were five different emulsions that we received on Estar base, 5247 being the first. The separation stock, 5235, had been supplied previously; 5369 had been supplied to the microfilm industry previously. I don't know about 5302, which we use for mattes sometimes. However, this was the first time they'd ever put 5247 on Estar. The live photography was not shot on Estar base, but the matte shots were wherever possible and this gave us a somewhat better dimensional stability than we were able to get on acetate base. Not that there is anything wrong with acetate base; it's just that because our parameters are so stringent and we deal with so many elements, there are many factors that play in our favor with Estar base. Even our daily prints came back on Estar, which meant that they didn't get chewed up in the projectors. Such accidents, which are common with acetate prints, would have killed us timewise, because we would have been forced to wait for reprints. The toughness of the Estar base 5247 was important to us, but our main reason for using it is its dimensional stability.
