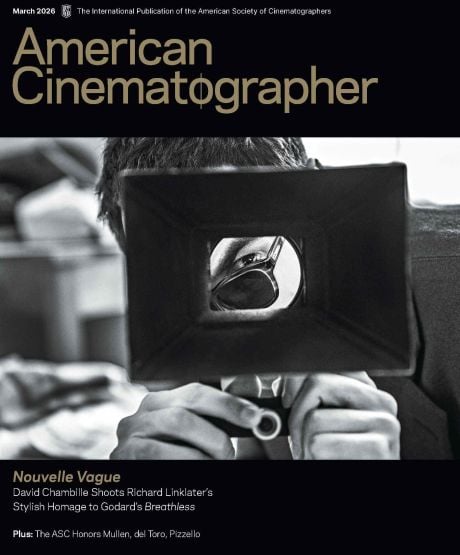

A Virtual World: The Matrix Awakens

State of the art and tech in The Matrix Awakens.

…iconic “bullet time” sequence was designed for The Matrix Awakens: An Unreal Engine 5 Experience.

Images courtesy of Epic Games

The Matrix Awakens, an interactive companion piece to the feature The Matrix Resurrections, offers a deep dive into a simulated world. Created through a collaboration between writer-director Lana Wachowski and Epic Games for Sony’s PS5 and Microsoft’s Xbox Series X/S consoles, the production blurs the line between live-action cinematography and fully computer-generated imagery. The project, subtitled An Unreal Engine 5 Experience, demonstrates the cutting edge of game-engine technology and its ability to render complex imagery in real time.

Impressive as the production is as a game-style experience, the complexity, photorealism and real-time rendering that players see, along with the technology that makes them possible, are also a good indicator of the advances quickly coming down the pike for filmmakers working in virtual environments.


What Is the Matrix?

With actors Keanu Reeves and Carrie-Anne Moss reprising their roles as Neo and Trinity, The Matrix Awakens opens with iconic shots from the first Matrix film re-created in CG, followed by a new scene, exclusive to the experience, that was partly shot in live action and incorporates motion-captured digital characters. It then transitions to a playable action sequence, introduced by dialogue between Neo and Trinity as their younger digital selves, and finally to an open-ended city exploration that showcases the photorealistic real-time rendering and complex simulation capabilities of Unreal Engine 5, the latest version of Epic Games’ engine.

Epic’s team included CTO Kim Libreri, senior cinematics artist Colin Benoit, senior technical artist Peter Sumanaseni, art director Jerome Platteaux, and “digital humans” special projects supervisor George Borshukov. Creative producer and technologist John Gaeta was brought on as well, as was technical animator Marko Brkovic of 3Lateral Studio, an Epic company, along with numerous others. Many of the team members had worked on the original Matrix trilogy in key technical and visual effects roles, helping to pioneer its groundbreaking visual effects techniques, including “bullet time.”

Cinematics Through Simulations

The project began in July 2020, Libreri reports, when Wachowski inquired about using real-time animation and LED volumes for Resurrections. “She wanted to know how much development was needed ahead of shooting environments in a volume, and I explained the process,” he says. “So, we built the forest environment for the dojo scene, which was needed for the feature, in Unreal. The producers ultimately couldn’t find a suitable LED volume for the shoot in Germany — where a large portion of the production took place — so they went with greenscreen, and DNeg composited our Unreal environment into the shots during postproduction.”

However, working with the filmmakers on the forest guided the Epic team toward enhancements to its game engine, which would lead to the interactive experience that became The Matrix Awakens. “Deep in our philosophy was ‘cinematics through simulation,’” Libreri explains. “It’s not only the camera work — it’s providing the player with the ability to drive around a virtual city and perform physically accurate stunts. Much of our schedule was building a virtual city and developing a new version of the engine to render it.”

Digital Humans

The production divided the work with actors into live-action cinematography and motion capture. Wachowski and cinematographer Daniele Massaccesi, who also shot the Berlin portions of The Matrix Resurrections, photographed the live-action segments at Studio Babelsberg near Berlin, using the same Red Monstro cameras, Panavision Panaspeed lenses and Zeiss 28-80mm T2.9 CZ.2 zoom that were employed on the feature. Wachowski also directed motion-capture sessions in Berlin using OptiTrack infrared mocap cameras and Cubic Motion head-mounted cameras.

After completing the live-action shoot of The Matrix Awakens, and amid postproduction on Resurrections, Reeves, Moss and director James McTeigue traveled to 3Lateral Studio in Novi Sad, Serbia, for additional scanning and performance-capture sessions. According to technical animator Brkovic, the artists deployed various scanning techniques depending on the level of detail required. “We did full-body mocap with head-mounted cameras to capture acting and physical performances,” he says. “We also used our mobile 4D volumetric scanner and a larger seated scanner comprising a partial sphere of machine-vision cameras to capture close-up facial performances. Finally, we have a full-body scanner to capture costumes and other physical details.”

To up the ante on the visual fidelity, the production sought out archived volumetric captures of the actors made during the original Matrix trilogy. “Warner Bros. provided us with archival data tapes from The Matrix Reloaded from a storage facility in a salt mine in Utah,” says Libreri. “It’s not just 2D archival footage — it’s full, universal-capture volumetric data captured in HD with an array of [five] Sony [HDW-F900] cameras. George Borshukov supervised this in 2002.” That data originally drove the virtual cinematography in The Matrix Reloaded for scenes such as the slow-motion brawl between Neo and many versions of Agent Smith (AC June ’03).

To produce the final digital characters for The Matrix Awakens, the team brought the raw body and facial motion-capture data into Epic’s MetaHuman Creator and combined it with physical simulation and AI techniques to create realistic virtual character movement. “We’re not just capturing facial animation with something simple like a phone camera,” says Libreri. “We’re using high-resolution stereoscopic imagery. We also have a brand-new animation processing pipeline co-developed by 3Lateral and Cubic Motion,” both of which are Epic companies.

Virtual Shoot

Players experiencing The Matrix Awakens see the perspective shift between non-interactive cinematic sequences and interactive sections. Epic labored to make its virtual cinematography match the look and feel of the movie’s live-action cinematography, work shepherded by cinematics artist Benoit and technical artist Sumanaseni.

“We’re using ray-traced area lights and image-based lighting within Unreal Engine to mimic the look of the real-world cinematography,” explains Sumanaseni, whose career includes a long tenure at Pixar Animation Studios, where he worked on such titles as Finding Nemo, The Incredibles, Cars, Ratatouille, Up, and Brave. “In Unreal Engine 5, we have Lumen real-time global illumination. That means we’re lighting not only with the light source itself, but all the light bouncing off other surfaces and sources, which gives a richness and realism you don’t normally see in real-time animation.”
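Sumanaseni’s point about bounce light is the heart of global illumination: a surface is lit not only by the lamp but by every other surface the lamp has lit. Lumen works very differently and is far more sophisticated, so the sketch below swaps in the textbook radiosity iteration purely to illustrate the idea. The three-patch scene and all of its numbers are made up.

```python
from typing import List, Sequence


def gather_bounces(
    emission: Sequence[float],
    reflectance: Sequence[float],
    form_factors: Sequence[Sequence[float]],
    bounces: int,
) -> List[float]:
    """Textbook radiosity iteration: B = E + rho * (F @ B), repeated `bounces` times.

    Starting from B = E, bounces=0 gives emitted light only; each extra pass
    adds one more bounce of indirect light.
    """
    n = len(emission)
    radiosity = list(emission)
    for _ in range(bounces):
        radiosity = [
            emission[i]
            + reflectance[i] * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
    return radiosity


# Hypothetical toy scene: a light panel, a wall it faces, and a floor
# that sees the wall but has no line of sight to the light.
EMISSION = [1.0, 0.0, 0.0]      # light, wall, floor
REFLECTANCE = [0.0, 0.5, 0.5]   # fraction of incoming light each patch re-emits
FORM_FACTORS = [
    [0.0, 0.5, 0.0],  # light sees the wall
    [0.4, 0.0, 0.4],  # wall sees the light and the floor
    [0.0, 0.5, 0.0],  # floor sees only the wall
]

if __name__ == "__main__":
    for k in range(4):
        print(k, gather_bounces(EMISSION, REFLECTANCE, FORM_FACTORS, k))
```

With direct light alone the floor stays black; after a second pass it picks up light relayed by the wall, which is the kind of richness Sumanaseni describes.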

Because Unreal is a game engine, the team could go beyond simply setting keyframes for virtual-camera moves. For example, while creating the vehicle action scenes, Benoit could drive the virtual stunt cars himself. “I drove the vehicles around to block out an entire sequence, and then created the camerawork to go with those performances,” he says. “We experimented with using a [physical virtual camera] in the early days of the demo, but ultimately, the final cameras were driven along virtual paths to simulate dolly, crane and Russian Arm rigs, with keyed operation and motion-captured shake to mimic live-action camera imperfections.”

Sumanaseni also used post-processing to re-create the iconic green tint familiar to fans of the Matrix trilogy. “The post tools in Unreal are pretty robust,” says Sumanaseni. “We can go down to the level of shadows and apply different color corrections to them from the rest of the image. We also discovered that the original green color correction was more prominent on the home-video versions of The Matrix than the theatrical release. So, we aimed for something more like what we collectively remember it looking like.” Libreri notes that they referred to his own “archive of the original graded digital shots from the movie — the rooftop bullet-time shot, in particular.”
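Correcting shadows separately from the rest of the image usually comes down to weighting an adjustment by how dark each pixel is. The sketch below illustrates that general idea under assumptions of its own: Rec. 709 luma weights, a squared falloff for the shadow mask, and invented gain values for a green push. It is not how Unreal Engine’s post-process grading is implemented.

```python
from typing import Tuple

RGB = Tuple[float, float, float]

# Rec. 709 luma coefficients (they sum to 1.0).
LUMA_WEIGHTS: RGB = (0.2126, 0.7152, 0.0722)

# Hypothetical per-channel shadow gains: pull red and blue down, push green up.
MATRIX_GREEN_SHADOWS: RGB = (0.85, 1.10, 0.85)


def clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


def luminance(rgb: RGB) -> float:
    """Weighted sum of the channels, approximating perceived brightness."""
    return sum(w * c for w, c in zip(LUMA_WEIGHTS, rgb))


def shadow_weight(rgb: RGB) -> float:
    """1.0 for black, falling smoothly to 0.0 for white (assumed squared falloff)."""
    return (1.0 - clamp01(luminance(rgb))) ** 2


def grade_shadows(rgb: RGB, shadow_gain: RGB) -> RGB:
    """Weight shadow_gain most heavily in dark tones and fade it out toward white."""
    w = shadow_weight(rgb)
    r, g, b = (
        clamp01(c * (1.0 + w * (gain - 1.0)))  # blend from unity gain toward shadow gain
        for c, gain in zip(rgb, shadow_gain)
    )
    return (r, g, b)


if __name__ == "__main__":
    for pixel in [(0.2, 0.2, 0.2), (0.5, 0.5, 0.5), (0.9, 0.9, 0.9)]:
        print(pixel, "->", grade_shadows(pixel, MATRIX_GREEN_SHADOWS))
```

Because the mask is derived from luminance, pure black stays black and near-white pixels are almost untouched, so the tint concentrates in the shadows and lower midtones.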

Generating a Metropolis

After the car chase in The Matrix Awakens, players are deposited onto the streets of the virtual city, free to walk and drive wherever they wish. The photorealistic city combines visual elements of Chicago, San Francisco, Los Angeles, and other iconic urban landscapes. It encompasses 45,073 parked cars (38,146 of them drivable), more than 160 miles of roads, and 35,000 MetaHumans. The city leverages Unreal’s Nanite virtualized geometry system to render the entire area at once, without loading screens or wait times.

“We had a special collaboration with SideFX, the makers of Houdini [3D animation software], to generate the city procedurally via an importer for their modeling data,” says Libreri. “Instead of modeling each building, road or car directly, we define parameters: ‘Is it a downtown zone? An industrial zone? A freeway?’ Then the engine fills in all the ‘super detail’ like building windows, concrete sidewalks, air conditioners on buildings, AI-driven traffic — everything. This technology is all very compatible with in-camera visual effects. The future is really like working in the Matrix, because you have control of everything with very high visual fidelity.”
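Libreri’s description, tagging areas with a few high-level parameters and letting the system fill in the detail, is a familiar procedural pattern. The Python sketch below shows that pattern only; it is not SideFX’s Houdini tooling or any Unreal Engine API, and every name, rule and number in it (Zone, ZoneRules, RULES, fill_lot, fill_block) is hypothetical.

```python
import random
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Zone(Enum):
    DOWNTOWN = "downtown"
    INDUSTRIAL = "industrial"
    FREEWAY = "freeway"


@dataclass(frozen=True)
class ZoneRules:
    floors: Tuple[int, int]      # inclusive min/max building height in floors
    windows_per_metre: float     # facade windows per metre, per floor
    ac_units: Tuple[int, int]    # inclusive min/max rooftop air conditioners


# Hypothetical rule table; freeway lots get no buildings at all.
RULES: Dict[Zone, ZoneRules] = {
    Zone.DOWNTOWN: ZoneRules(floors=(20, 60), windows_per_metre=0.8, ac_units=(2, 8)),
    Zone.INDUSTRIAL: ZoneRules(floors=(1, 4), windows_per_metre=0.2, ac_units=(0, 3)),
}


@dataclass(frozen=True)
class Building:
    lot: int
    floors: int
    windows: int
    ac_units: int


def fill_lot(zone: Zone, lot: int, facade_m: float, seed: int) -> Optional[Building]:
    """Expand one lot's high-level zone tag into concrete detail."""
    rules = RULES.get(zone)
    if rules is None:
        return None
    # A per-lot seed keeps results repeatable and independent of fill order.
    rng = random.Random(seed * 1_000_003 + lot)
    floors = rng.randint(*rules.floors)
    windows = round(facade_m * rules.windows_per_metre) * floors
    return Building(lot, floors, windows, rng.randint(*rules.ac_units))


def fill_block(zone: Zone, facades_m: List[float], seed: int) -> List[Building]:
    """Fill every lot in a block, skipping lots the zone leaves empty."""
    buildings = (fill_lot(zone, lot, f, seed) for lot, f in enumerate(facades_m))
    return [b for b in buildings if b is not None]


if __name__ == "__main__":
    for b in fill_block(Zone.DOWNTOWN, [30.0, 45.0, 22.5], seed=7):
        print(b)
```

Seeding each lot independently is a common choice in procedural pipelines: regenerating one block, or retagging a single lot, leaves the detail everywhere else unchanged.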