User Friendly: New Options for Shooting In-Camera Effects

With virtual production on the rise, cinematographers take real-time control in VFX environments.

Shortly before the Covid-19 pandemic took hold in the United States, AC explored revolutionary new techniques for virtual production and in-camera visual effects that were pioneered on such projects as The Mandalorian (AC Feb. ’20). Since then, this approach — which combines 3D scenery rendered on large, LED-screen volumes synchronized with tracked cameras — has started to mesh with remote-collaboration techniques that have become increasingly prevalent due to the safety guidelines and travel restrictions imposed by the pandemic. Even independent productions and modest start-up facilities are now looking to virtual production and in-camera visual effects to create content safely, and this, in turn, has led to a major increase in the number of LED “smart stages” in operation throughout the world.

While virtual production relies on new technology, it also leverages longstanding approaches first developed for rear-projection — and, like rear-projection, it offers a firsthand look at a nearly complete image. The result is a controlled environment rich with visual possibilities that places image control in the hands of the cinematographer.

“It’s the cinematographer’s eye that matches the color, contrast, perspective and everything else about a composite shot,” says David Stump, ASC. “That used to be delegated to someone else, who could spend hours and hours doing that in post. Now, it has to be an instinctual decision made quickly onstage.”

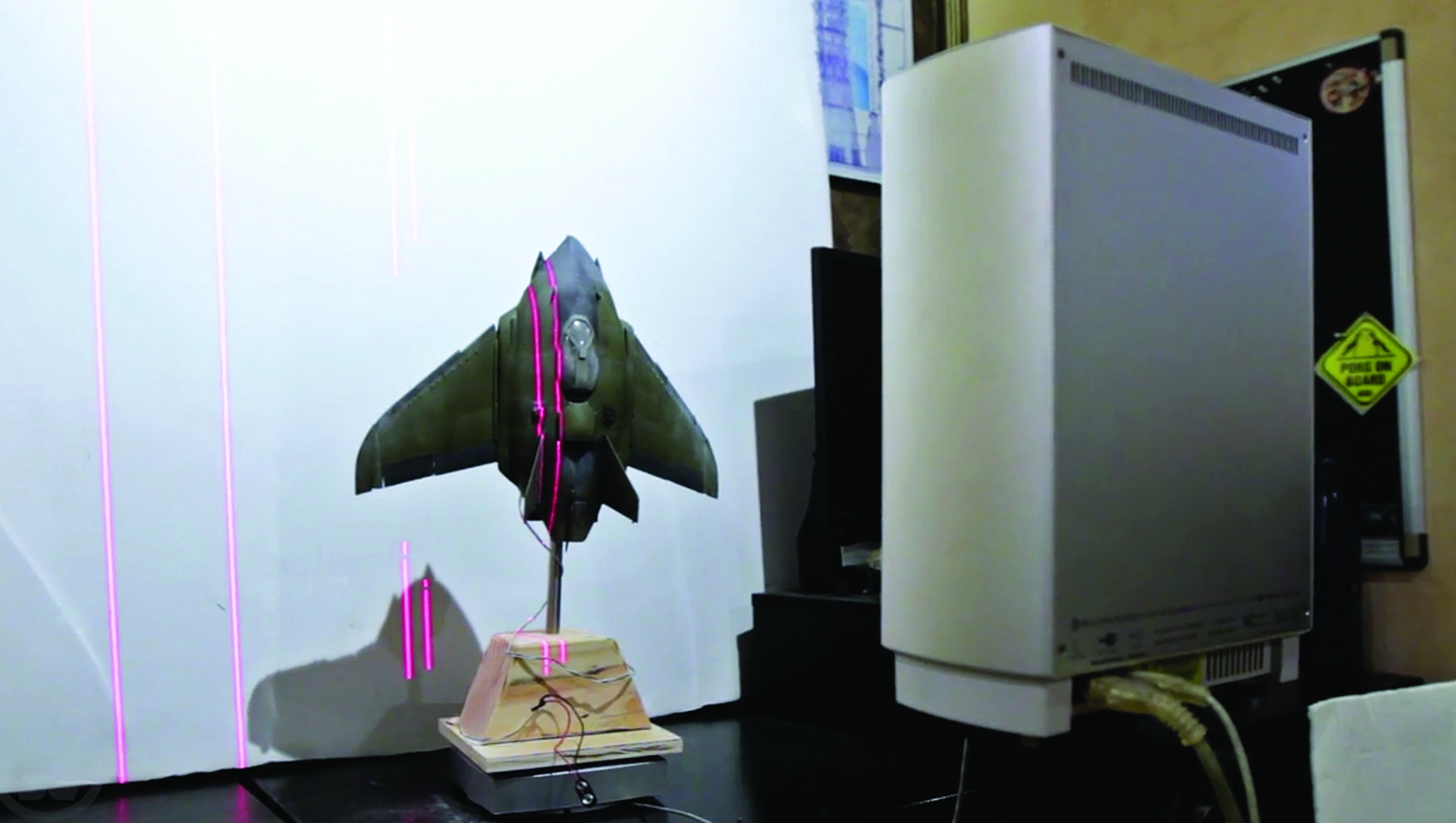

A seasoned visual-effects veteran who pioneered live-compositing techniques on 2006’s Red Riding Hood (AC April ’06), Stump is the visual-effects supervisor and co-cinematographer on Gods of Mars, an indie sci-fi feature that combines in-camera visual effects with traditional miniatures scanned into the digital realm via Lidar. The project, shot with Blackmagic Design’s Ursa Mini Pro 12K, is currently on hiatus, due to Covid-19. Work thus far was performed during the pandemic with a team dispersed throughout the U.S. — with the director, editor, composer, sound designer, animators and VFX artists collaborating online from Hollywood, Portland, West Palm Beach, Detroit, Dallas and Austin. The movie takes place during a civil war on Mars in 2099, and follows a disgraced fighter pilot who leads a group of mercenaries on a mission to take out a charismatic cult leader who’s spearheading a mining-colony rebellion.

“There are a couple dozen people in Hollywood who can say they’ve done many virtual productions,” Stump says. “I did environments and previs with [Unity Technologies’] Unity three or four years ago, but the notion of using a game engine like [Epic Games’] Unreal for final pixel production in-camera is new for everybody.”

Real-time game engines are the linchpin of the virtual process. The final effect is highly convincing to the camera, incorporating realistic, interactive lighting and matching camera parallax.
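The article doesn't spell out how a flat LED wall can show "matching camera parallax." The core geometry can be sketched as follows. It is a simplified model, not Epic's implementation. It assumes a flat wall in the plane z = 0, a tracked camera in front of it (z < 0), and virtual scenery behind it (z > 0). For each virtual point, the engine must draw it where the ray from the camera to that point crosses the wall. The `Vec3` type and `project_to_wall` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def project_to_wall(camera: Vec3, point: Vec3) -> Tuple[float, float]:
    """Return the (x, y) spot on a flat LED wall at z = 0 where a virtual point
    must be drawn so that, seen from the tracked camera, it lines up with its
    intended 3D position behind the wall.

    Assumed convention for this sketch: camera in front of the wall (z < 0),
    virtual scenery behind it (z > 0).
    """
    if camera.z >= 0 or point.z <= 0:
        raise ValueError("camera must be in front of the wall and the point behind it")
    # Fraction of the camera-to-point ray at which it crosses the plane z = 0.
    s = -camera.z / (point.z - camera.z)
    return (camera.x + s * (point.x - camera.x),
            camera.y + s * (point.y - camera.y))


# A camera 2 m from the wall slides 1 m sideways. A point 10 m "behind" the wall
# is redrawn about 0.83 m over, while a point 1 km away is redrawn almost the
# full 1 m over. That is why near objects appear to shift against far ones.
near_x, _ = project_to_wall(Vec3(1.0, 0.0, -2.0), Vec3(0.0, 0.0, 10.0))
far_x, _ = project_to_wall(Vec3(1.0, 0.0, -2.0), Vec3(0.0, 0.0, 1000.0))
```

Because the drawn position depends on where the camera is, this re-projection only looks correct from the tracked camera's viewpoint. That is one reason the camera has to be tracked and synchronized with the render.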

Stump’s co-cinematographer Michael Franks immediately recognized the advantages of capturing complex visual-effects shots in camera versus creating them with greenscreen composites. With the latter technique, “the difficulty is always matching the light in the plate to the light in the image,” notes Franks. “You want it to be seamless to create the illusion that the subject is in a snowstorm or in the rain. With the LED volume, you’re already starting with what looks like a real place.

“The pandemic has also created a lot of restrictions on traveling to a location and dealing with larger crews,” he adds, “and this technique enables us to do certain things that would otherwise be very difficult to accomplish under the restrictions — and we can do them in a controlled environment.”

Gods of Mars director Peter Hyoguchi, who has a background in visual-effects supervision, sees a best-of-both-worlds solution in building practical miniatures and converting them into digital models that can be photographed in real time. “We’re using photogrammetry and laser scanning to capture the miniatures and light them,” Hyoguchi says. “The beautiful photographic flaws of nature are what make reality look real and CGI look artificial. The next level is using these digital models to capture complete shots in real time.

“I find the traditional CG pipeline tremendously time-consuming and expensive,” the director adds. “Doing everything in-camera cuts our costs by 30 to 50 percent, and it’s much more efficient. I’m moving faster with a dozen visual-effects artists now than I was when I had 250 visual-effects artists on earlier projects. Working in this new medium is wildly different, but it’s been a great learning process.”

Hyoguchi does not believe the need for fewer crewmembers will lead to less work for industry personnel — in fact, he foresees the opposite. “It’s going to increase the number of productions that are made globally, by a substantial amount. Because you’ll be able to make a complex-looking visual-effects movie for a lot less money, a lot more of them will be made, and then the risk carried by any one film failing at the box office will instead be spread across many films, which means a more stable ecosystem and increased opportunities for work.”

“Even though large-scale shows like Westworld are using this technology already, I still feel like it’s in its infancy in terms of what’s possible,” Franks says. “I wonder what doors will be opened up later, like the ability to shoot multiple angles simultaneously. Also, as LED-module quality increases, we can worry less about getting too close to the screens and revealing any imperfections.”

Stump notes that the exponential growth in virtual-production techniques has created a parallel need to continually educate its practitioners. “It’s tough to spend enough time to learn all these new techniques,” he says. “Virtual production is just another tool in the box, but the toolbox is getting so big the need for education is greater than ever. That’s why the VES [Visual Effects Society] handbook is now so densely packed — there’s so much to know.”

“I feel like I’ve been to the frontier, and it’s great,” says Hyoguchi. “People just need to learn the new techniques. You can make a very low-budget movie look like Lawrence of Arabia. If it wins at the box office, great. And if it fails at the box office, the studio is not going to go under. It’s like the stock market: Do you want to put all your money into Nike, or would you rather have a diverse portfolio?”

Gods of Mars benefited from support from Epic Games, which assists emerging virtual-production filmmakers via technical outreach and Epic MegaGrants. “The MegaGrant program was created to help people who want to do something with Unreal but don’t have enough funds,” says David Morin, head of Epic’s Los Angeles Lab. “Or there might be reluctance on some part of the team to use new technologies versus proven methods. The grants are there to help ‘de-risk’ a project.” The Gods of Mars filmmakers tell AC that an Epic MegaGrant is covering half the cost of the production’s experimental research and development.

Epic has enhanced features in Unreal Engine that are of particular use to cinematographers, says Morin. “For one, the REST API allows you to extract some part of the Unreal Engine user interface and put it on a simpler interface where you can just move sliders. For example, a cinematographer can move the sky and alter the density of clouds or trees using an iPad — they don’t need to sit down in front of the full Unreal interface.” And with the planned 2021 release of Unreal Engine 5, the system’s image quality will rise significantly.
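To make the slider idea concrete, here is a minimal sketch of what a tablet app might send. It assumes Unreal's Remote Control web API, which appears to be the HTTP interface Morin is describing. That API listens on port 30010 by default and accepts `PUT` requests to `/remote/object/property` with a JSON body. The object path, the `CloudDensity` property name and the 0 to 100 slider range are hypothetical placeholders. Real values depend on the actors in the level being shot. The code only builds the request and does not send it.

```python
import json
import urllib.request

# Assumption: Unreal's Remote Control web server is enabled on the render
# machine and listening on its default HTTP port (30010).
UNREAL_HOST = "http://127.0.0.1:30010"


def slider_to_density(slider: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Map a 0-100 tablet slider position linearly onto [lo, hi], clamping out-of-range input."""
    slider = max(0.0, min(100.0, slider))
    return lo + (hi - lo) * slider / 100.0


def build_property_request(object_path: str, property_name: str, value) -> urllib.request.Request:
    """Build (but do not send) a PUT request that writes one property on one object.

    object_path and property_name are hypothetical; they must match an actor
    and property that actually exist in the running Unreal level.
    """
    body = {
        "objectPath": object_path,
        "access": "WRITE_ACCESS",
        "propertyName": property_name,
        "propertyValue": {property_name: value},
    }
    return urllib.request.Request(
        url=f"{UNREAL_HOST}/remote/object/property",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical usage: the cinematographer drags a "clouds" slider to 40.
req = build_property_request(
    "/Game/Maps/HypotheticalMars.HypotheticalMars:PersistentLevel.Sky_1",
    "CloudDensity",
    slider_to_density(40),
)
# urllib.request.urlopen(req) would deliver it to a live editor session.
```

Keeping the slider-to-value mapping separate from the network call means the on-set interface can expose friendly ranges while the engine receives whatever units the underlying property expects.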

Another critical cinematography tool that has been emphasized in Unreal is the virtual camera. “It’s a way to see your finished visual effects through the viewfinder or monitor as you’re shooting, by overlaying visual-effects imagery onto the live camera view,” Morin notes. “Unreal can now make a photoreal, real-time rendering of the visual effects in the digital world. That makes it possible to exercise your craft as a filmmaker and as a cinematographer on the digital scene without having to wait to see everything come together in post.”
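Morin doesn't describe how Unreal blends the two layers. A common generic technique for this kind of monitor overlay is the straight-alpha "over" operation, in which each CG pixel covers the live pixel in proportion to its alpha. The sketch below is a per-pixel illustration of that technique under two assumptions. First, the CG render and camera frame are already aligned through the camera track. Second, both are stored as lists of linear RGB tuples with one alpha value per CG pixel. This data layout is hypothetical and chosen for clarity, not taken from Unreal.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def over(fg: RGB, alpha: float, bg: RGB) -> RGB:
    """Straight-alpha 'over' blend: fg * a + bg * (1 - a), per channel."""
    a = max(0.0, min(1.0, alpha))  # clamp alpha to the valid range
    return tuple(f * a + b * (1.0 - a) for f, b in zip(fg, bg))


def overlay_frame(cg: Sequence[RGB], cg_alpha: Sequence[float],
                  camera: Sequence[RGB]) -> List[RGB]:
    """Composite a CG layer over a live camera frame, pixel by pixel."""
    if not (len(cg) == len(cg_alpha) == len(camera)):
        raise ValueError("CG, alpha and camera frames must be the same size")
    return [over(f, a, b) for f, a, b in zip(cg, cg_alpha, camera)]


# Two-pixel example: an opaque red CG pixel, then a half-transparent one,
# both over a blue live-action background.
frame = overlay_frame(
    cg=[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    cg_alpha=[1.0, 0.5],
    camera=[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)],
)
```

A production system would run the same arithmetic on the GPU for every frame. The per-pixel rule is what lets the operator judge framing against the finished effect rather than an empty set.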

Regarding the significant uptick in virtual production’s popularity since the advent of the pandemic, Morin notes, “It’s in times of disruption that innovation can leap forward. A typical filmmaker may feel stalled right now, and that is an ideal time to look at new technologies and retrain. In-camera visual effects have gone from a high-end technology used for major projects to something within reach of anyone.”

As more filmmakers express interest in virtual-production tools and techniques, smart stages are sprouting up to meet the demand. These stages often combine existing soundstage infrastructure with bespoke LED volumes ranging from smaller insert stages to stages capable of supporting elaborate feature work. For example, Mels Studios in Montreal recently unveiled its first virtual-production stage in collaboration with Arri and Epic Games. The stage uses 2.9mm Absen LED modules combined with a host of Arri equipment, including remote heads, Alexa cameras, wireless communications tools and Signature Prime lenses. The stage’s service departments are connected to its infrastructure via high-speed fiber-optic networks to support a decentralized, distanced production approach.

Epic Games also recently renovated its London Innovation Lab with a new, curved LED volume built with partners Brompton Technology, Arri and production company 80six. The setup includes 144 ROE Black Pearl 2.8mm LED panels, 38 ROE Carbon CB3 3.75mm full-size panels and eight half-size panels. The displays are fed by Brompton Tessera 8 video processors, which are calibrated with Brompton’s Hydra measurement system to support HDR display. The volume combines LED screens that display content directly with a host of Arri lighting, controlled by DMX, that augments the light generated by the screens.
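The article says only that the Arri fixtures are DMX-controlled to augment the screens. It doesn't say how their levels are chosen. One plausible approach, sketched here as an assumption rather than as the Lab's actual workflow, has two steps. First, derive a fixture intensity from the average Rec. 709 luminance of the content on the nearby wall section, using linear RGB values. Second, encode that intensity as a 16-bit coarse/fine DMX pair, a common layout for fixtures that support 16-bit dimming. Which DMX channels receive the two bytes depends on the fixture's mode, so that mapping is left out. The function names are hypothetical.

```python
from typing import Iterable, Tuple

# Rec. 709 luminance weights for linear R, G, B.
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def mean_luminance(pixels: Iterable[Tuple[float, float, float]]) -> float:
    """Average relative luminance of linear-RGB pixels sampled from the wall content."""
    wr, wg, wb = REC709_WEIGHTS
    total, count = 0.0, 0
    for r, g, b in pixels:
        total += wr * r + wg * g + wb * b
        count += 1
    if count == 0:
        raise ValueError("no pixels to sample")
    return total / count


def to_dmx16(level: float) -> Tuple[int, int]:
    """Encode a 0-1 intensity as a (coarse, fine) pair of 8-bit DMX values."""
    level = max(0.0, min(1.0, level))  # clamp to the valid dimmer range
    value = round(level * 65535)       # full 16-bit resolution
    return value >> 8, value & 0xFF    # high byte, low byte


# Hypothetical usage: sample a patch of the LED content near the fixture.
coarse, fine = to_dmx16(mean_luminance([(1.0, 1.0, 1.0), (1.0, 1.0, 1.0)]))
```

Splitting the level across two channels gives the fixture 65,536 dimming steps instead of 256. That helps avoid visible stepping when a light tracks slowly changing screen content.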

At press time, AC learned that Arri is currently at work on at least two LED virtual stages. One will be in Burbank for Arri, and the other will be for Arri Rental in the United Kingdom. The Burbank stage is scheduled for completion in January.

Dozens of other smart stages are in operation elsewhere in the world. These include Diamond View in the United States, Full/Frame/Figure in Italy, Mado XR in France, Orca Studios in Spain, Lavalabs in Germany, Ogel Sepol Productions in Brazil and Anvil Frame Studio in Russia. Each stage offers specific capabilities and different combinations of equipment and workflows, but they all take the same basic approach to delivering virtual imagery in camera that is nearly indistinguishable from live, on-location cinematography.

What’s becoming increasingly apparent is that there is a crucial role for the traditional cinematographer in this new paradigm. Cinematographers provide the eye for realism in a virtual world, and virtual-production tools are continually being updated to address their needs and workflows. Buoyed by constant advances in CPU/GPU power and LED panel quality, as well as increased cost efficiency, virtual production seems likely to grow, presenting significant opportunities for new adopters ready to step into the virtual waters.

Frequent AC contributor Noah Kadner is the author of Epic Games’ Virtual Production Field Guide (a free PDF download). He has previously reported for AC on the use of advanced game-engine technology in motion-picture production.